Model overview

Whether you've just added a new model or are checking on existing ones, the model overview page lists them all. For each model, it shows basic information such as performance on key metrics, any concept drift or covariate shift issues, data quality, and whether the latest monitoring run was successful.

Here is our video guide explaining how to use the model overview page:

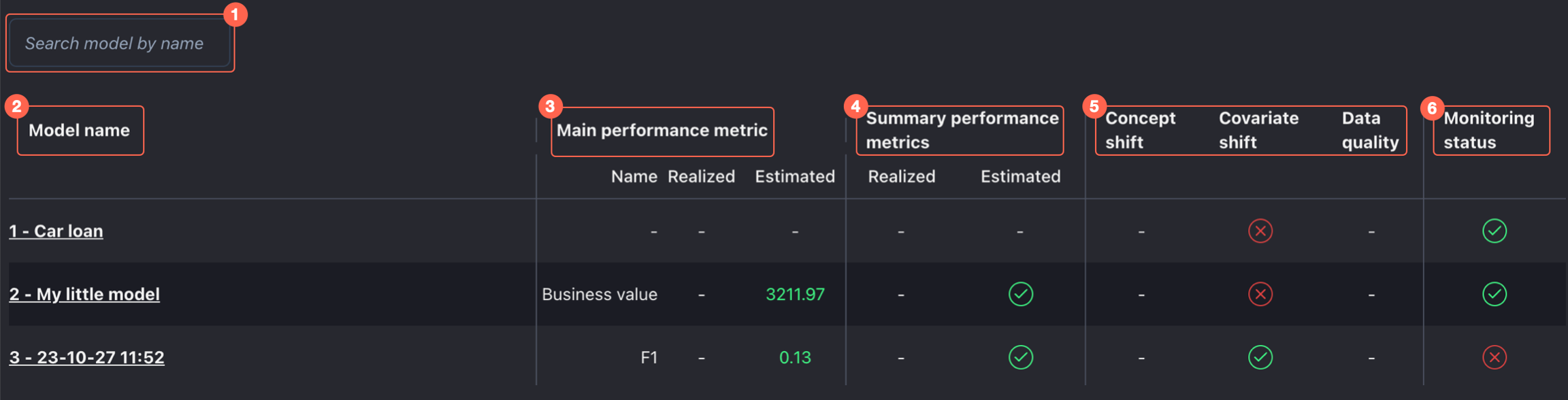

The model overview page is made up of six components:

Search model by name

When you have many monitored models in production, you can type in their names to quickly find the one that you're looking for.

Model name

To get a more in-depth analysis of a model, click on its name. You can change this name in the Model settings.

Main performance metric

This shows the model's most critical performance metric: its name and its realized and estimated values.

Summary performance metrics

These summarise the model's performance status on the realized and estimated metrics. Where available, hovering over them shows more information about other metrics.

Summary of data shift and quality results

These summarise the status of the concept drift, covariate shift, and data quality results. Hover over or click on them to see more information about these results.

Monitoring status

This indicates whether the most recent run of NannyML was successful. The possible states are:

Successful: when the most recent run didn't have any errors.

Error: when the most recent run did have errors.

Empty: when the model was newly created and there are no results yet.

Skipped run: when no new data was added, so the run was skipped. The results shown stay the same as before (Alerts, No Alerts, or Empty).

What an 'error' means:

An exception occurred while running the NannyML calculators.

Something else went wrong that prevented us from running NannyML, e.g., a timeout when starting a new job to run.
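The four monitoring states above follow a simple decision rule based on the most recent run. The sketch below illustrates that rule only. The `RunOutcome` enum and the `monitoring_status` function are hypothetical names invented for this example and are not part of NannyML or NannyML Cloud. It also assumes that a model which has never produced results shows Empty, as described above.

```python
from enum import Enum
from typing import Optional


class RunOutcome(Enum):
    """Hypothetical outcome of the most recent monitoring run."""
    COMPLETED = "completed"      # calculators ran without errors
    FAILED = "failed"            # calculator exception, job start timeout, etc.
    NO_NEW_DATA = "no_new_data"  # nothing new was added since the last run


def monitoring_status(last_run: Optional[RunOutcome]) -> str:
    """Map the most recent run to the status label shown on the overview page."""
    if last_run is None:
        # Newly created model: no run has produced results yet.
        return "Empty"
    if last_run is RunOutcome.NO_NEW_DATA:
        # The run was skipped; previously shown results stay unchanged.
        return "Skipped run"
    if last_run is RunOutcome.FAILED:
        return "Error"
    return "Successful"


for outcome in (None, *RunOutcome):
    print(outcome, "->", monitoring_status(outcome))
```

Note that a skipped run is reported separately from a successful one even though neither produced errors. This keeps it clear that the results on the page were not recalculated.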

Last updated