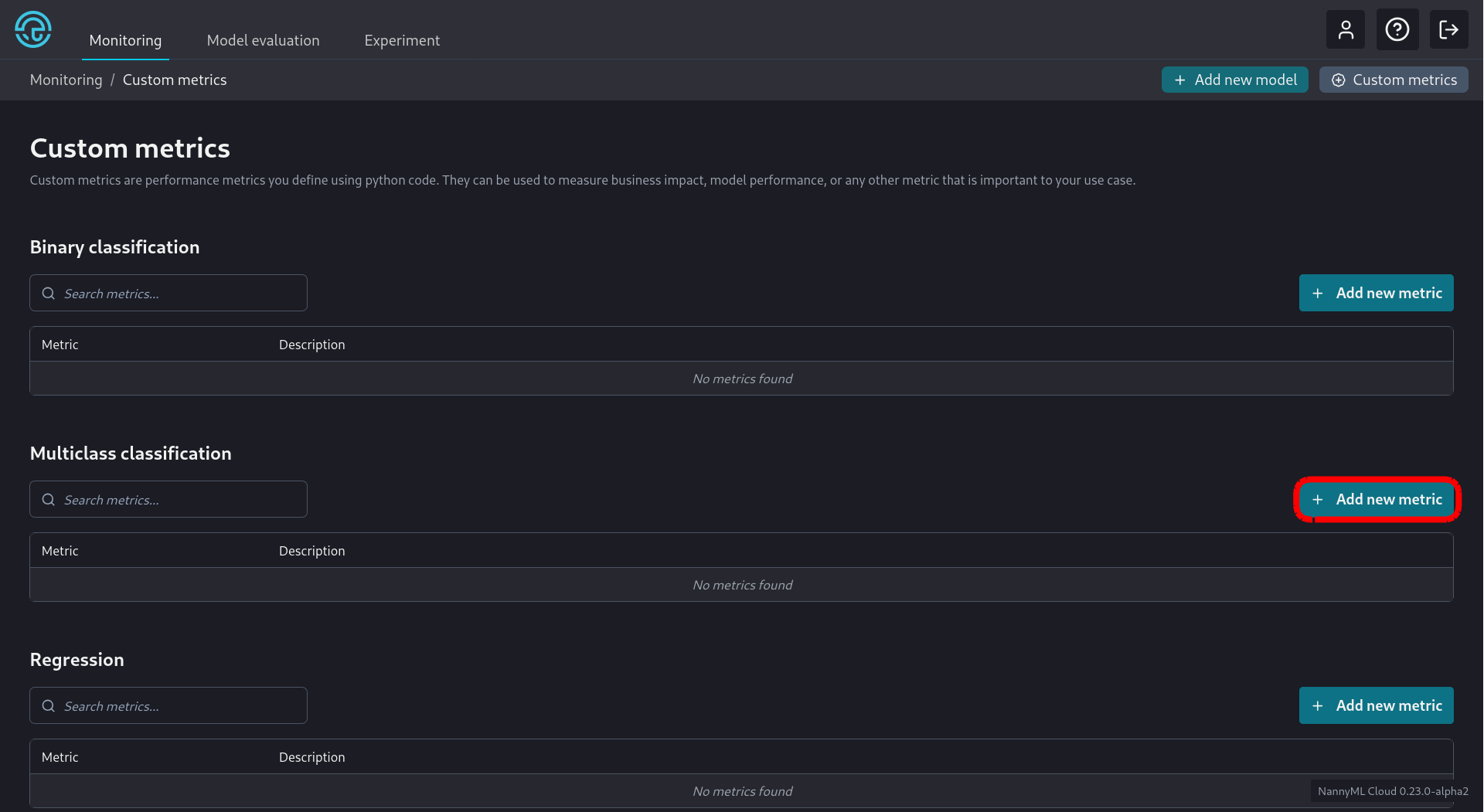

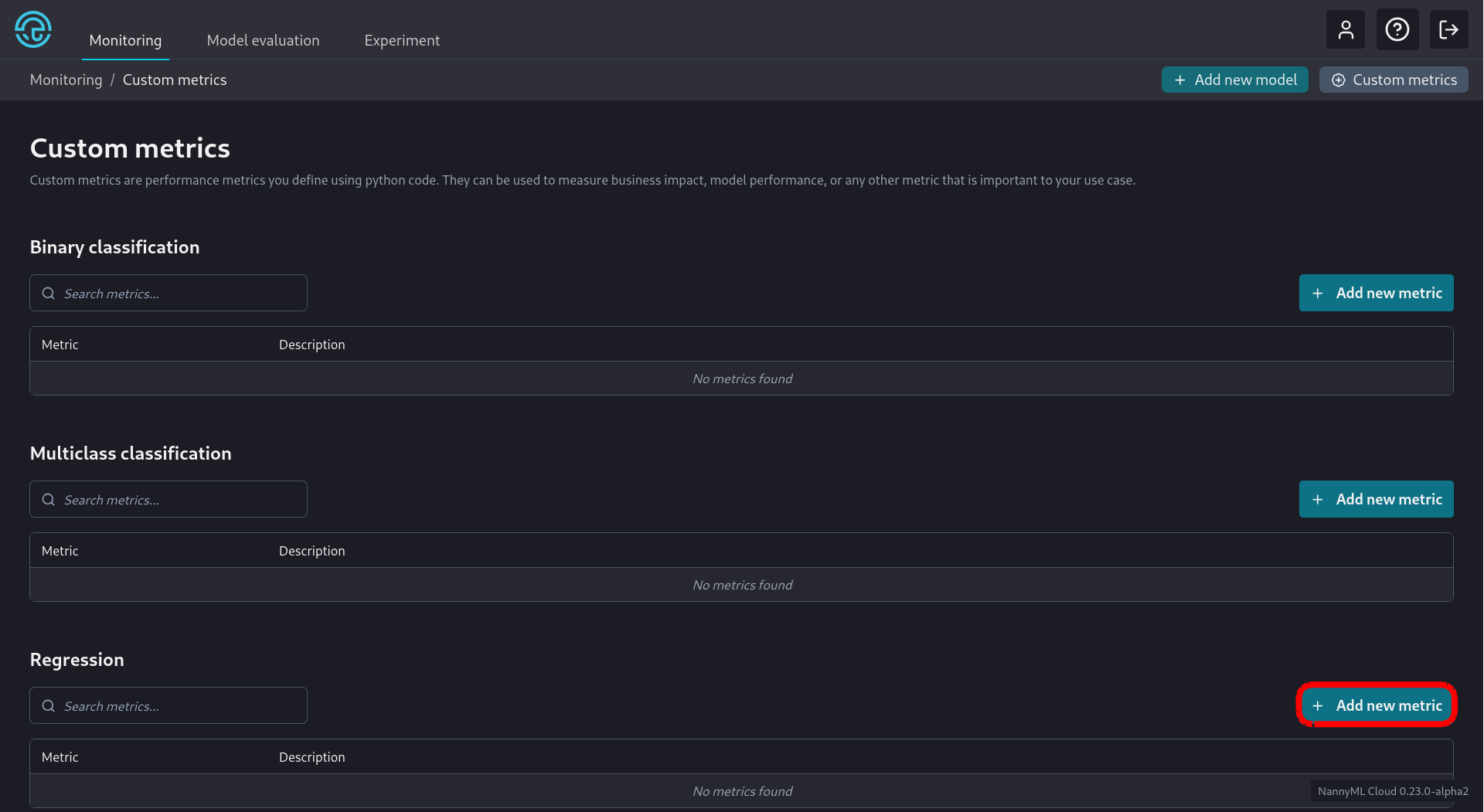

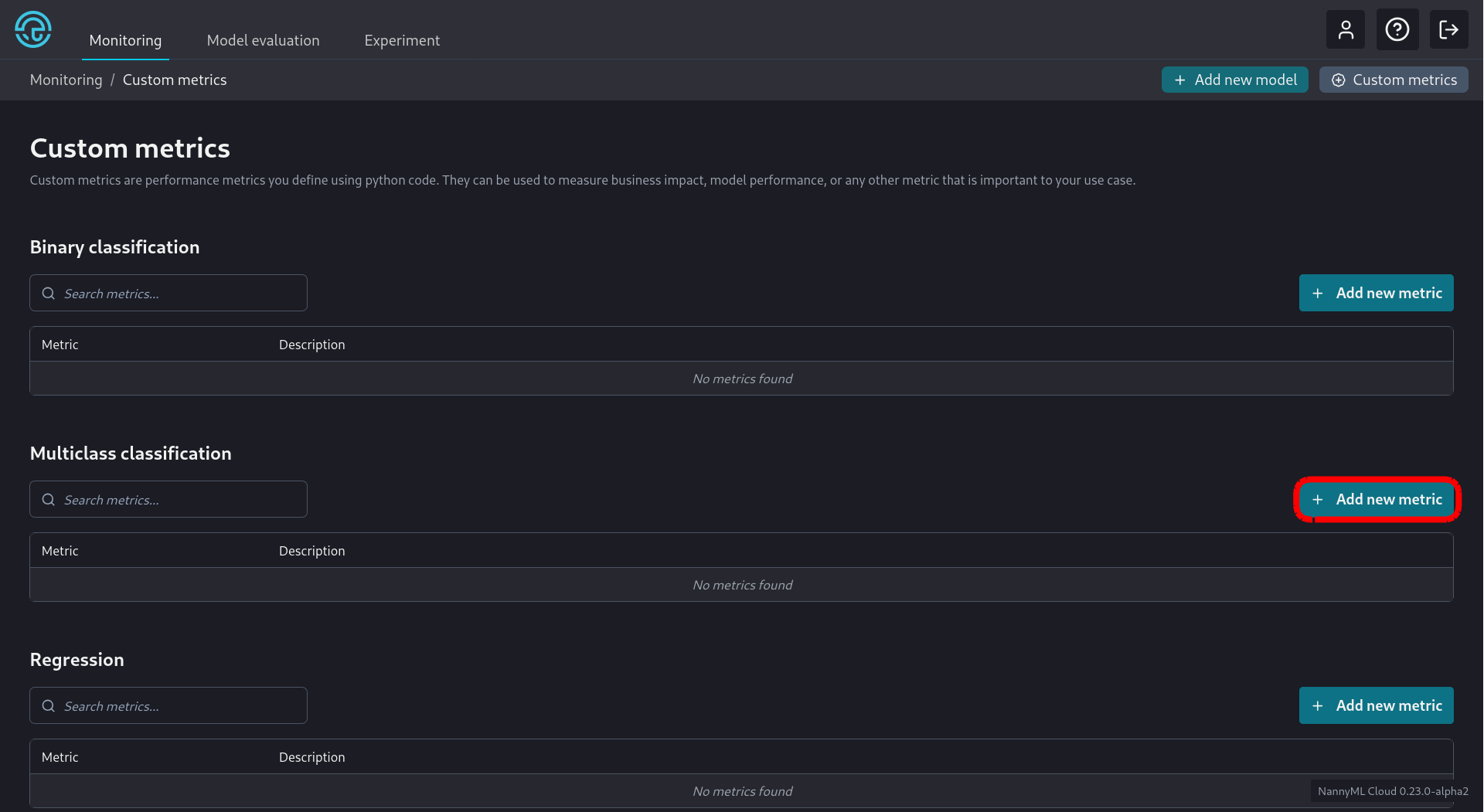

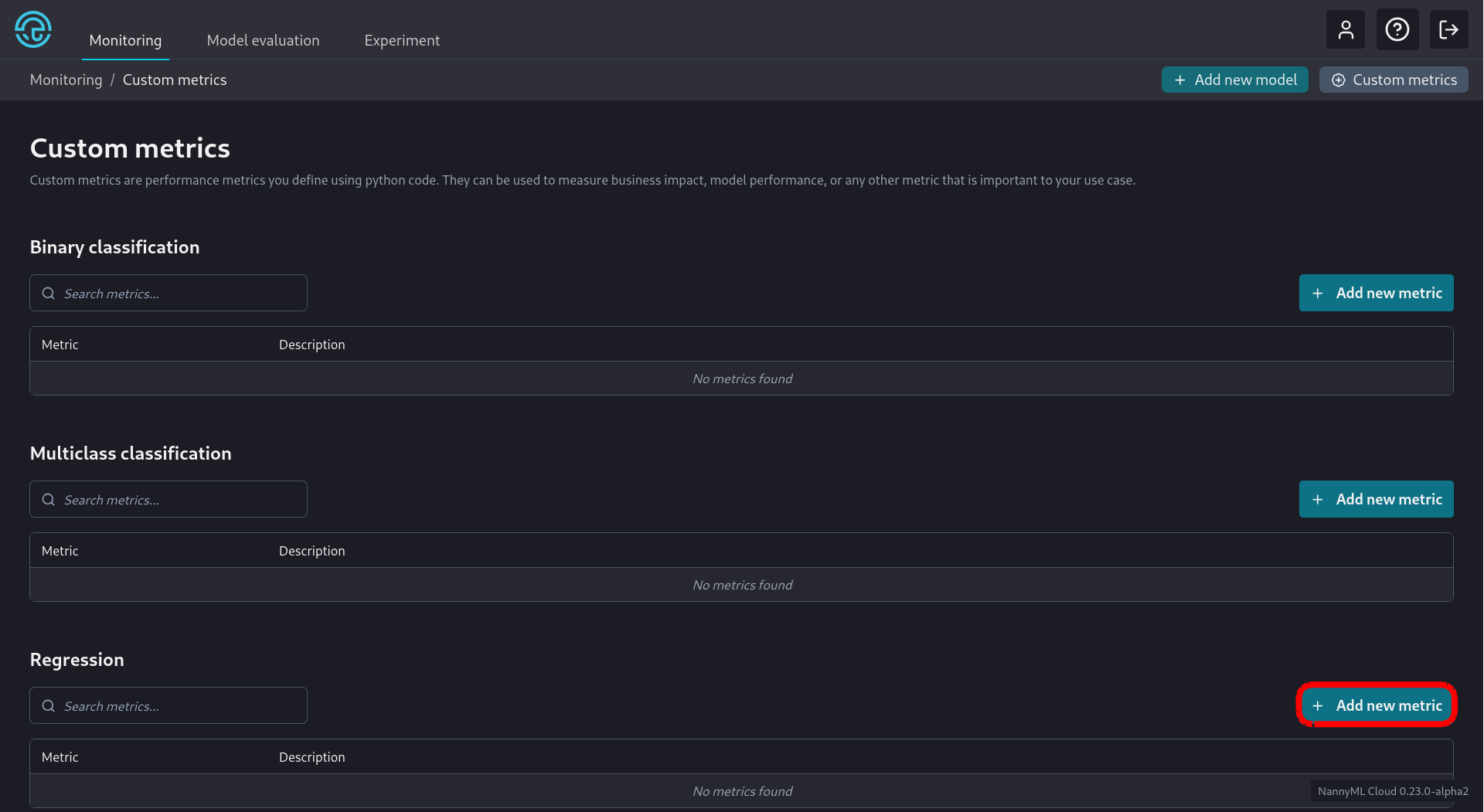

Custom Metrics

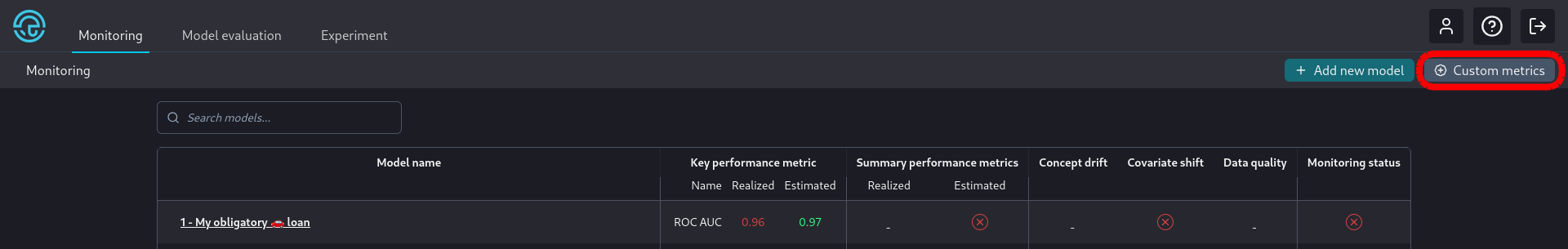

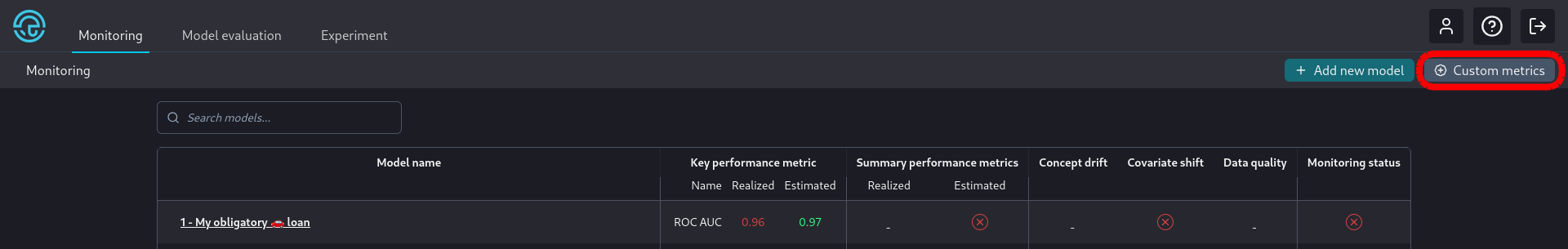

Monitoring Models with Custom Metrics

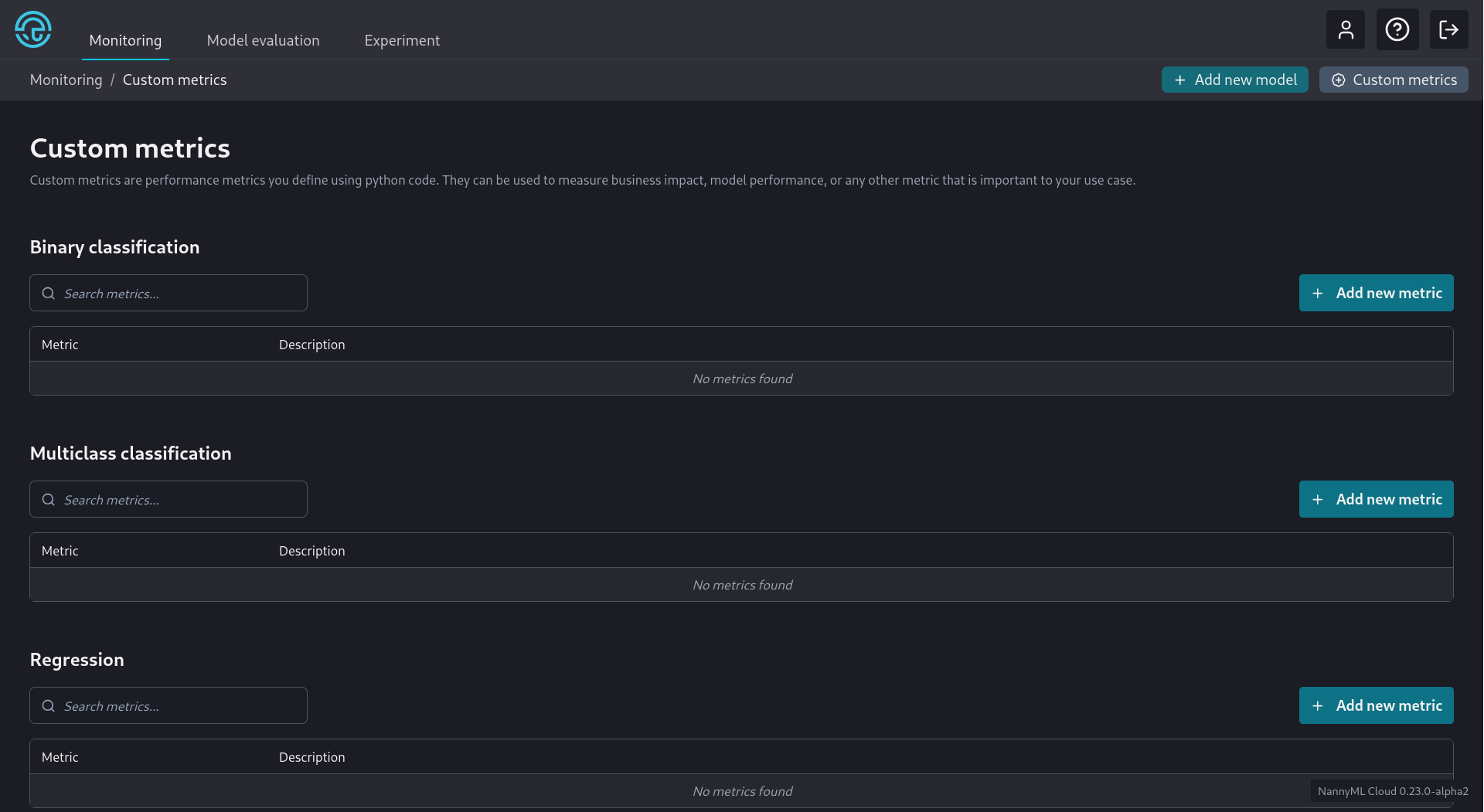

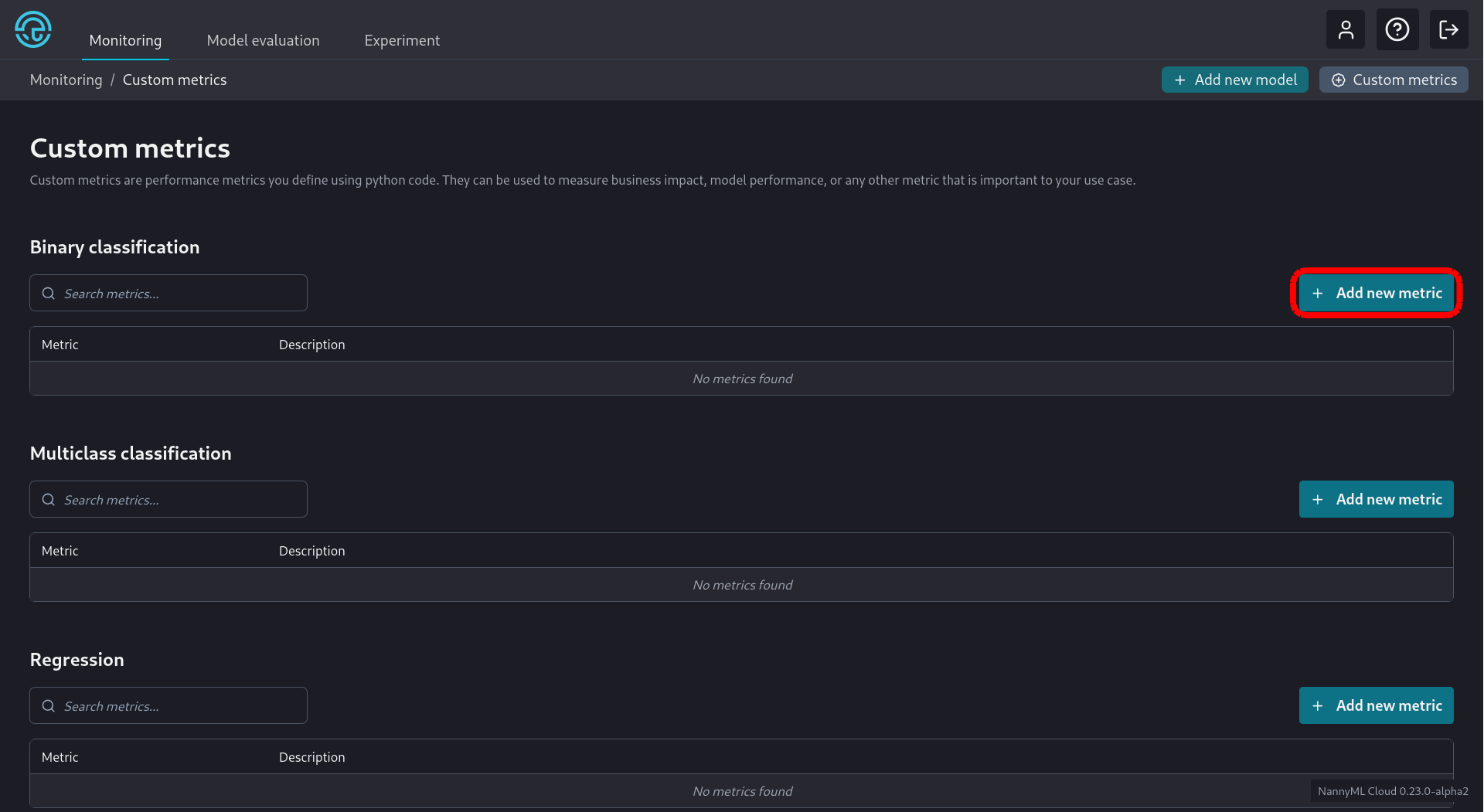

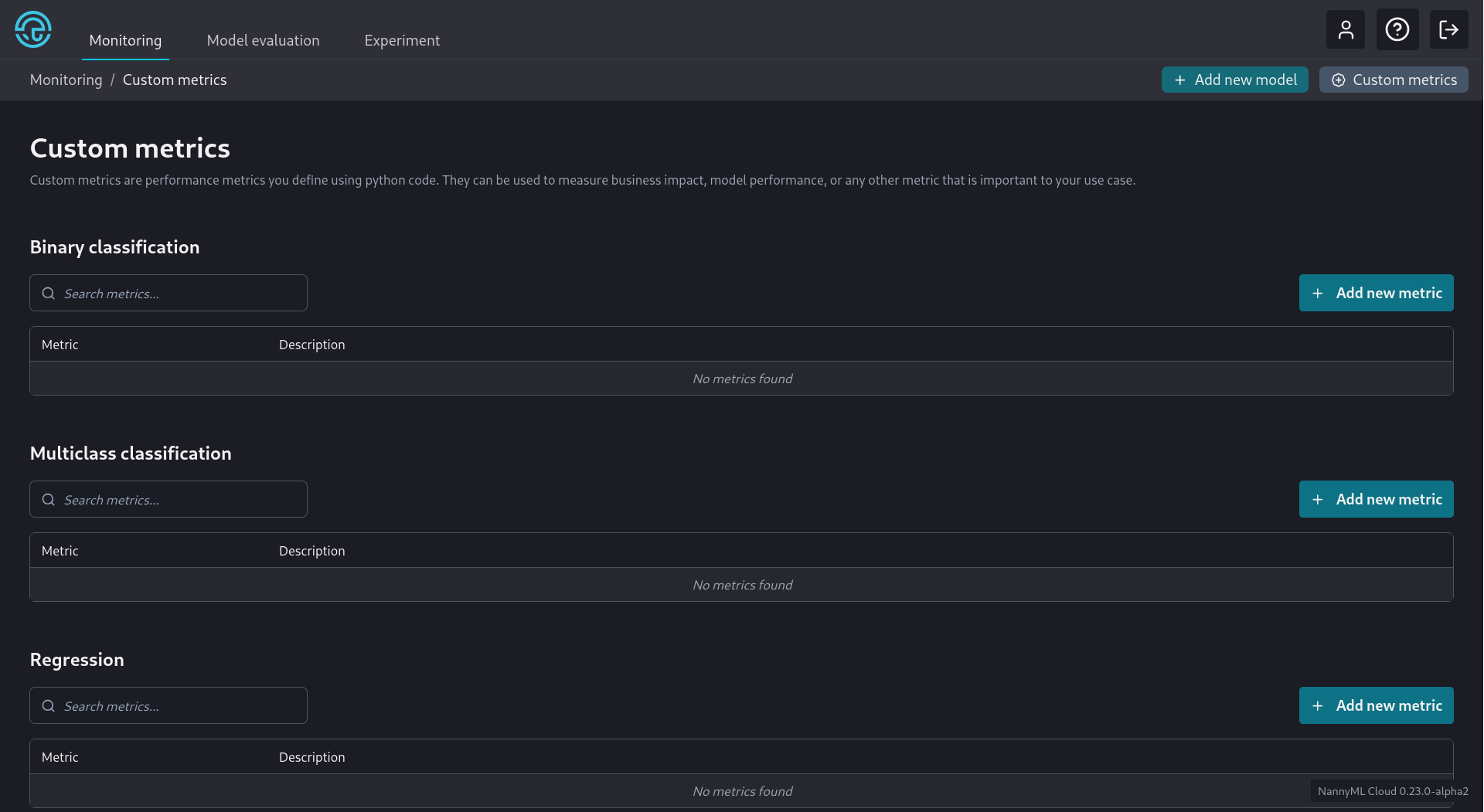

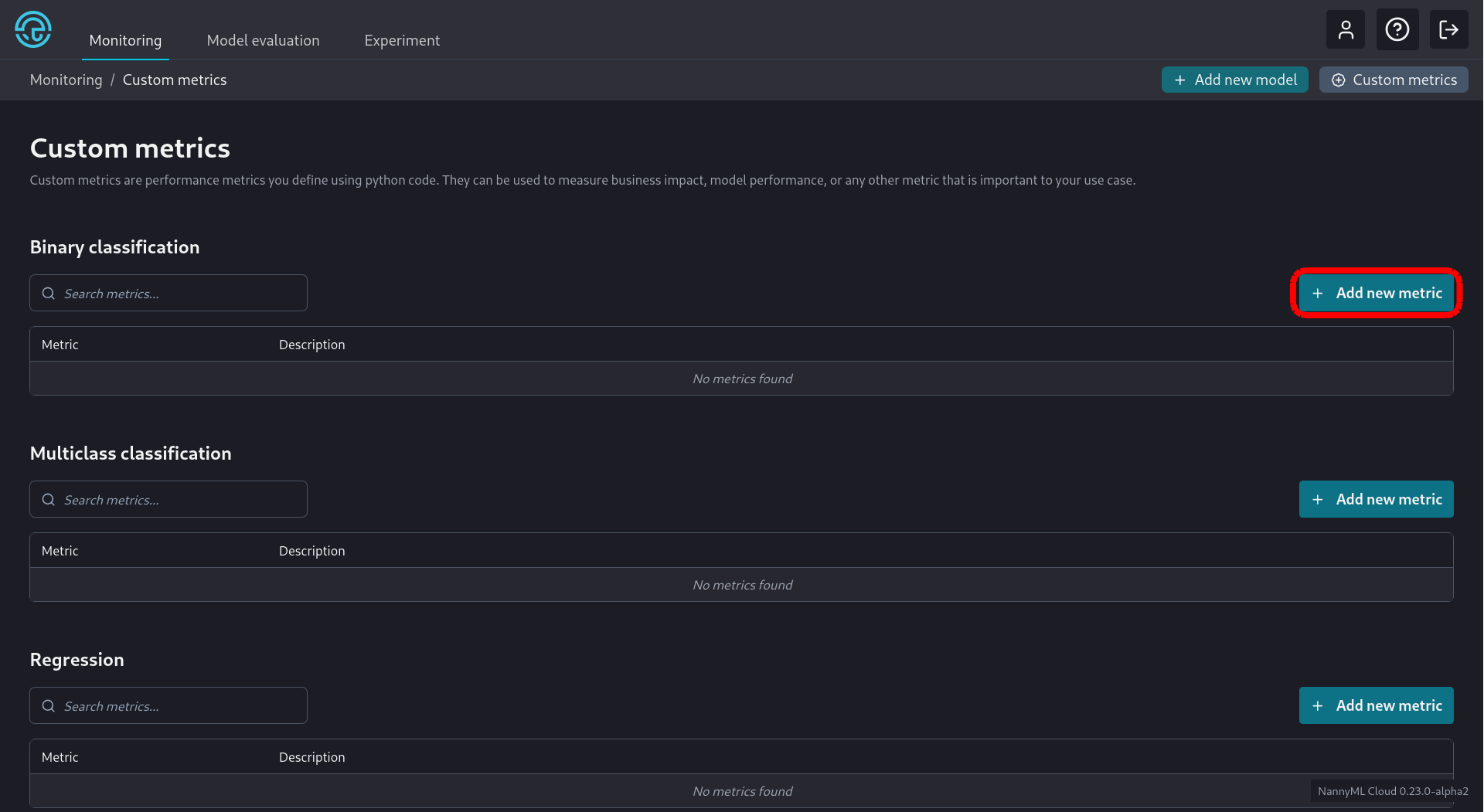

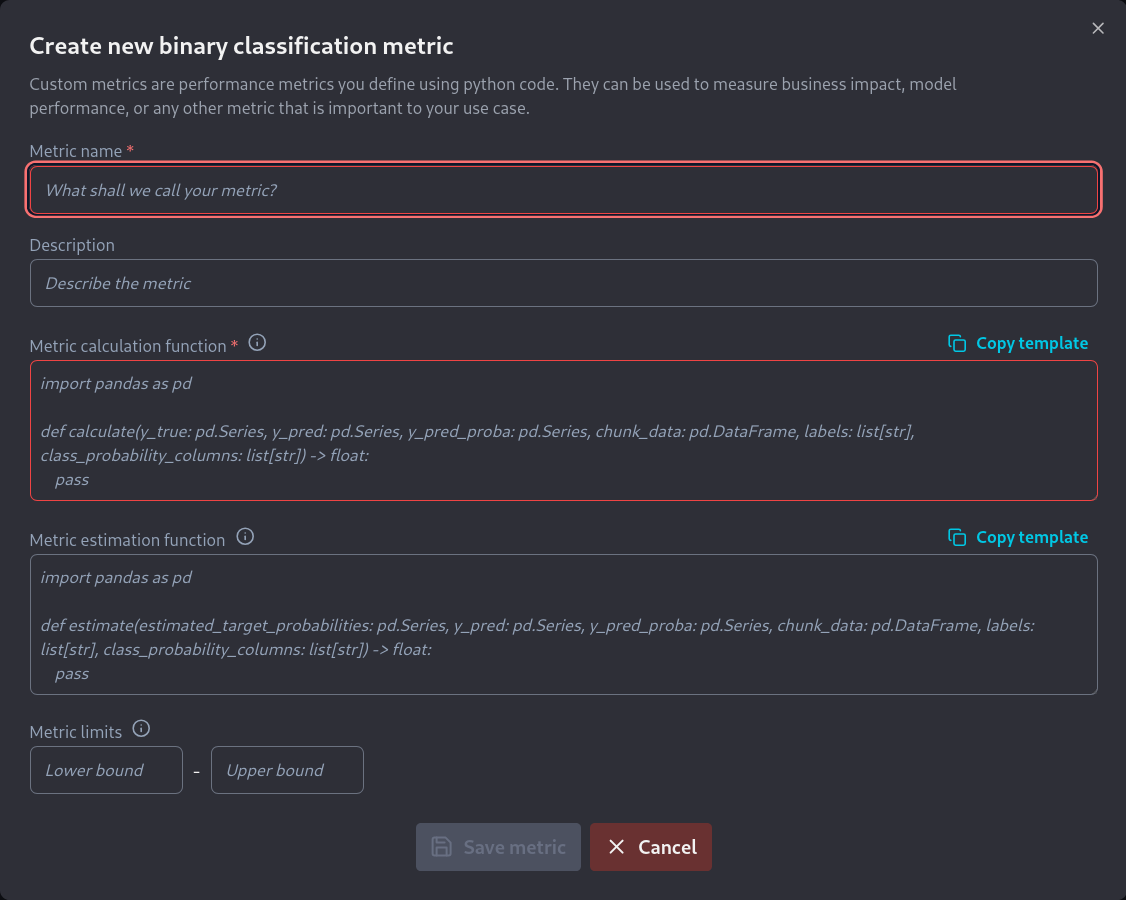


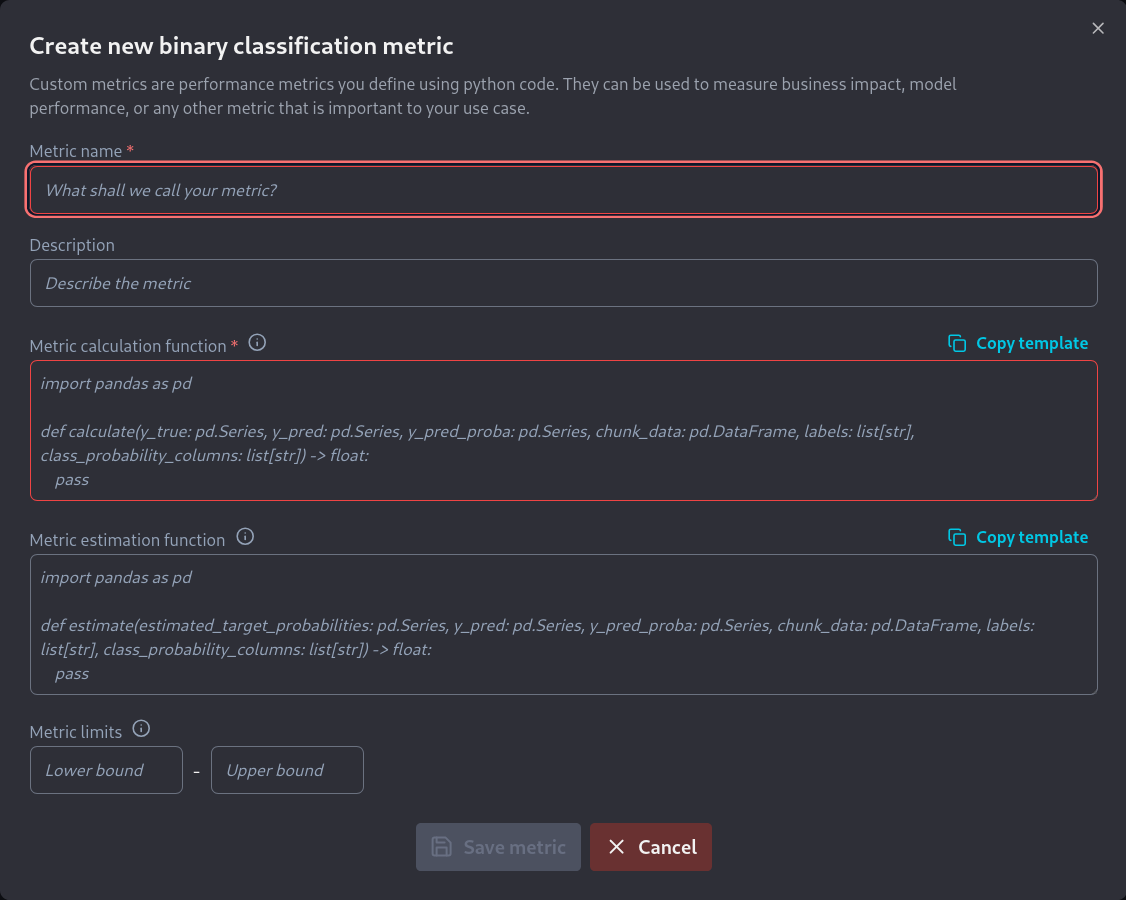

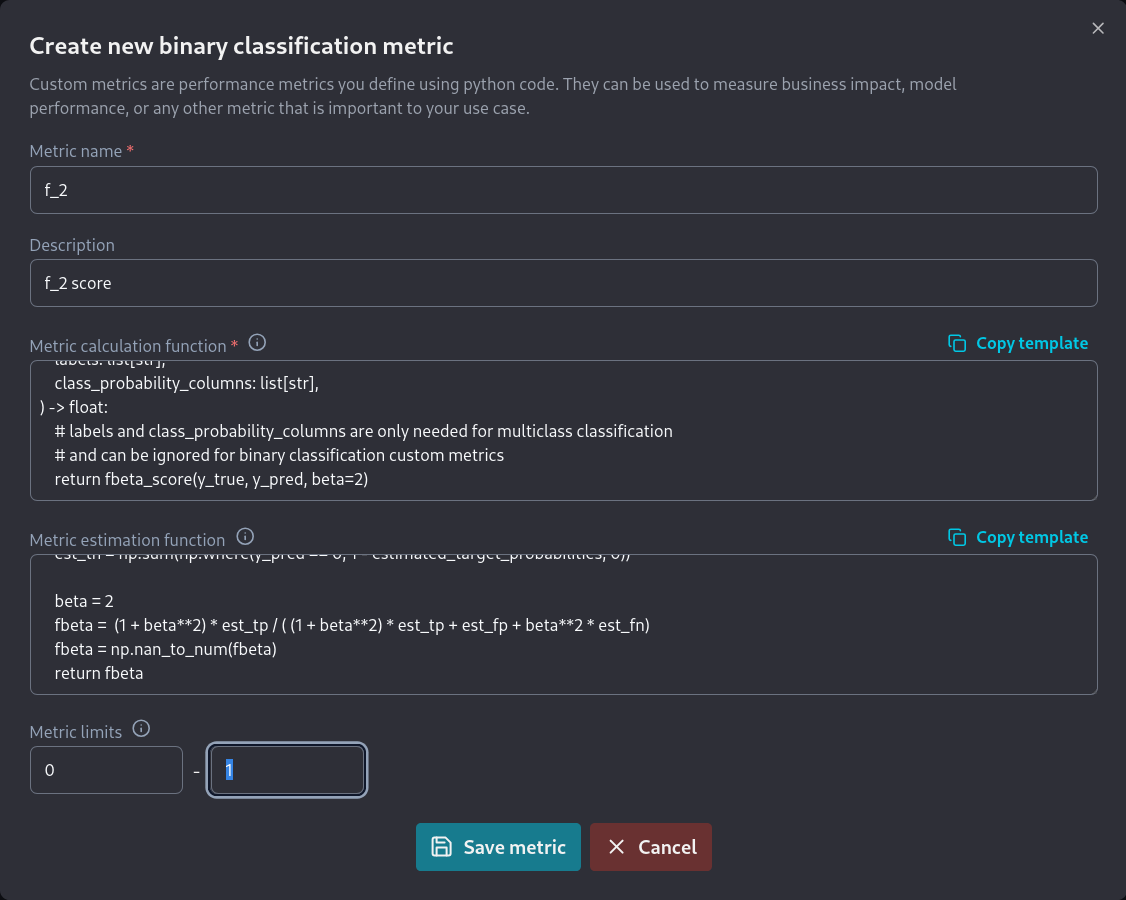

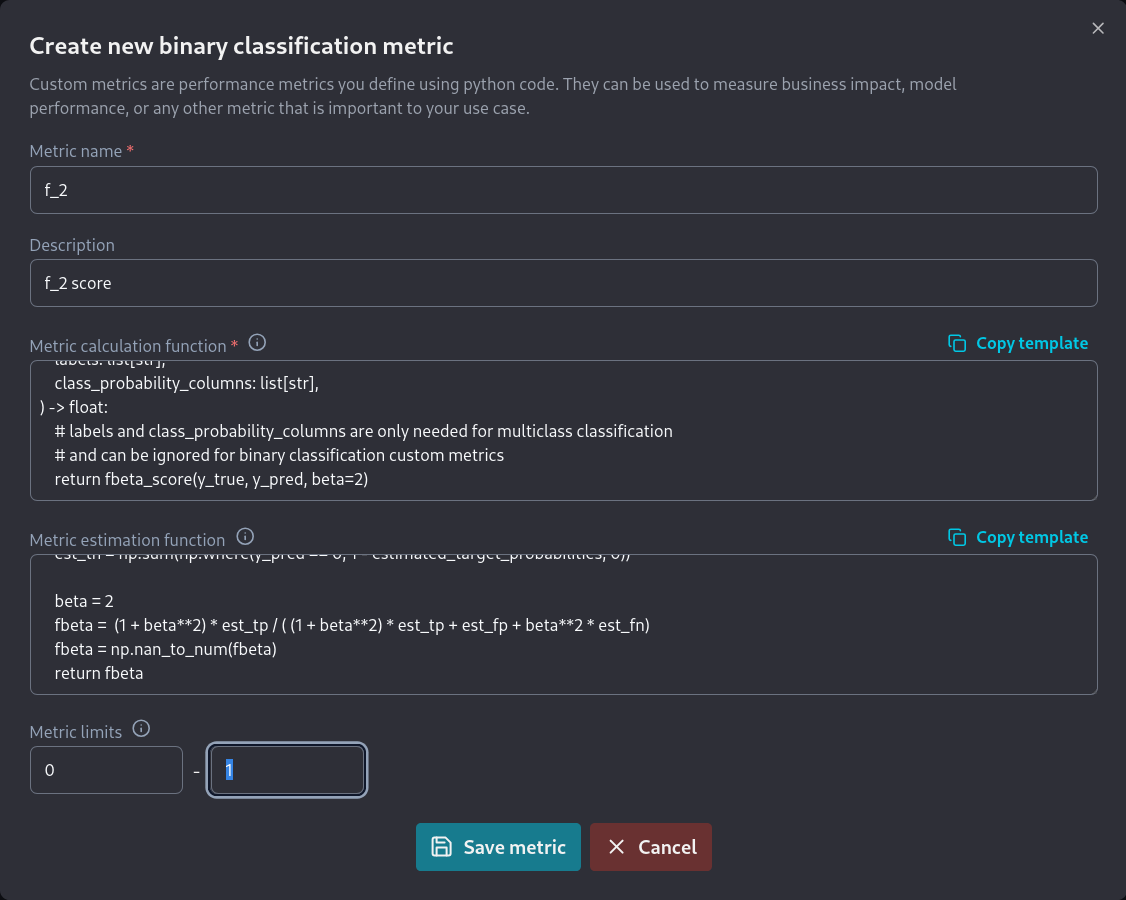

import numpy as np
import pandas as pd
from sklearn.metrics import fbeta_score


def calculate(
    y_true: pd.Series,
    y_pred: pd.Series,
    y_pred_proba: pd.DataFrame,
    chunk_data: pd.DataFrame,
    labels: list[str],
    class_probability_columns: list[str],
    **kwargs
) -> float:
    # labels and class_probability_columns are only needed for multiclass classification
    # and can be ignored for binary classification custom metrics
    return fbeta_score(y_true, y_pred, beta=2)

import pandas as pd

def estimate(

estimated_target_probabilities: pd.DataFrame,

y_pred: pd.Series,

y_pred_proba: pd.DataFrame,

chunk_data: pd.DataFrame,

labels: list[str],

class_probability_columns: list[str],

**kwargs

) -> float:

# labels and class_probability_columns are only needed for multiclass classification

# and can be ignored for binary classification custom metrics

estimated_target_probabilities = estimated_target_probabilities.to_numpy().ravel()

y_pred = y_pred.to_numpy()

# Create estimated confusion matrix elements

est_tp = np.sum(np.where(y_pred == 1, estimated_target_probabilities, 0))

est_fp = np.sum(np.where(y_pred == 1, 1 - estimated_target_probabilities, 0))

est_fn = np.sum(np.where(y_pred == 0, estimated_target_probabilities, 0))

est_tn = np.sum(np.where(y_pred == 0, 1 - estimated_target_probabilities, 0))

beta = 2

fbeta = (1 + beta**2) * est_tp / ( (1 + beta**2) * est_tp + est_fp + beta**2 * est_fn)

fbeta = np.nan_to_num(fbeta)

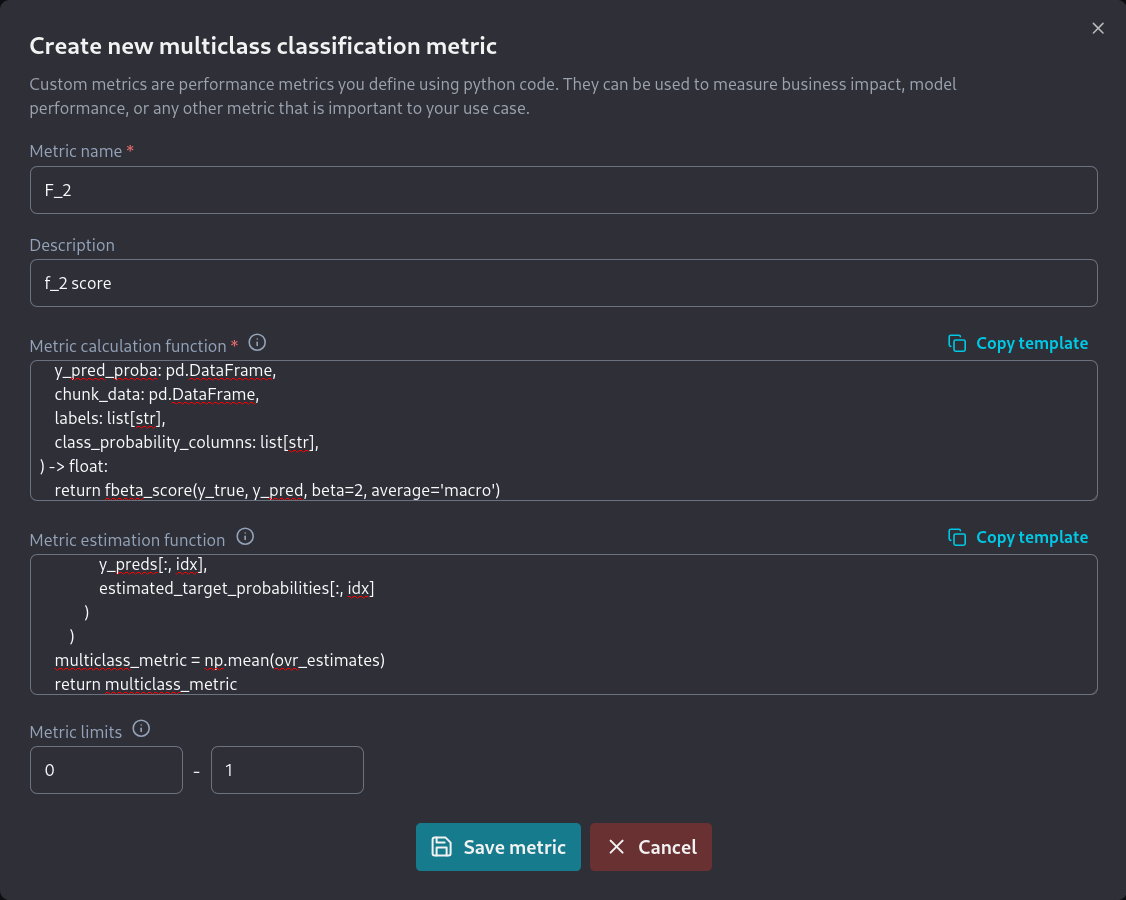

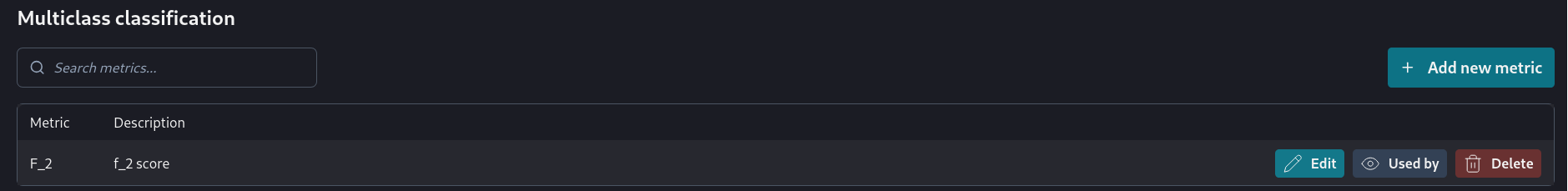

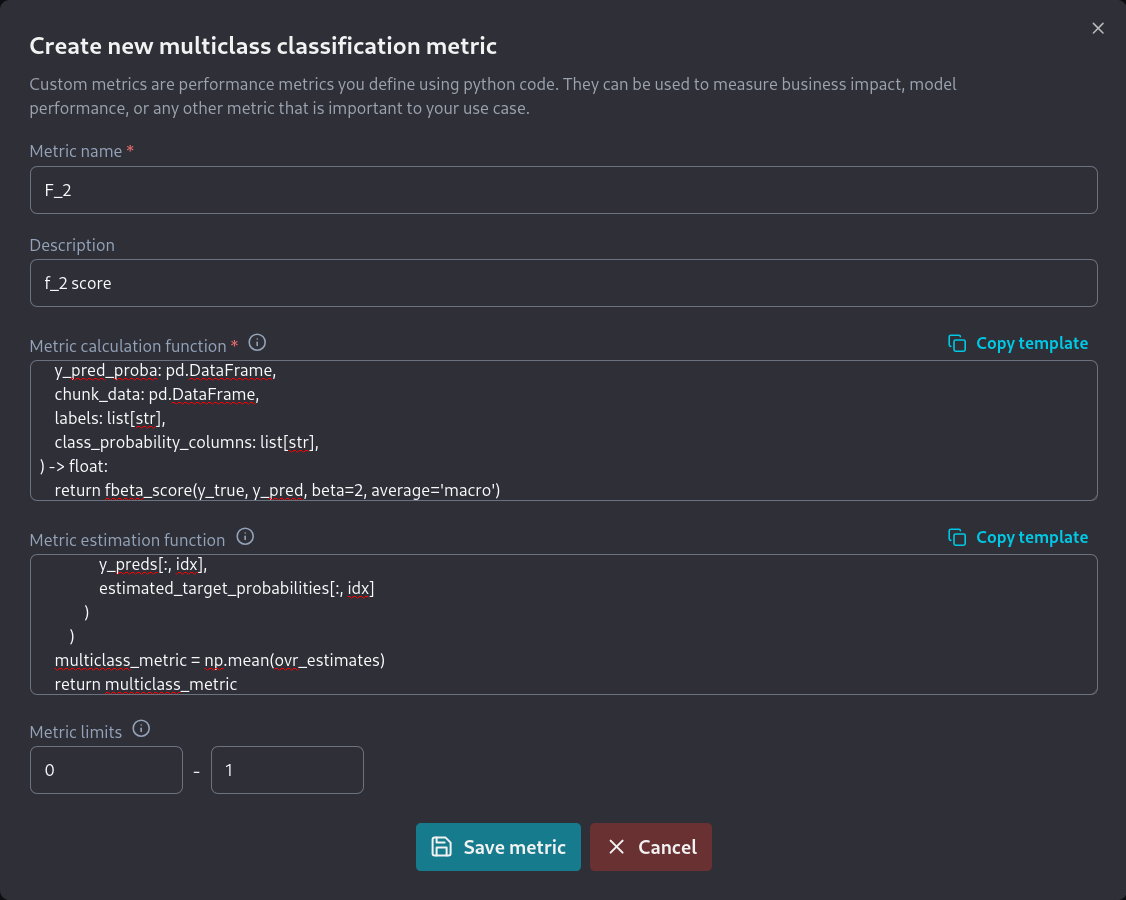

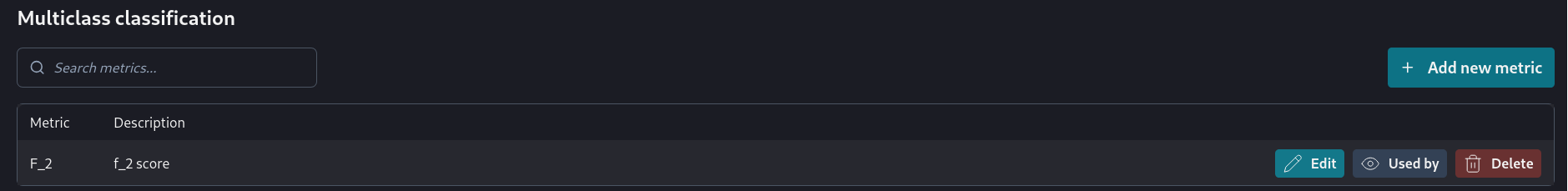

return fbetaimport pandas as pd
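To make the estimated confusion-matrix arithmetic in the binary `estimate` function concrete, here is a minimal sketch on four hand-made rows. The helper name `estimated_fbeta`, the variable `p_positive`, and the toy values are assumptions made for illustration only; the sketch also swaps `np.nan_to_num` for an explicit zero-denominator check.

```python
import numpy as np


def estimated_fbeta(y_pred: np.ndarray, p_positive: np.ndarray, beta: float = 2.0) -> float:
    """Expected F-beta from predicted labels and estimated P(target == 1)."""
    # Each row contributes fractionally to the confusion-matrix cells.
    est_tp = np.sum(np.where(y_pred == 1, p_positive, 0.0))
    est_fp = np.sum(np.where(y_pred == 1, 1.0 - p_positive, 0.0))
    est_fn = np.sum(np.where(y_pred == 0, p_positive, 0.0))
    denominator = (1 + beta**2) * est_tp + est_fp + beta**2 * est_fn
    # Hypothetical guard: return 0 when there is nothing to score.
    if denominator <= 0:
        return 0.0
    return float((1 + beta**2) * est_tp / denominator)


# Toy data (illustrative, not from the source)
y_pred = np.array([1, 1, 0, 0])
p_positive = np.array([0.9, 0.6, 0.2, 0.1])

# est_tp = 0.9 + 0.6 = 1.5, est_fp = 0.1 + 0.4 = 0.5, est_fn = 0.2 + 0.1 = 0.3
# F2 = 5 * 1.5 / (5 * 1.5 + 0.5 + 4 * 0.3) = 7.5 / 9.2
f2 = estimated_fbeta(y_pred, p_positive, beta=2.0)
print(round(f2, 4))
```

With `beta=2`, the false negatives are weighted four times as heavily as the false positives, which is why the two low-probability rows predicted as 0 still pull the score down noticeably.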

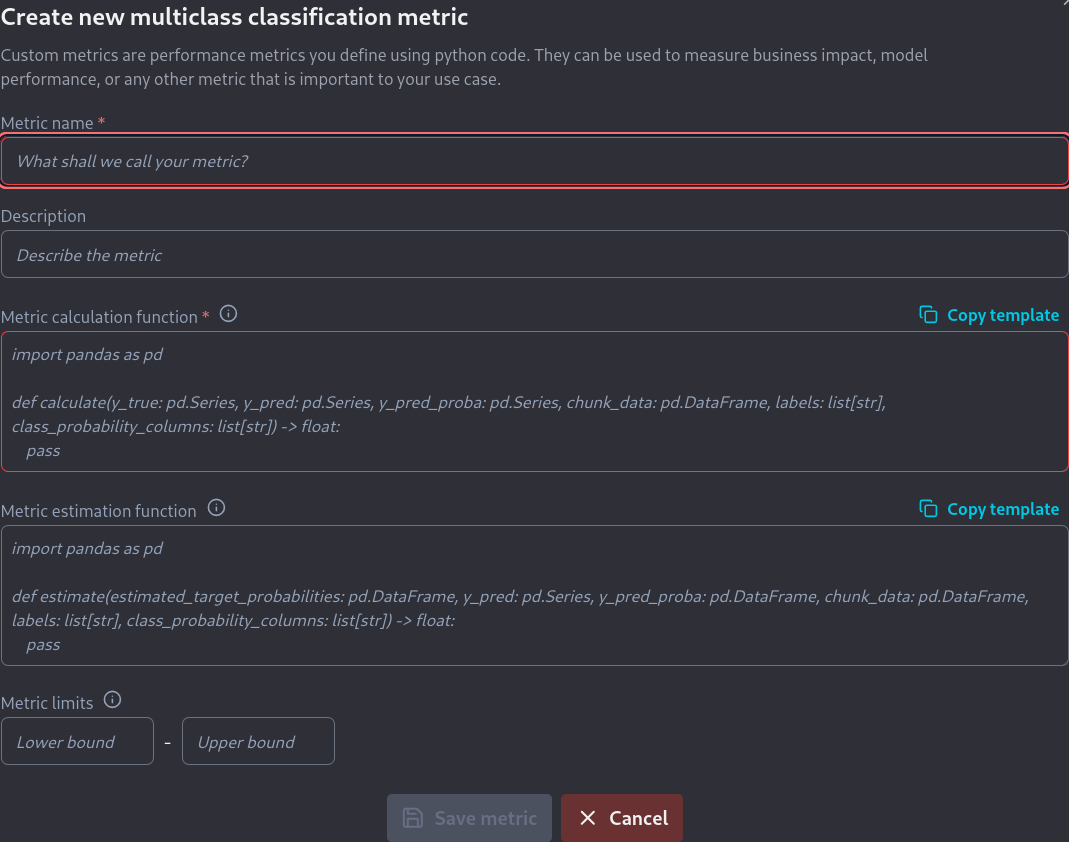

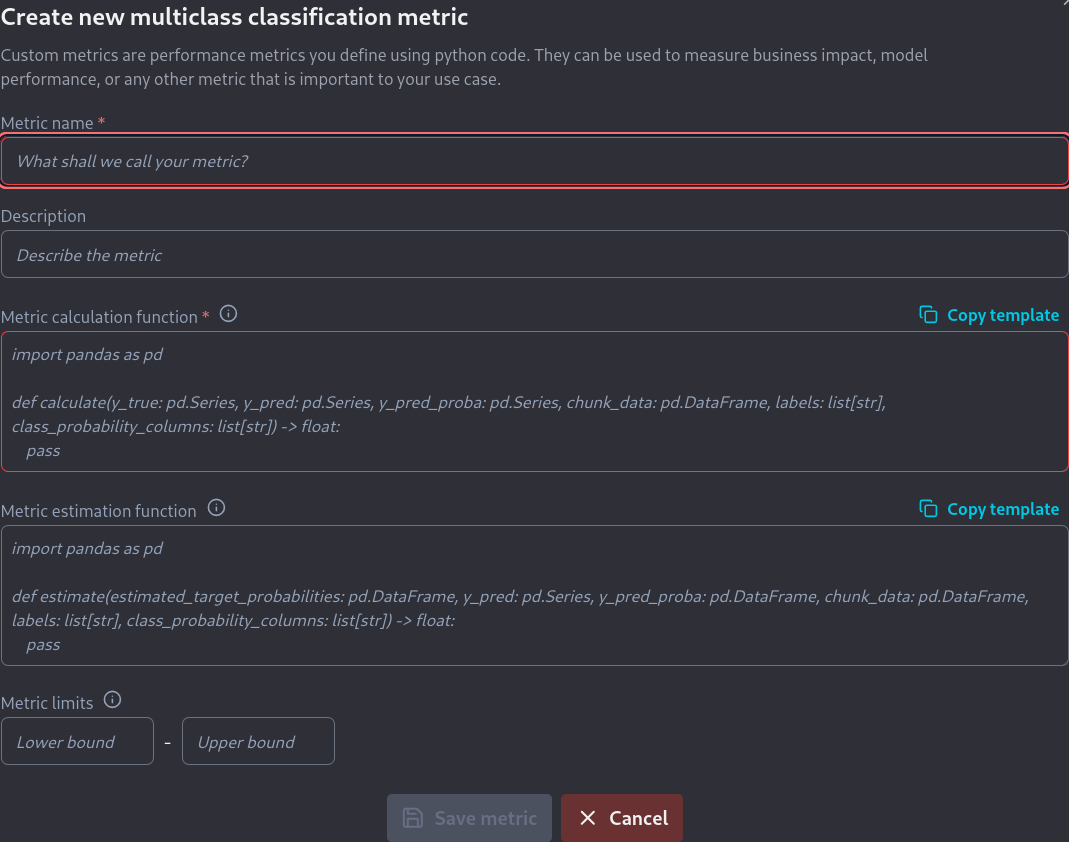

from sklearn.metrics import fbeta_score

def calculate(

y_true: pd.Series,

y_pred: pd.Series,

y_pred_proba: pd.DataFrame,

chunk_data: pd.DataFrame,

labels: list[str],

class_probability_columns: list[str],

) -> float:

return fbeta_score(y_true, y_pred, beta=2, average='macro')import numpy as np

import pandas as pd

from sklearn.preprocessing import label_binarize

def estimate(

estimated_target_probabilities: pd.DataFrame,

y_pred: pd.Series,

y_pred_proba: pd.DataFrame,

chunk_data: pd.DataFrame,

labels: list[str],

class_probability_columns: list[str],

):

beta = 2

def estimate_fb(_y_pred, _y_pred_proba, beta) -> float:

# Estimates the Fb metric.

#

# Parameters

# ----------

# y_pred: np.ndarray

# Predicted class label of the sample

# y_pred_proba: np.ndarray

# Probability estimates of the sample for each class in the model.

# beta: float

# beta parameter

#

# Returns

# -------

# metric: float

# Estimated Fb score.

est_tp = np.sum(np.where(_y_pred == 1, _y_pred_proba, 0))

est_fp = np.sum(np.where(_y_pred == 1, 1 - _y_pred_proba, 0))

est_fn = np.sum(np.where(_y_pred == 0, _y_pred_proba, 0))

est_tn = np.sum(np.where(_y_pred == 0, 1 - _y_pred_proba, 0))

fbeta = (1 + beta**2) * est_tp / ( (1 + beta**2) * est_tp + est_fp + beta**2 * est_fn)

fbeta = np.nan_to_num(fbeta)

return fbeta

estimated_target_probabilities = estimated_target_probabilities.to_numpy()

y_preds = label_binarize(y_pred, classes=labels)

ovr_estimates = []

for idx, _ in enumerate(labels):

ovr_estimates.append(

estimate_fb(

y_preds[:, idx],

estimated_target_probabilities[:, idx],

beta=2

)

)

multiclass_metric = np.mean(ovr_estimates)

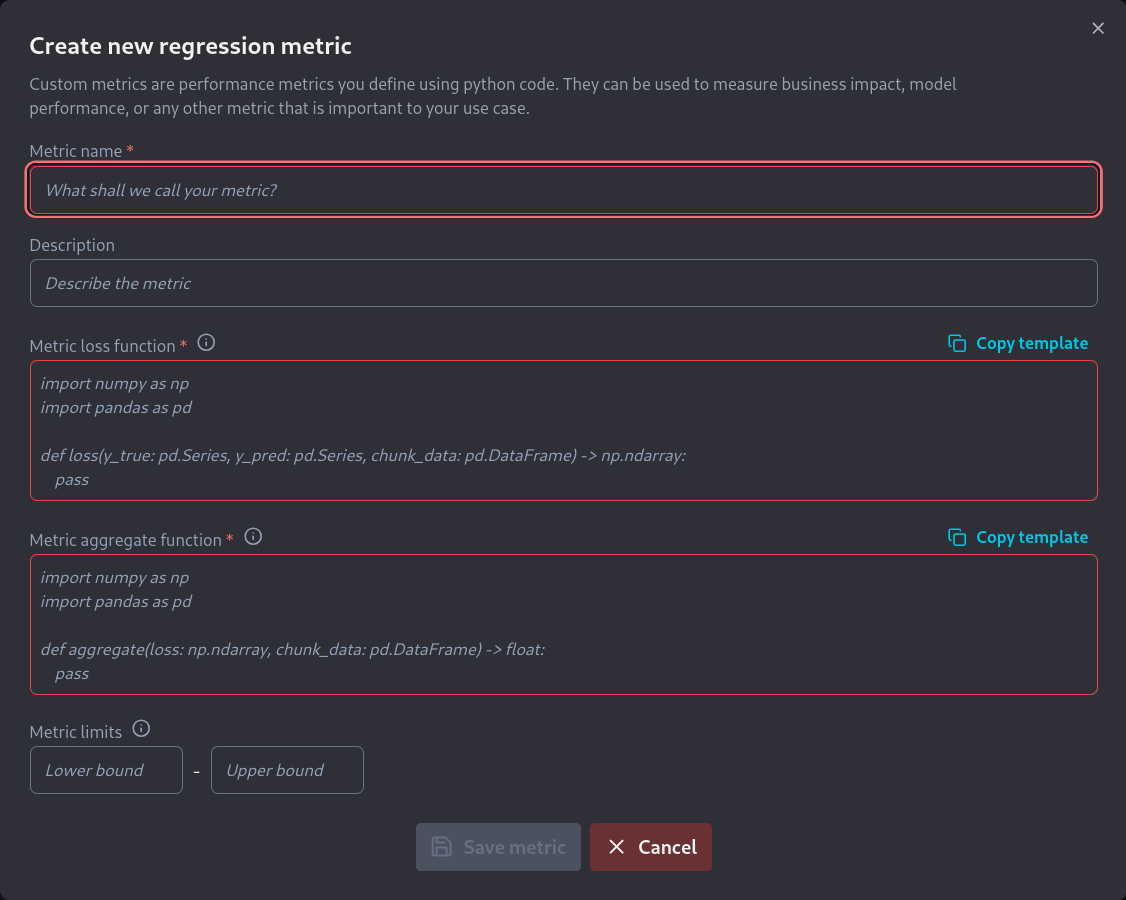

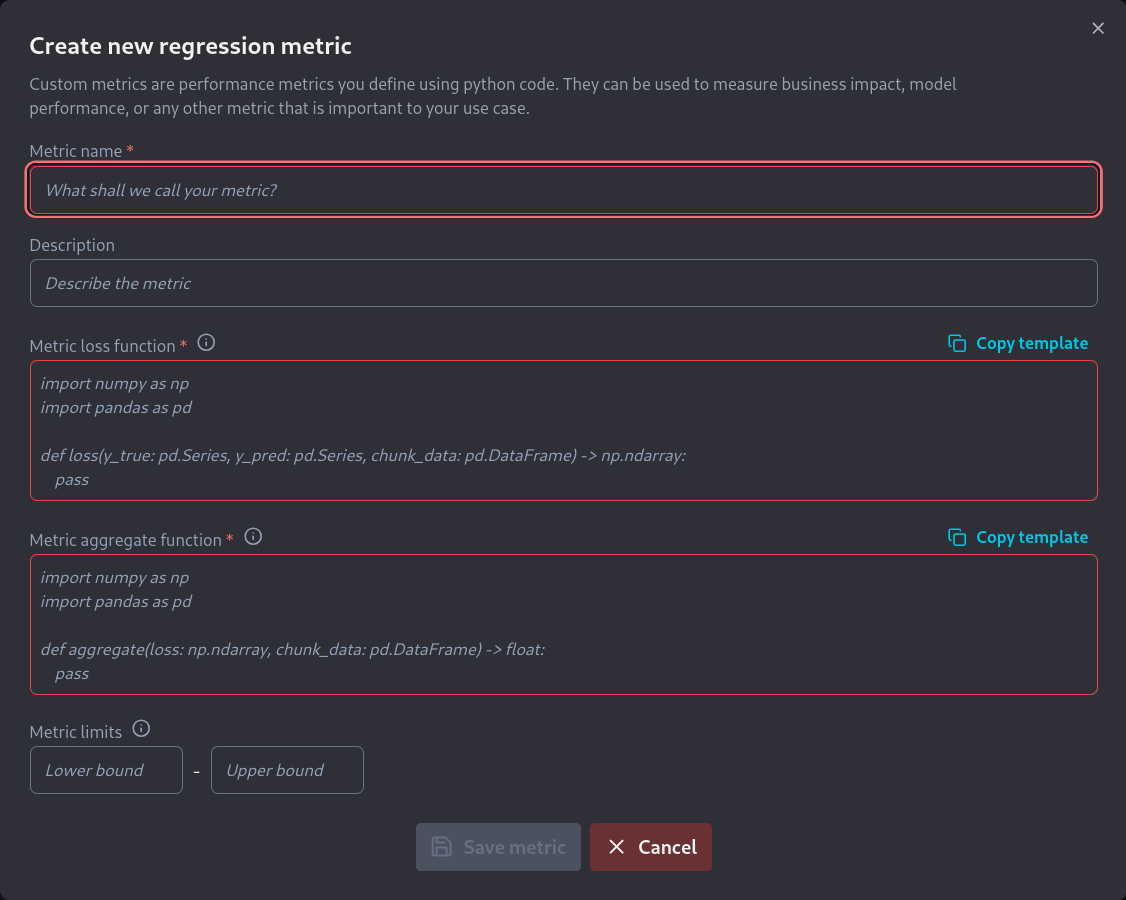

return multiclass_metricimport numpy as np
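The one-vs-rest averaging used in the multiclass `estimate` function can be traced on a small example. The sketch below is an illustration under assumptions: `estimated_macro_fbeta`, the three labels, and the probability rows are all made up, and the one-vs-rest mask is built with a plain NumPy comparison rather than `label_binarize` so the block needs only NumPy.

```python
import numpy as np


def estimated_macro_fbeta(y_pred, class_probabilities, labels, beta=2.0):
    """Macro-average of per-class (one-vs-rest) estimated F-beta scores."""
    y_pred = np.asarray(y_pred)
    class_probabilities = np.asarray(class_probabilities, dtype=float)
    scores = []
    for idx, label in enumerate(labels):
        is_predicted = y_pred == label         # one-vs-rest prediction for this class
        p_class = class_probabilities[:, idx]  # estimated P(target == label)
        est_tp = p_class[is_predicted].sum()
        est_fp = (1.0 - p_class[is_predicted]).sum()
        est_fn = p_class[~is_predicted].sum()
        denominator = (1 + beta**2) * est_tp + est_fp + beta**2 * est_fn
        # Hypothetical guard: score a class as 0 when its denominator is empty.
        scores.append((1 + beta**2) * est_tp / denominator if denominator > 0 else 0.0)
    return float(np.mean(scores))


# Toy data (illustrative, not from the source); columns follow the order of `labels`
labels = ["a", "b", "c"]
y_pred = ["a", "b", "c", "a"]
class_probabilities = [
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.2, 0.7],
    [0.6, 0.3, 0.1],
]

macro_f2 = estimated_macro_fbeta(y_pred, class_probabilities, labels)
print(macro_f2)
```

The column order of the probabilities must match the order of `labels`; a mismatch would silently pair each class with the wrong probability column.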

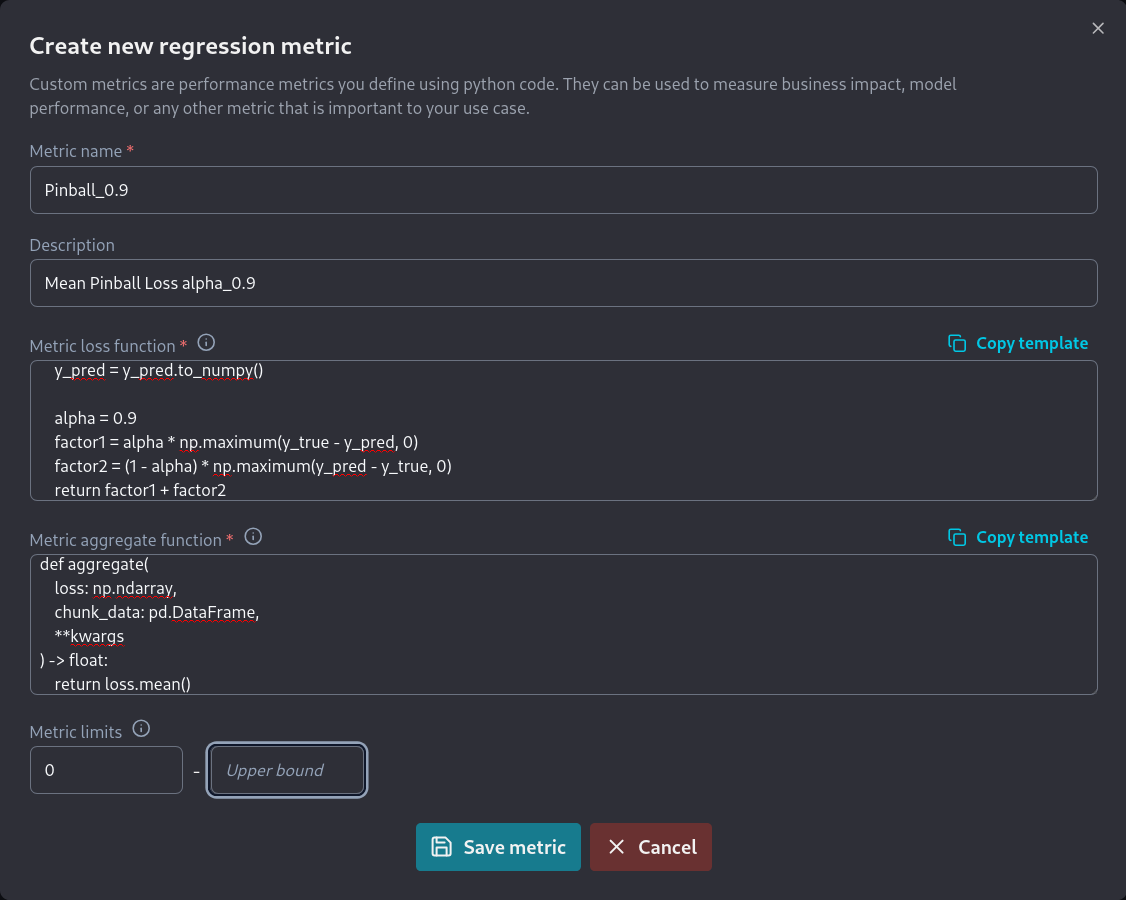

import pandas as pd

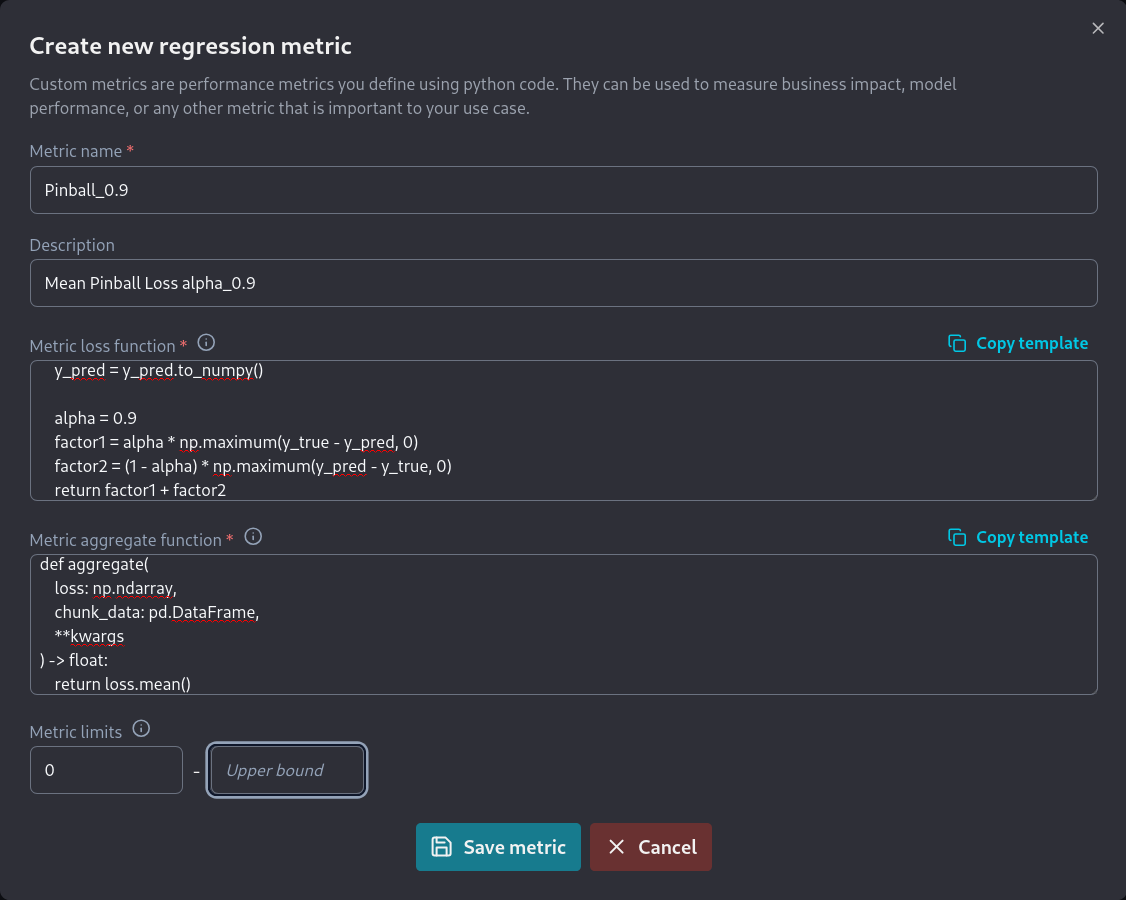

def loss(

y_true: pd.Series,

y_pred: pd.Series,

chunk_data: pd.DataFrame,

**kwargs

) -> np.ndarray:

y_true = y_true.to_numpy()

y_pred = y_pred.to_numpy()

alpha = 0.9

factor1 = alpha * np.maximum(y_true - y_pred, 0)

factor2 = (1 - alpha) * np.maximum(y_pred - y_true, 0)

return factor1 + factor2import numpy as np

import numpy as np
import pandas as pd


def aggregate(
    loss: np.ndarray,
    chunk_data: pd.DataFrame,
    **kwargs
) -> float:
    return loss.mean()
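The `loss` and `aggregate` pair has the form of a pinball (quantile) loss averaged per chunk. The following sketch, which assumes a hypothetical standalone helper `pinball_loss` and two made-up rows, shows how `alpha = 0.9` penalizes under-prediction far more than over-prediction of the same size.

```python
import numpy as np


def pinball_loss(y_true: np.ndarray, y_pred: np.ndarray, alpha: float = 0.9) -> np.ndarray:
    """Per-row pinball loss with the same two terms as the loss function above."""
    under = alpha * np.maximum(y_true - y_pred, 0)       # prediction too low
    over = (1 - alpha) * np.maximum(y_pred - y_true, 0)  # prediction too high
    return under + over


# Toy data (illustrative, not from the source): both predictions miss by 2
y_true = np.array([10.0, 10.0])
y_pred = np.array([8.0, 12.0])

per_row = pinball_loss(y_true, y_pred)  # approximately [1.8, 0.2]
chunk_value = per_row.mean()            # approximately 1.0, the aggregate step
print(per_row, chunk_value)
```

Splitting the metric into a per-row loss and a separate aggregation keeps the row-level values available, while the chunk-level number is just their mean.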