Quickstart

Get familiar with NannyML Cloud by monitoring a hotel booking cancellation prediction model.

Getting access

If you don't have access yet, NannyML Cloud is available on both the Azure and AWS marketplaces, and you can deploy it in two different ways, depending on your needs.

Managed Application: With the Managed Application, no data leaves your environment. This option provisions the NannyML Cloud components and the required infrastructure within your own Azure or AWS subscription. To learn more, check out the docs on how to set up NannyML Cloud on Azure and AWS.

Monitoring a classification model

If you prefer a video walkthrough, see our Quickstart guide on YouTube.

Hotel booking prediction model

The dataset comes from two hotels in Portugal and has 30 features describing each booking entry. To learn more about this data, check out the hotel booking demand dataset. After simple preprocessing, we trained a model to predict whether a booking would be canceled; it reached a ROC AUC of 0.87 on the test set.

To monitor the model in production, we created reference and analysis sets. NannyML uses the reference set to establish a baseline for model performance and shift detection. The test set is an ideal candidate to serve as a reference. The analysis set is the production data - NannyML checks if the model maintains performance or if there's a concept or covariate shift.

Note that analysis data is sometimes referred to as monitored data.
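As a rough illustration of the reference/analysis split described above, the sketch below partitions rows by timestamp. The row layout, the `split_by_cutoff` helper, and the sample rows are hypothetical; the 1 October 2016 cutoff follows the date ranges this guide gives for the hotel booking data.

```python
from datetime import datetime

def split_by_cutoff(rows, cutoff):
    """Split rows into (reference, analysis) by their 'timestamp' field.

    Rows strictly before the cutoff form the reference set (the baseline);
    rows at or after it form the analysis set (the monitored data).
    """
    reference = [row for row in rows if row["timestamp"] < cutoff]
    analysis = [row for row in rows if row["timestamp"] >= cutoff]
    return reference, analysis

# Hypothetical rows; real data would also carry features and model outputs.
rows = [
    {"id": 1, "timestamp": datetime(2016, 6, 3)},
    {"id": 2, "timestamp": datetime(2016, 9, 30)},
    {"id": 3, "timestamp": datetime(2016, 10, 1)},
    {"id": 4, "timestamp": datetime(2017, 2, 14)},
]
reference, analysis = split_by_cutoff(rows, cutoff=datetime(2016, 10, 1))
```

In practice the reference set should be data the model was not trained on, which is why the test set is a natural fit.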

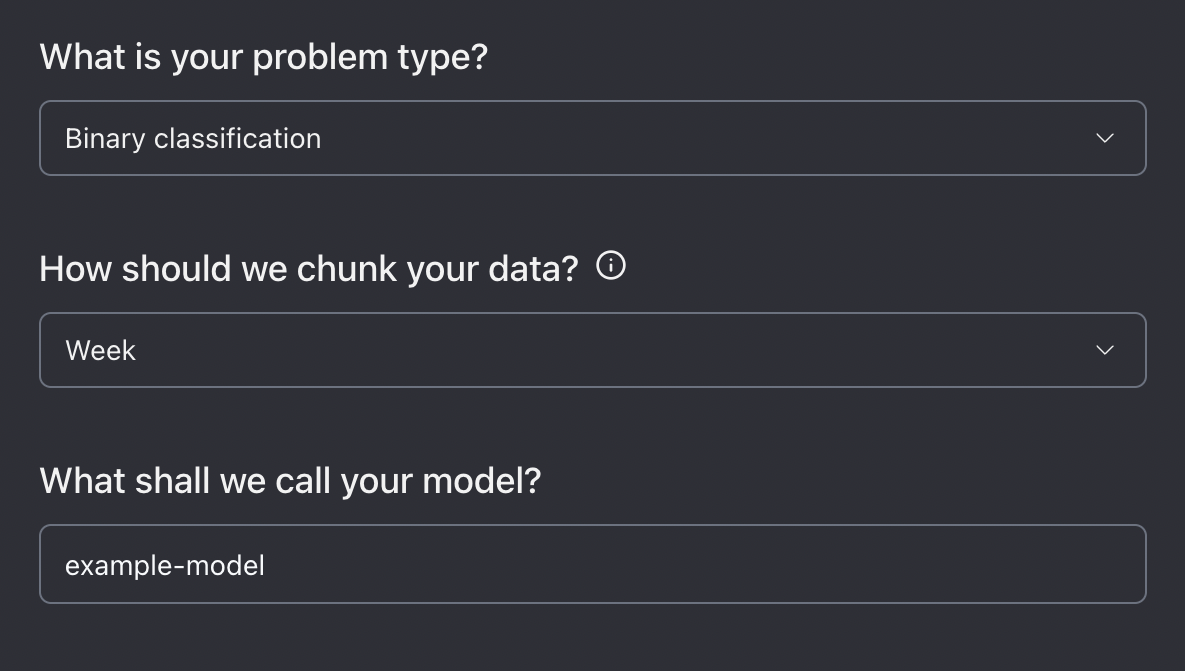

Let's add our model:

Click on the Add New Model button in the navigation bar.

Complete the new model setup configuration.

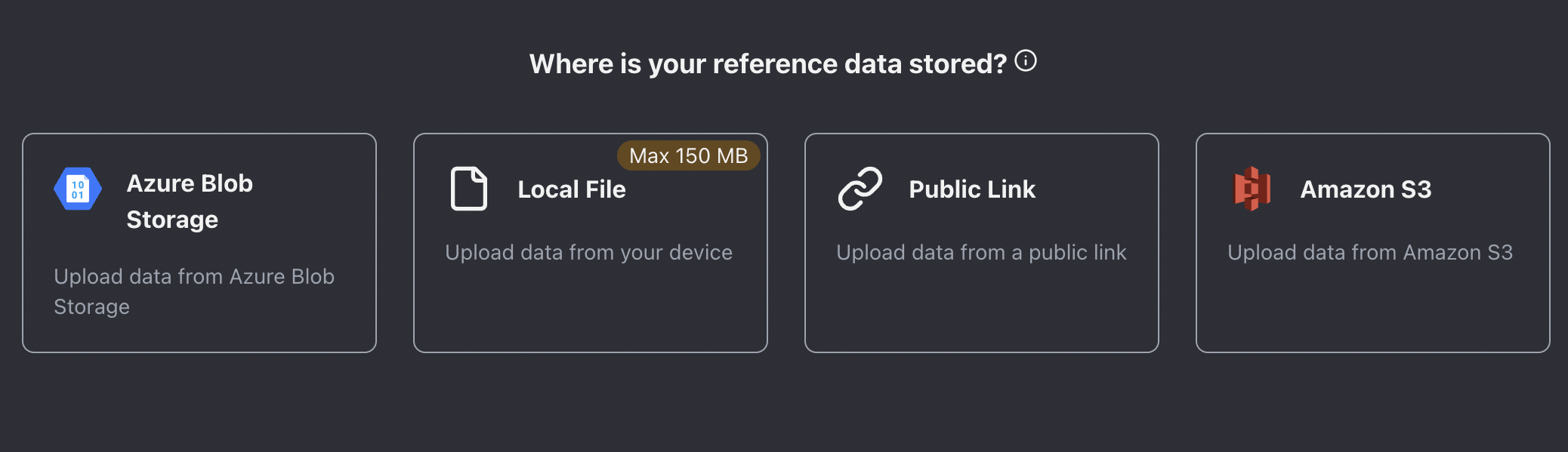

Upload the reference dataset by choosing one of the available upload methods (see image below). For this example, we will use the following links:

Reference dataset link -

https://raw.githubusercontent.com/NannyML/sample_datasets/main/hotel_booking_dataset/hotel_booking_reference_march.csv

Analysis dataset link -

https://raw.githubusercontent.com/NannyML/sample_datasets/main/hotel_booking_dataset/hotel_booking_analysis_march.csv

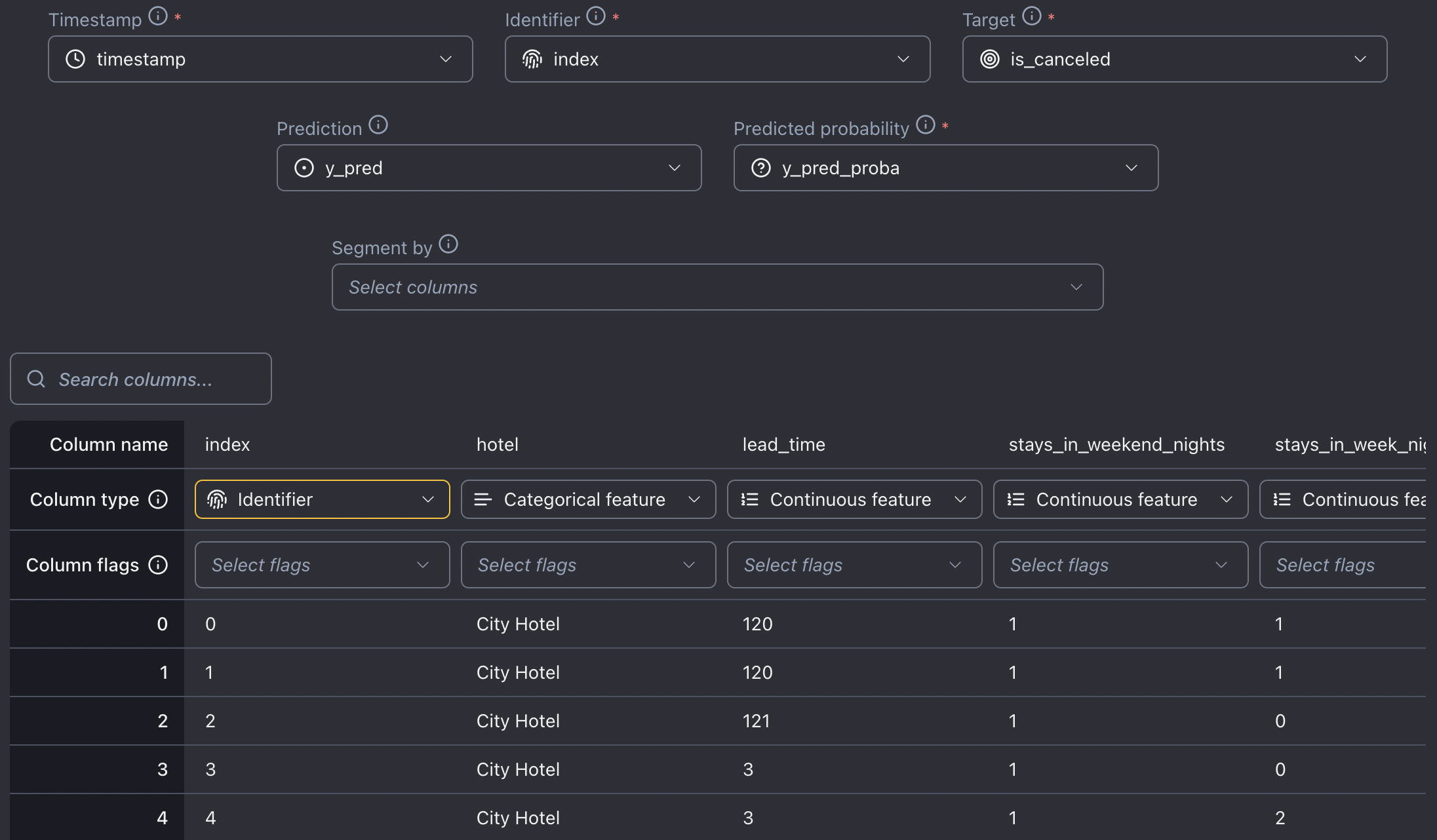

Configure the reference dataset. The uploaded reference data contains the following:

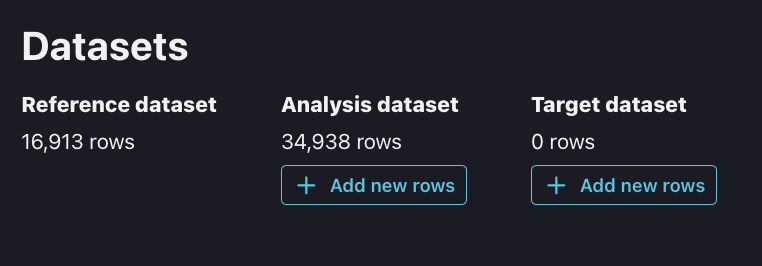

Timestamp - when the booking was made. The reference data spans from May to September 2016, while the analysis data covers October 2016 to August 2017.

Identifier - a unique identifier for each row in the dataset.

Target - ground truth/labels. In this example, the target is whether a booking is canceled or not. Notice that the target is not available in the analysis set. In this way, we simulate a real-world scenario where the ground truth is only available after some time.

Predictions - the predicted class, that is, whether the booking will be canceled or not.

Predicted probability - the scores output by the model.

Model inputs - 27 features describing the hotel booking, including the customer's country of origin, age, and children.

Optionally indicate the columns you wish to use for segmentation. This can be done by selecting specific columns using the Segment by dropdown menu or by selecting the Segment by flag for specific columns.

Upload the analysis data in the same way you uploaded the reference dataset.

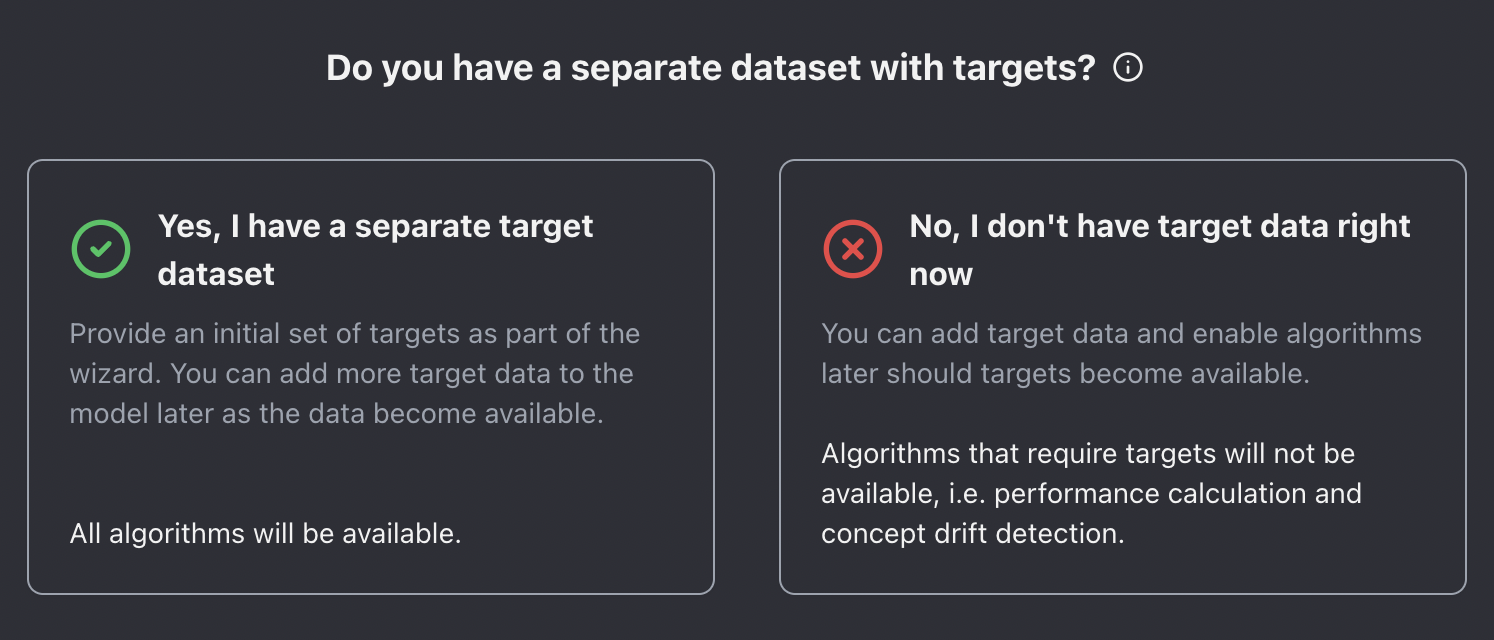

Next, upload the target data if it is available (in our working example, we don't have access to target data).

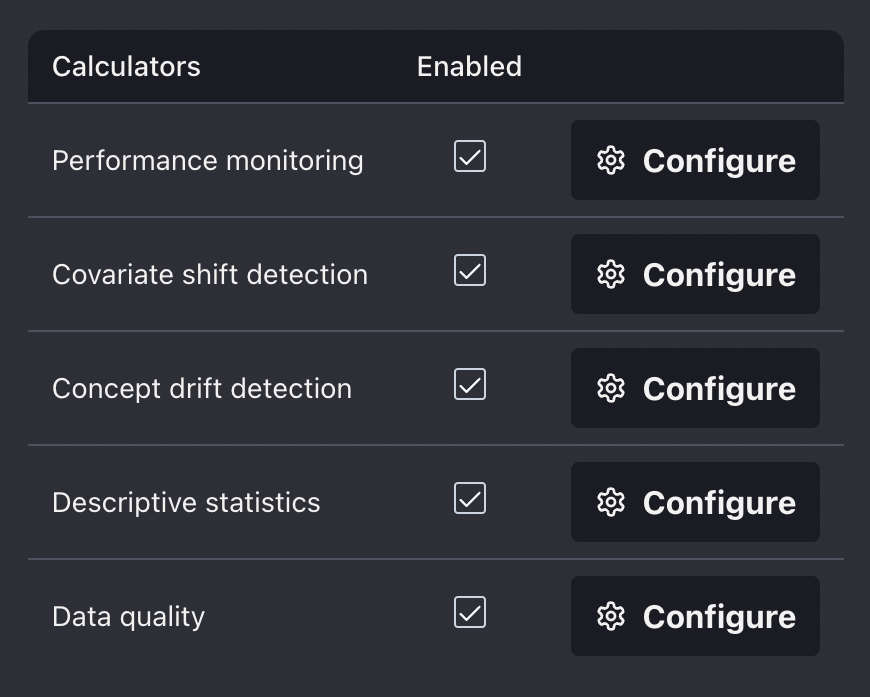

Configure the metrics you wish to evaluate.

Finally, review and upload the model.

To add the model with the NannyML Cloud SDK instead of the web interface, follow the initial steps outlined in the SDK documentation. This will guide you through installing the SDK library and obtaining the NannyML Cloud URL and API Token.

After successful installation, create a Python script, import the SDK and Pandas library, and add the credentials.

For demonstration purposes, we recommend using a Jupyter or Colab notebook to upload data with the SDK instead of a Python script.

Now, we will load reference and analysis data.

We can now use the Schema class together with the from_df() method to configure the schema of the model.

Then, we create a new model with the Model.create() method, setting the chunk period to monthly and accuracy as the main performance metric.

And voila, the model should now be available in your Model Overview dashboard! 🚀

Estimated performance

ML models are deployed to production once their performance has been validated and tested. This usually takes place in the model development phase. The main goal of ML model monitoring is to continuously verify whether the model maintains its anticipated performance (which is not the case most of the time).

Monitoring performance is relatively straightforward when targets are available, but in many use cases like demand forecasting or insurance pricing, the labels are delayed, costly, or impossible to get. In such scenarios, estimating performance is the only way to verify if the model is still working properly.
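This guide doesn't describe the estimation algorithm NannyML Cloud uses. To give a feel for how accuracy can be estimated without labels, here is a minimal sketch of one common idea. It assumes the predicted probabilities are well calibrated, so each probability can be read as the chance that the prediction is correct. The `estimate_accuracy` helper and the sample values are hypothetical.

```python
def estimate_accuracy(predictions, probabilities):
    """Estimate accuracy without labels from calibrated probabilities.

    For a row predicted as class 1, the chance of being correct is p;
    for a row predicted as class 0, it is 1 - p.
    """
    if len(predictions) != len(probabilities):
        raise ValueError("predictions and probabilities must have equal length")
    if not predictions:
        raise ValueError("need at least one row")
    expected_correct = [
        p if pred == 1 else 1.0 - p
        for pred, p in zip(predictions, probabilities)
    ]
    return sum(expected_correct) / len(expected_correct)

# Hypothetical chunk of four bookings: predicted class and P(canceled).
chunk_predictions = [1, 0, 1, 0]
chunk_probabilities = [0.9, 0.2, 0.6, 0.4]
estimated = estimate_accuracy(chunk_predictions, chunk_probabilities)
```

The estimate is only as good as the calibration: if the model is overconfident, the estimated accuracy will be too optimistic.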

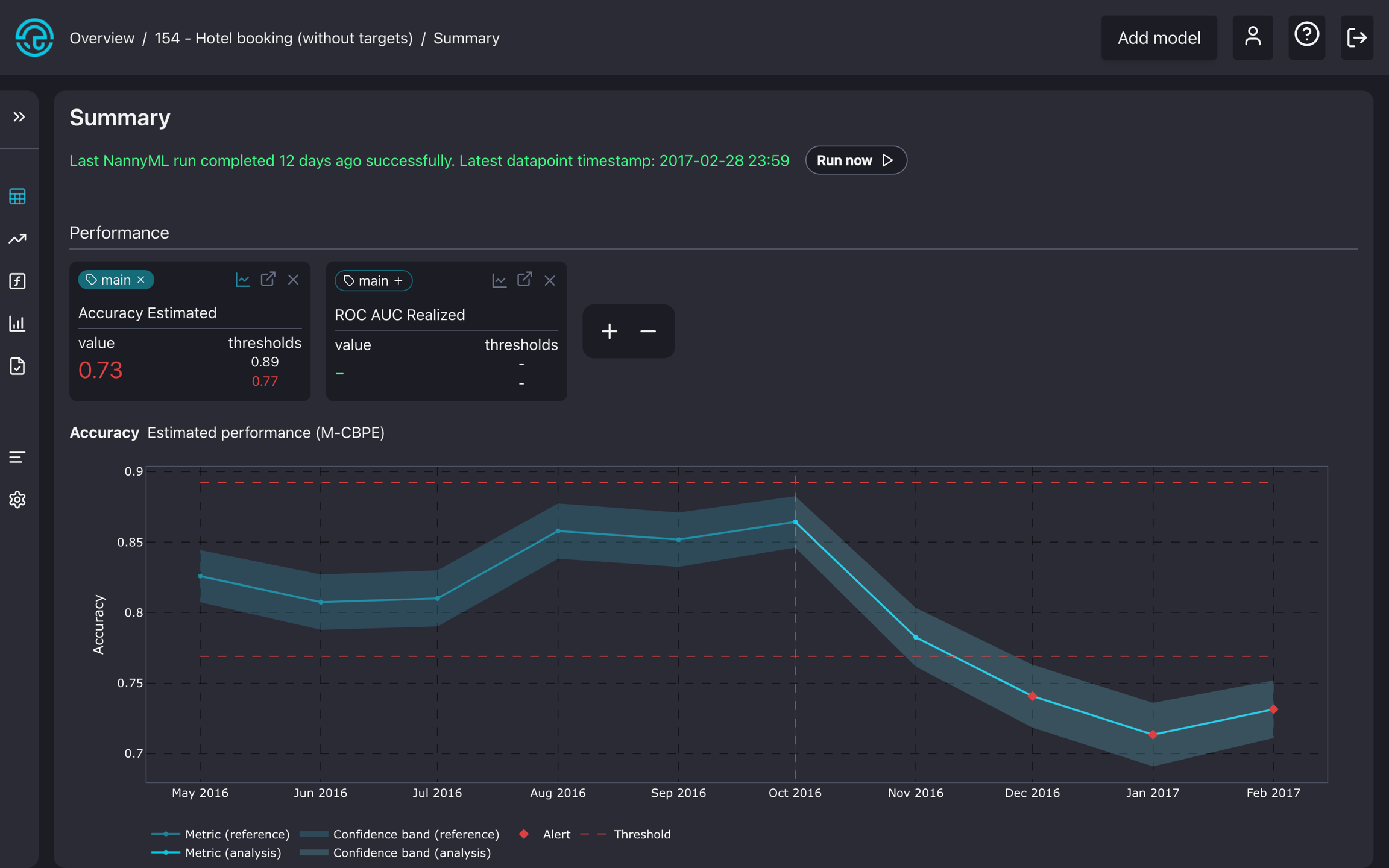

Let's see the estimated performance of our model on the summary page. The model summary shows signs of performance degradation in the last chunks of performance estimation.

Also, the PCA reconstruction error from the multivariate drift detection method seems to be higher than the given thresholds.

Notice that concept shift detection is not available since it requires ground truth data in the analysis set.

Multivariate drift detection is the first step in covariate shift detection, focusing on detecting any changes in the data structure over time. The accuracy and reconstruction error plots show alerts in the last three months, which suggests that covariate shift may be responsible for the performance drop. But we still don't know what real-world changes are causing this. To figure that out, we need to analyze the drifting features more deeply.
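To make the idea of PCA reconstruction error concrete, here is a deliberately simplified sketch on two features and one principal component. It is not NannyML's implementation (which works on many features and involves preprocessing not shown here); the helper names and data points are hypothetical. The principal axis is fitted on reference data only, and each point's error is its distance from that axis after compressing and reconstructing it.

```python
import math
from statistics import fmean

def fit_principal_axis(points):
    """Return (mean_x, mean_y, direction) of the first principal component."""
    mean_x = fmean(x for x, _ in points)
    mean_y = fmean(y for _, y in points)
    var_x = fmean((x - mean_x) ** 2 for x, _ in points)
    var_y = fmean((y - mean_y) ** 2 for _, y in points)
    cov_xy = fmean((x - mean_x) * (y - mean_y) for x, y in points)
    # Closed-form orientation of the major axis of a 2x2 covariance matrix.
    angle = 0.5 * math.atan2(2 * cov_xy, var_x - var_y)
    return mean_x, mean_y, (math.cos(angle), math.sin(angle))

def mean_reconstruction_error(points, axis):
    """Compress each point to one score, reconstruct it, and average the error."""
    mean_x, mean_y, (dx, dy) = axis
    errors = []
    for x, y in points:
        cx, cy = x - mean_x, y - mean_y
        score = cx * dx + cy * dy            # compression to one dimension
        rx, ry = score * dx, score * dy      # reconstruction in two dimensions
        errors.append(math.hypot(cx - rx, cy - ry))
    return fmean(errors)

# Reference: the two features move together. Analysis: they move in opposition.
reference_points = [(-2.0, -2.0), (-1.0, -1.0), (0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
analysis_points = [(-2.0, 2.0), (-1.0, 1.0), (0.0, 0.0), (1.0, -1.0), (2.0, -2.0)]

axis = fit_principal_axis(reference_points)
reference_error = mean_reconstruction_error(reference_points, axis)
analysis_error = mean_reconstruction_error(analysis_points, axis)
```

Each feature takes the same values in both sets, so a per-feature check would see nothing, yet the reconstruction error jumps because the relationship between the features changed. That is the kind of structural change multivariate drift detection is meant to catch.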

Why did the performance drop?

Once we’ve identified a performance issue, we need to troubleshoot it. The first step is to look into potential distribution shifts for all the features in the covariate shift panel.

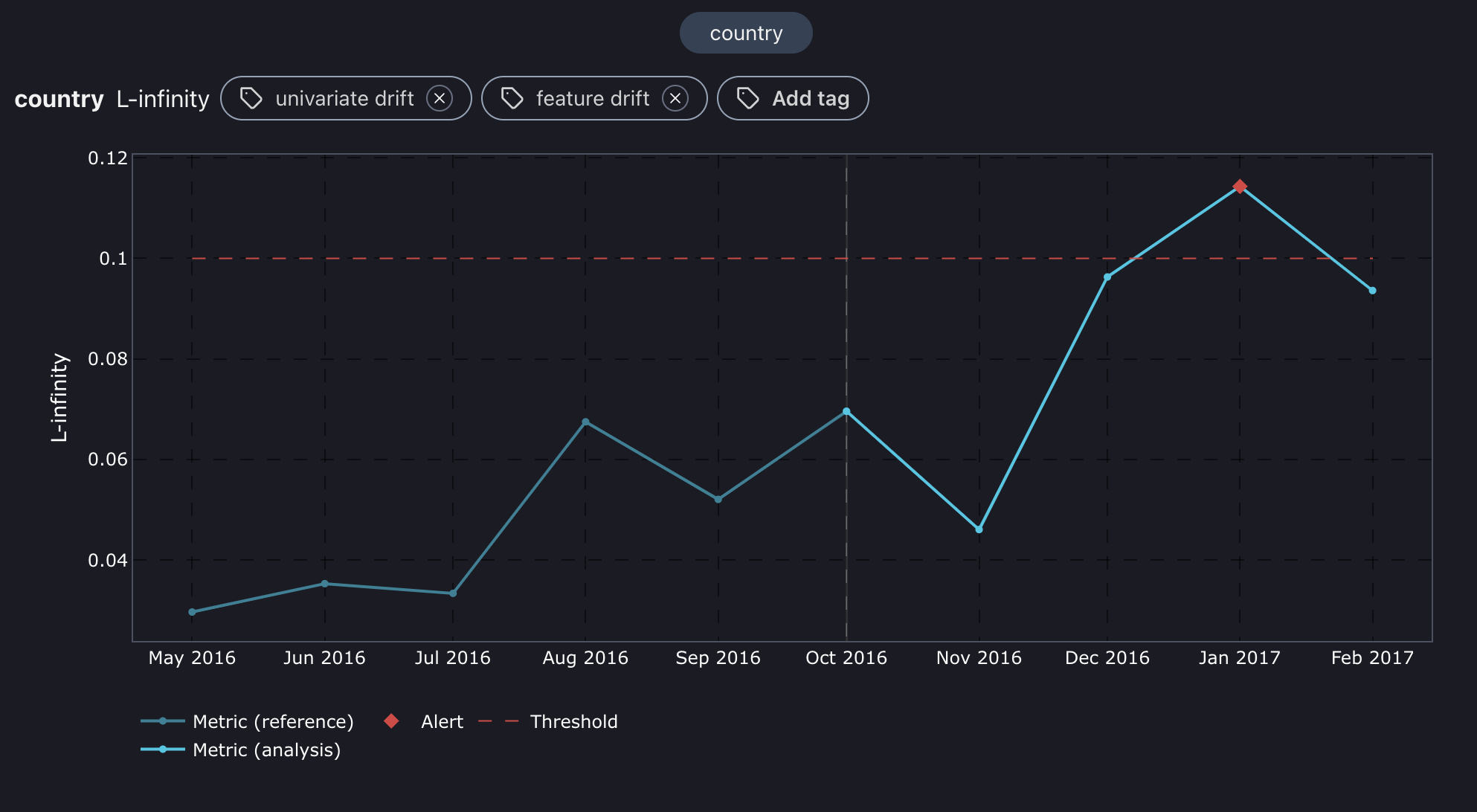

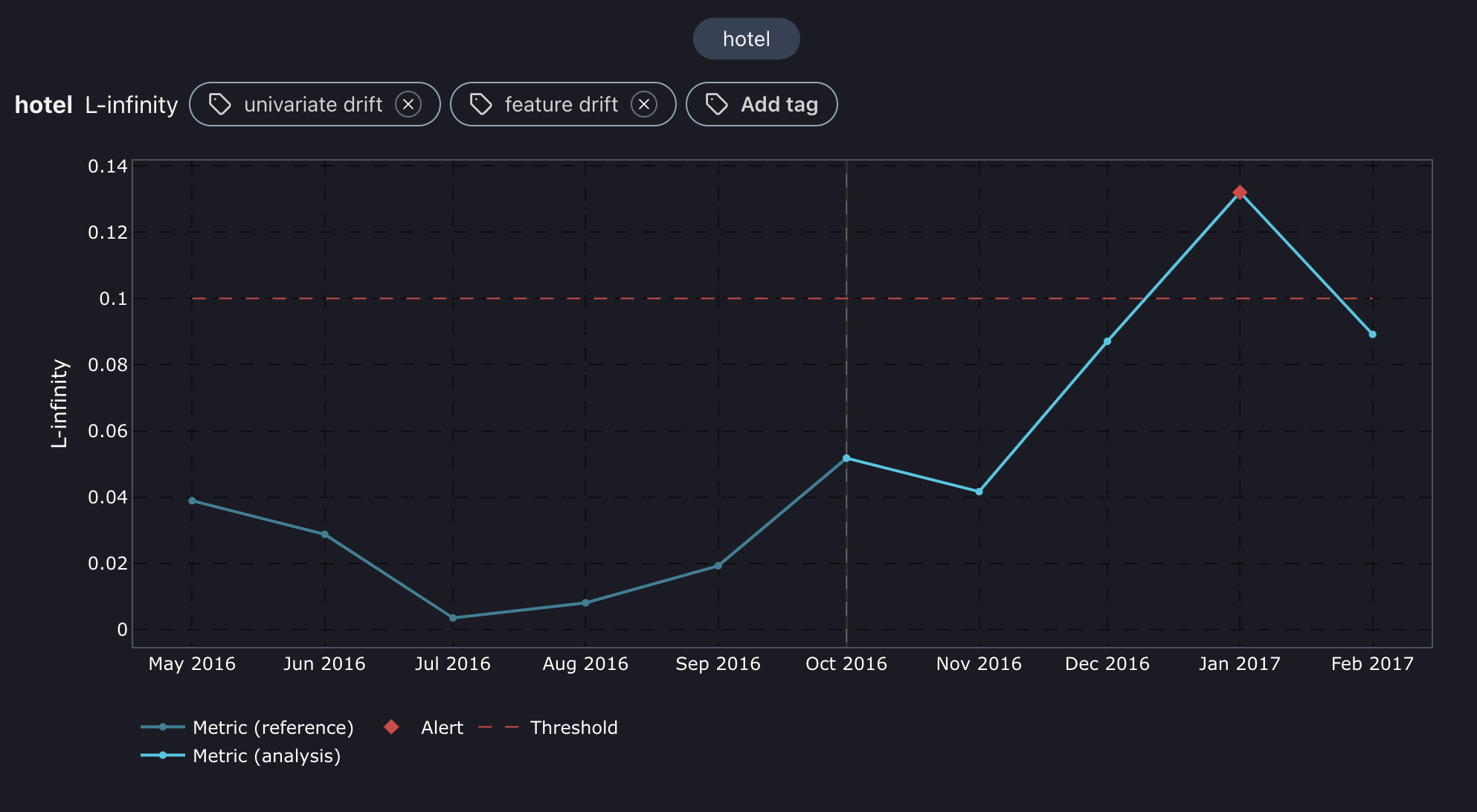

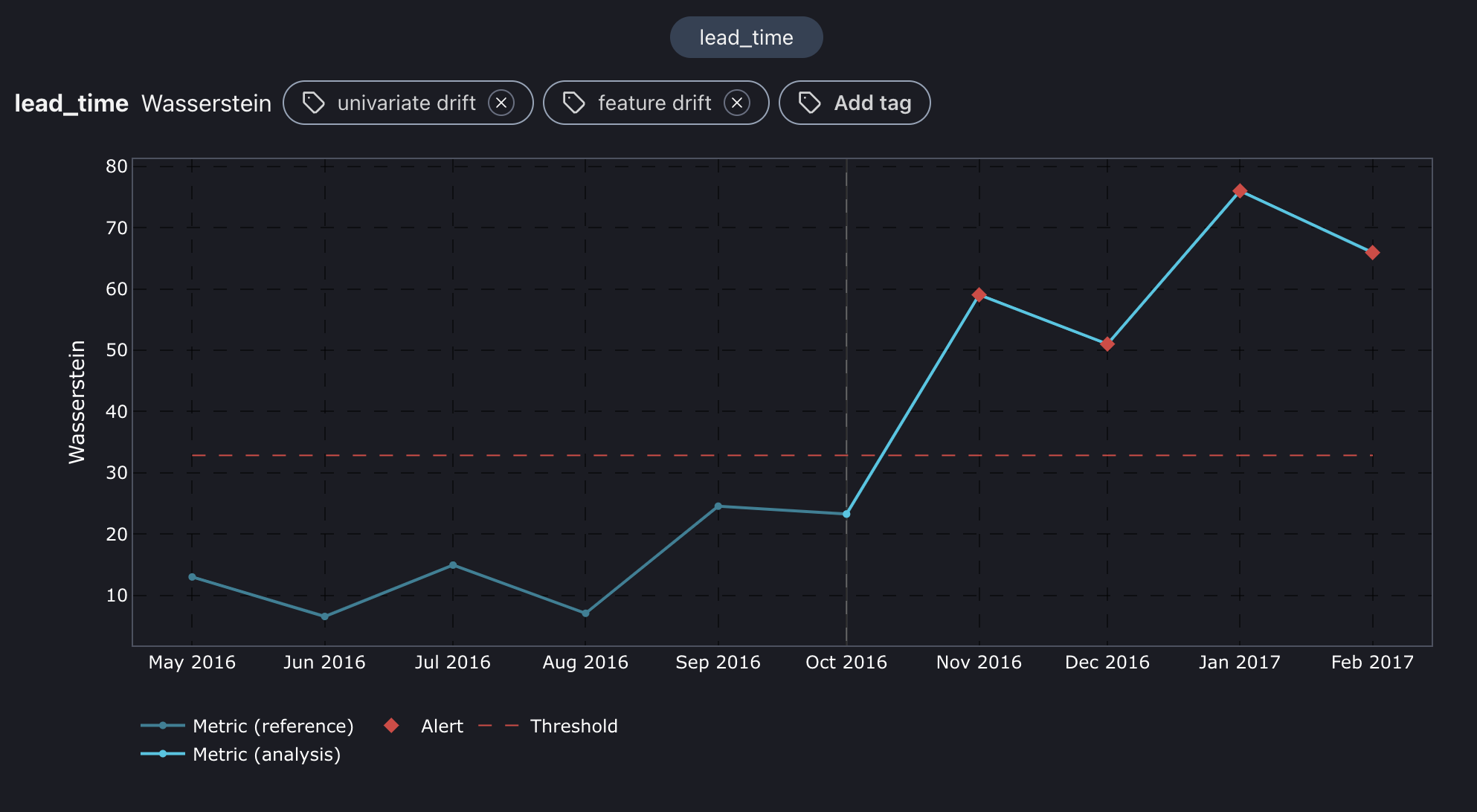

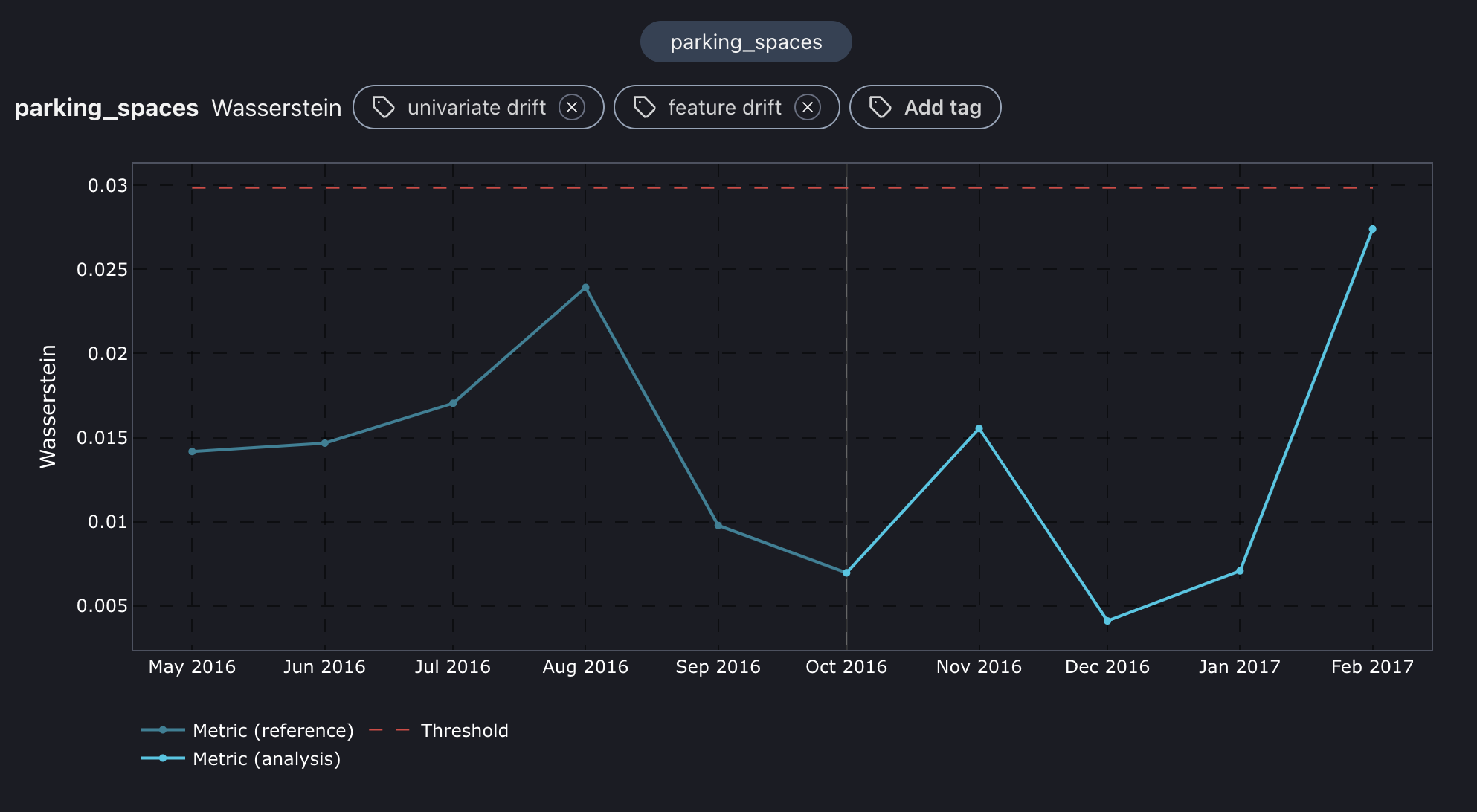

We have six methods to determine the amount of shift in each feature. To begin the process, we focus on the four most important features: hotel, lead_time, parking_spaces, and country. These features were determined using the feature importance method after the model was trained. To streamline the analysis, we use the L-infinity distance for categorical features and the Wasserstein distance for continuous features.
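For intuition about the two distances, here is a minimal standard-library sketch. The L-infinity distance is the largest absolute difference in relative frequency across categories, and the one-dimensional Wasserstein distance is the area between the two empirical cumulative distribution functions. The function names and sample values are hypothetical, and this is not the code NannyML Cloud runs.

```python
from bisect import bisect_right
from collections import Counter

def l_infinity_distance(reference, analysis):
    """Largest absolute difference in category frequency between two samples."""
    ref_counts = Counter(reference)
    ana_counts = Counter(analysis)
    categories = set(ref_counts) | set(ana_counts)
    return max(
        abs(ref_counts[c] / len(reference) - ana_counts[c] / len(analysis))
        for c in categories
    )

def wasserstein_distance_1d(reference, analysis):
    """Area between the empirical CDFs of two one-dimensional samples."""
    ref_sorted = sorted(reference)
    ana_sorted = sorted(analysis)
    all_values = sorted(ref_sorted + ana_sorted)
    total = 0.0
    for left, right in zip(all_values, all_values[1:]):
        cdf_ref = bisect_right(ref_sorted, left) / len(ref_sorted)
        cdf_ana = bisect_right(ana_sorted, left) / len(ana_sorted)
        total += abs(cdf_ref - cdf_ana) * (right - left)
    return total

# Hypothetical chunks: hotel type (categorical) and lead time in days (continuous).
hotel_shift = l_infinity_distance(
    ["City", "City", "Resort", "Resort"],
    ["City", "Resort", "Resort", "Resort"],
)
lead_time_shift = wasserstein_distance_1d([10, 20, 40], [60, 70, 90])
```

The Wasserstein distance is expressed in the feature's own units: here every lead time moved by 50 days, and the distance is 50.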

For the features country and hotel, we noticed a shift in February that lines up with a drop in performance. Also, lead_time began to drift from December to March, which is exactly when we started seeing a gradual decline in accuracy. So, it looks like the root cause is linked to the drift in these three features. Now, let's reason more broadly about these shifts and relate them to the customer's behavior around that time.

Shift explanations

The root cause is associated with the drift in three key features: lead_time, hotel, and country. From November to March, it's common for temperatures in northern European countries like Germany, Sweden, and the Netherlands to drop below 0 °C. Additionally, many children had their winter break at that time, allowing them to travel with their parents. It's possible that tourists from northern Europe chose to visit Portugal during this period to escape the cold, which would explain the shift in the distribution of the country feature.

Furthermore, Portugal's sunny weather and temperatures around 20 °C are mainly found in the Algarve, the southern part of the country, as opposed to Lisbon, where temperatures are around 15 °C. This accounts for the shift in the distribution of the hotel feature, as more people book hotels in the Algarve. Lastly, winter getaways by foreign visitors are typically planned well in advance to secure travel tickets, make train reservations, request time off from work, and make other preparations. This advance planning explains the significant shift in the lead_time feature.

These changes ultimately resulted in a decrease in the performance of the model during the December to March period.

Comparing estimated and realized performance

We used performance estimation before since our analysis set didn't have targets. Now, we can add the targets to validate the estimations and see whether concept drift has also affected performance.

Go to Model settings.

Click on the Add new rows button.

Upload the target dataset via the following link:

https://raw.githubusercontent.com/NannyML/sample_datasets/main/hotel_booking_dataset/hotel_booking_gt_march.csv

Run the analysis.

We first need to load our target data before we upload it to the NannyML Cloud. Once we've done that, just run NannyML Cloud again to view the realized performance and concept shift results.

We can see that the realized performance also dropped in December, January, and February, confirming that the estimations were accurate. Let's check whether concept drift is present in our data.
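Realized performance is what you get once the late-arriving targets are joined back to the predictions. The sketch below joins on the identifier and scores each monthly chunk. It is only an illustration: the row layout, the `realized_accuracy_by_month` helper, and the sample values are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

def realized_accuracy_by_month(analysis_rows, targets_by_id):
    """Join predictions with targets by identifier and score each monthly chunk."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for row in analysis_rows:
        target = targets_by_id.get(row["id"])
        if target is None:
            continue  # ground truth has not arrived for this row yet
        month = row["timestamp"].strftime("%Y-%m")
        total[month] += 1
        correct[month] += int(row["prediction"] == target)
    return {month: correct[month] / total[month] for month in sorted(total)}

# Hypothetical analysis rows and targets keyed by identifier.
analysis_rows = [
    {"id": 1, "timestamp": datetime(2016, 12, 5), "prediction": 1},
    {"id": 2, "timestamp": datetime(2016, 12, 20), "prediction": 0},
    {"id": 3, "timestamp": datetime(2017, 1, 10), "prediction": 1},
    {"id": 4, "timestamp": datetime(2017, 1, 15), "prediction": 0},
]
targets_by_id = {1: 1, 2: 1, 3: 1}  # no target yet for id 4

realized = realized_accuracy_by_month(analysis_rows, targets_by_id)
```

Rows without a target are skipped rather than counted as errors, so a chunk's realized accuracy only reflects bookings whose outcome is already known.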

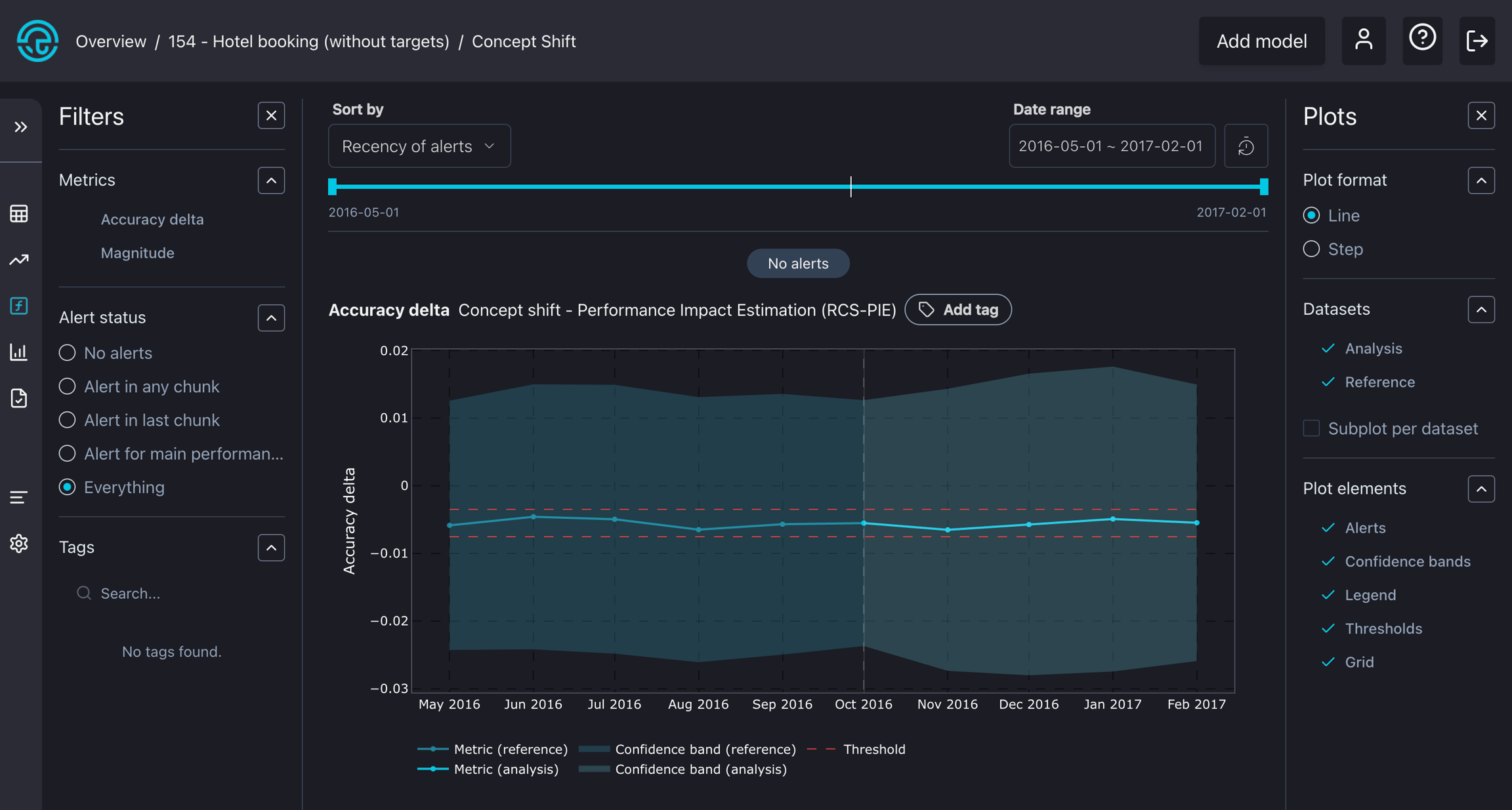

Concept drift detection

The graph illustrates the impact of concept drift on performance, with the x-axis representing the change in accuracy due to concept drift. As we can see, it's less than -0.01 and remains within the specified thresholds, not triggering any alerts. This suggests that concept drift did not contribute to the drop in accuracy during that period; only the covariate shift analyzed earlier did.
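This guide doesn't say how the thresholds are set. As a sketch of how an alert check like this could work, the example below assumes the thresholds are derived from reference chunks as the mean plus or minus three standard deviations. Both the rule and all the values are assumptions for illustration.

```python
from statistics import mean, pstdev

def thresholds_from_reference(reference_values, multiplier=3.0):
    """Lower and upper thresholds as mean +/- multiplier * standard deviation."""
    center = mean(reference_values)
    spread = pstdev(reference_values)
    return center - multiplier * spread, center + multiplier * spread

def find_alerts(values_by_chunk, lower, upper):
    """Return the chunks whose value falls outside [lower, upper]."""
    return [
        chunk
        for chunk, value in values_by_chunk.items()
        if not lower <= value <= upper
    ]

# Hypothetical per-chunk accuracy changes attributed to concept drift.
reference_changes = [0.002, -0.004, 0.001, -0.003, 0.004]
analysis_changes = {"2016-12": -0.006, "2017-01": -0.008, "2017-02": -0.005}

lower, upper = thresholds_from_reference(reference_changes)
alerts = find_alerts(analysis_changes, lower, upper)
```

With these made-up numbers every analysis chunk stays inside the thresholds, so `alerts` is empty, mirroring the situation in the graph.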

What's next?

Discover what else you can do with NannyML Cloud.

🧑‍💻 Tutorials

Explore how to use NannyML Cloud with text and images

👷‍♂️ Miscellaneous

Learn how the NannyML Cloud works under the hood