Version 0.22.0

Release Notes

We're happy to introduce our latest product iteration, version 0.22.0.

This version brings UI improvements and adds new metrics & algorithms for multiclass classification problems. Let's see what's new!

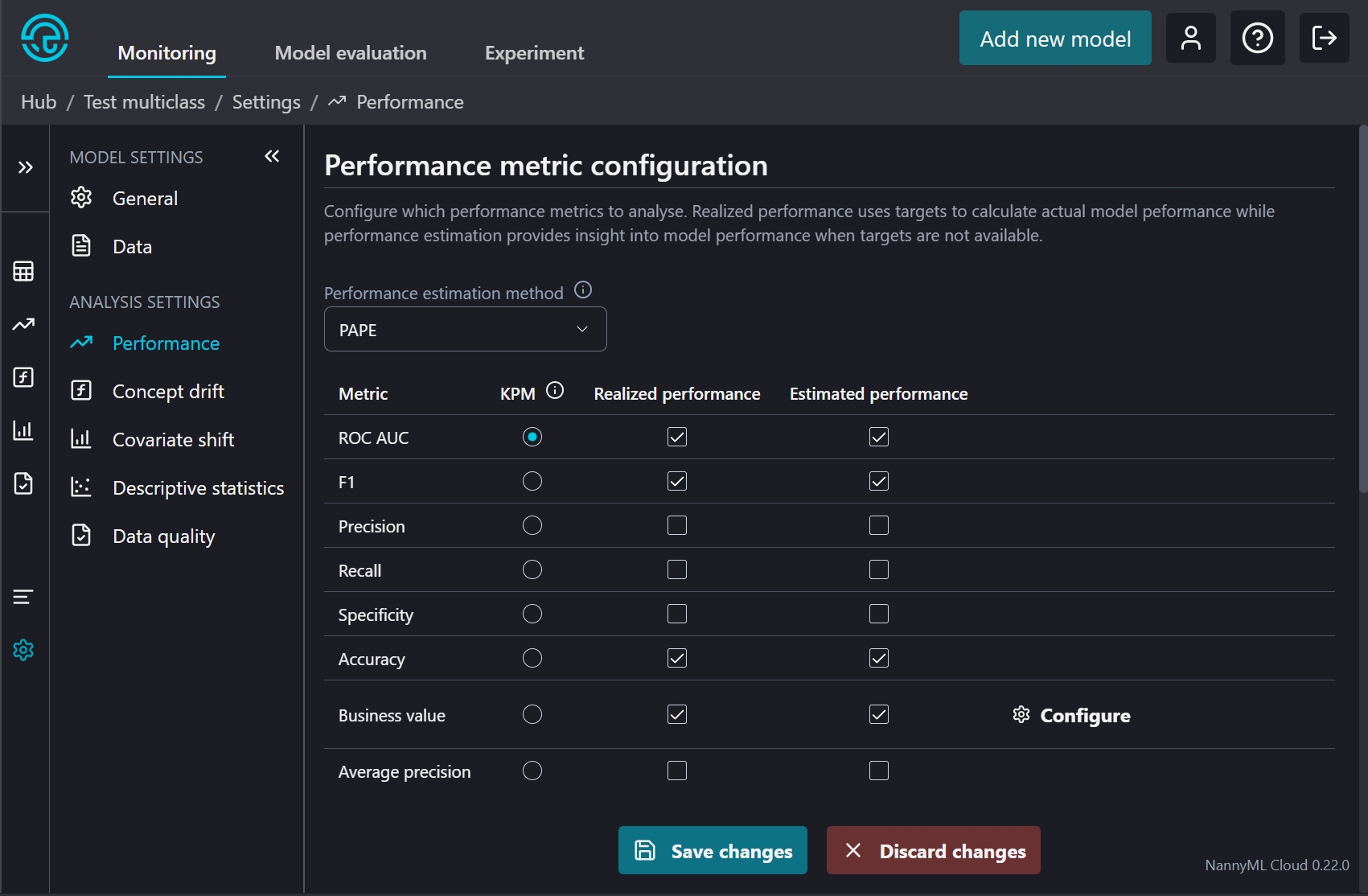

Business value for multiclass classification problems

We've supported business value for binary classification problems for a while now. This release also makes it available for multiclass classification problems. It allows all stakeholders to understand the business impact of your model.

To enable business value for a new model, select it in the realized or estimated performance options in the model creation wizard. For existing models, enable it on the model settings page.

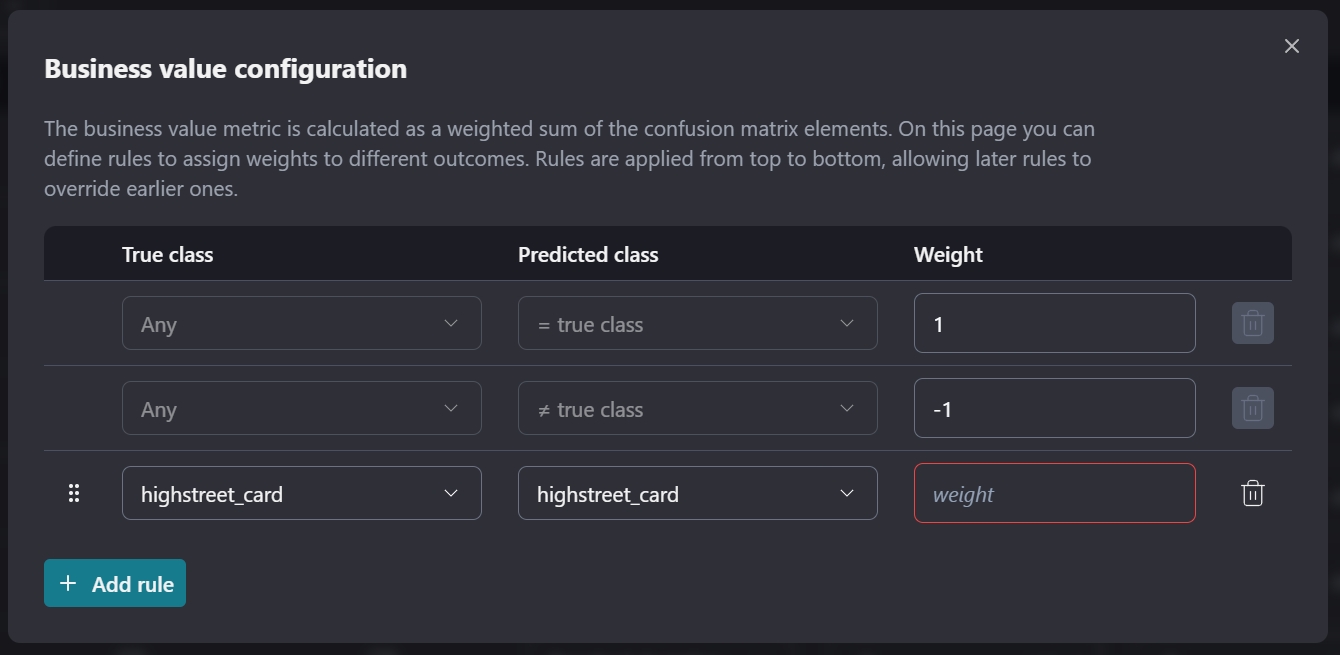

Once business value is enabled, configure rules to calculate business impact from the confusion matrix. Two default rules are in place to set a weight for correct and incorrect predictions. You can set values appropriate to your use case and add additional rules to set weights for specific outcomes.

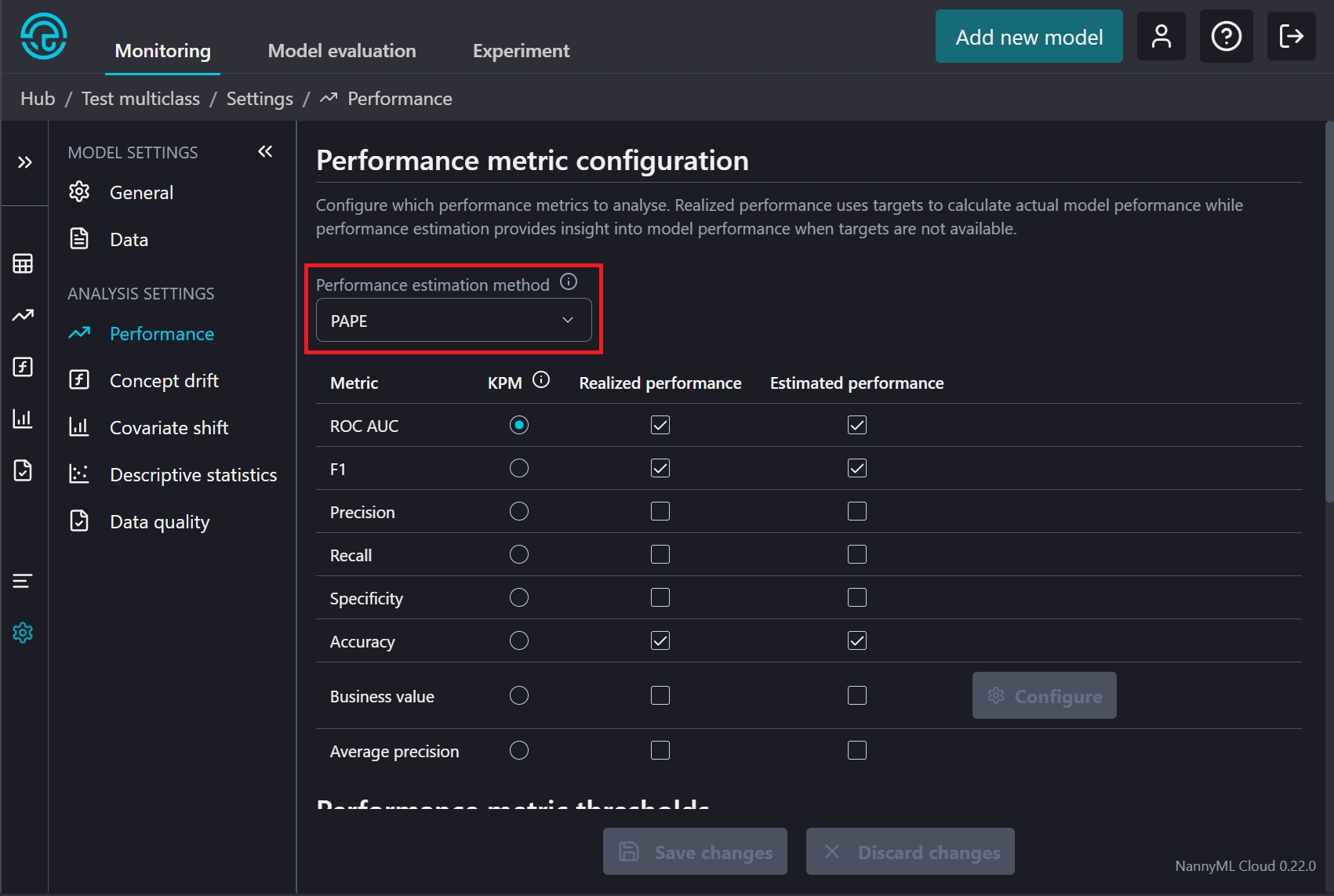

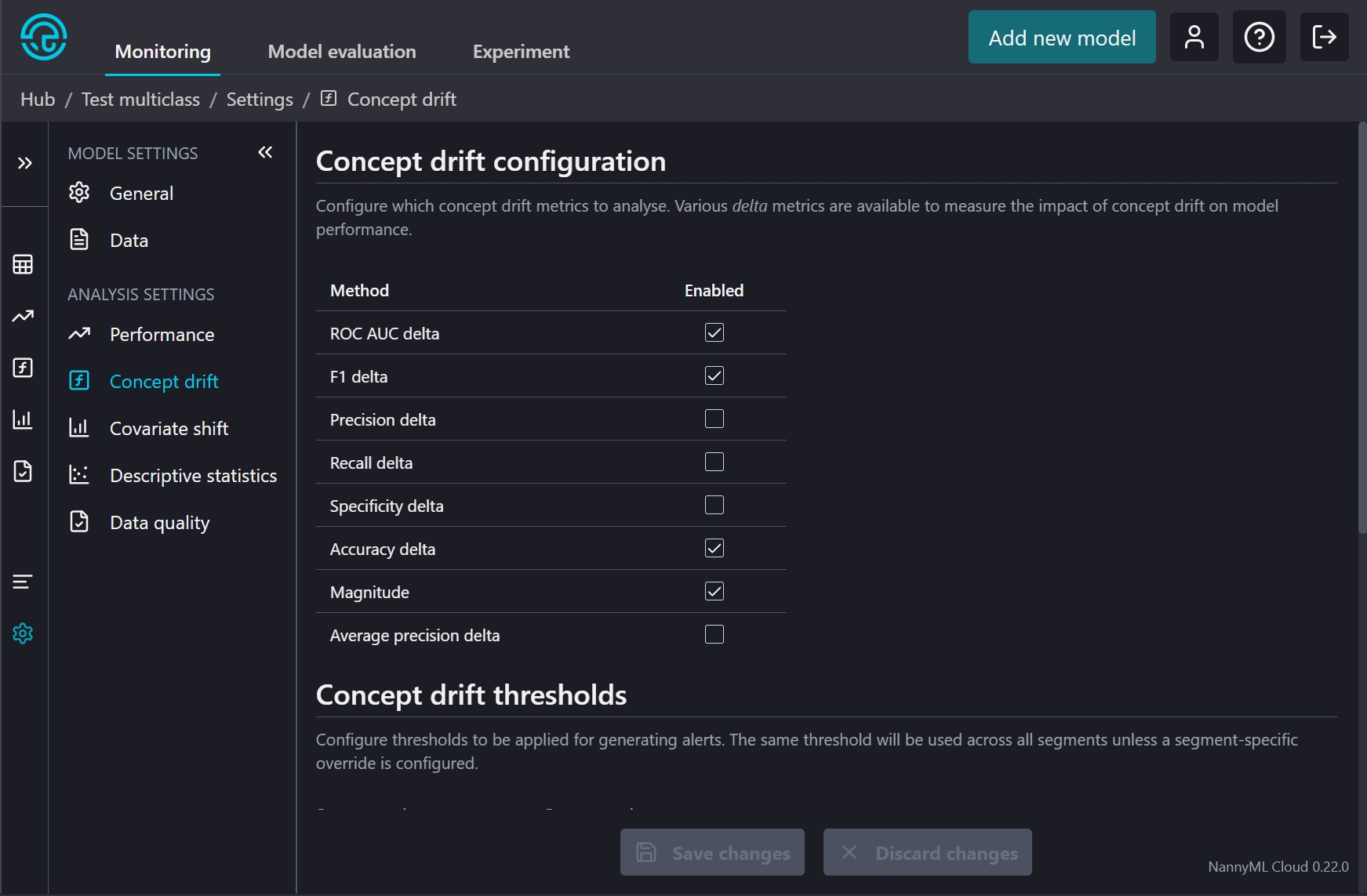
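To make the rule-based calculation more concrete, here is a minimal sketch of how a business value could be derived from a multiclass confusion matrix. It illustrates the idea only and is not the NannyML Cloud implementation: the function names, the class labels and the weights are all hypothetical, and we assume business value is the sum of each confusion matrix cell multiplied by its configured weight.

```python
import numpy as np


def build_value_matrix(classes, correct, incorrect, extra_rules=()):
    """Build a weight per (true class, predicted class) cell.

    `correct` and `incorrect` mimic the two default rules; `extra_rules`
    holds hypothetical (true_class, predicted_class, weight) overrides.
    """
    n = len(classes)
    values = np.full((n, n), float(incorrect))
    np.fill_diagonal(values, float(correct))
    for true_class, predicted_class, weight in extra_rules:
        values[classes.index(true_class), classes.index(predicted_class)] = weight
    return values


def confusion_matrix(y_true, y_pred, classes):
    """Count outcomes: rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(classes)}
    counts = np.zeros((len(classes), len(classes)), dtype=int)
    for true_label, predicted_label in zip(y_true, y_pred):
        counts[index[true_label], index[predicted_label]] += 1
    return counts


def business_value(counts, values):
    """Assumed definition: sum of cell count times cell weight."""
    return float((counts * values).sum())


# Hypothetical example data and weights.
classes = ["cat", "dog", "bird"]
values = build_value_matrix(
    classes,
    correct=1.0,      # default rule for correct predictions
    incorrect=-1.0,   # default rule for incorrect predictions
    extra_rules=[("bird", "cat", -5.0)],  # a specific, costlier outcome
)
y_true = ["cat", "dog", "bird", "bird", "dog"]
y_pred = ["cat", "dog", "cat", "bird", "cat"]

counts = confusion_matrix(y_true, y_pred, classes)
total = business_value(counts, values)
print(total)
```

In this toy data, three correct predictions earn 1 each, the bird predicted as cat costs 5, and the remaining mistake costs 1, so the specific rule dominates the total.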

New algorithms for multiclass classification problems

We recently added two new algorithms for binary classification problems: PAPE and RCD. With this release, both are now also available for multiclass problems!

For newly created multiclass models, PAPE and RCD will be enabled by default. For existing models, you can enable them in the model settings:

Select PAPE as the performance estimation method in the performance settings.

Select the concept drift metrics to calculate in the concept drift settings.

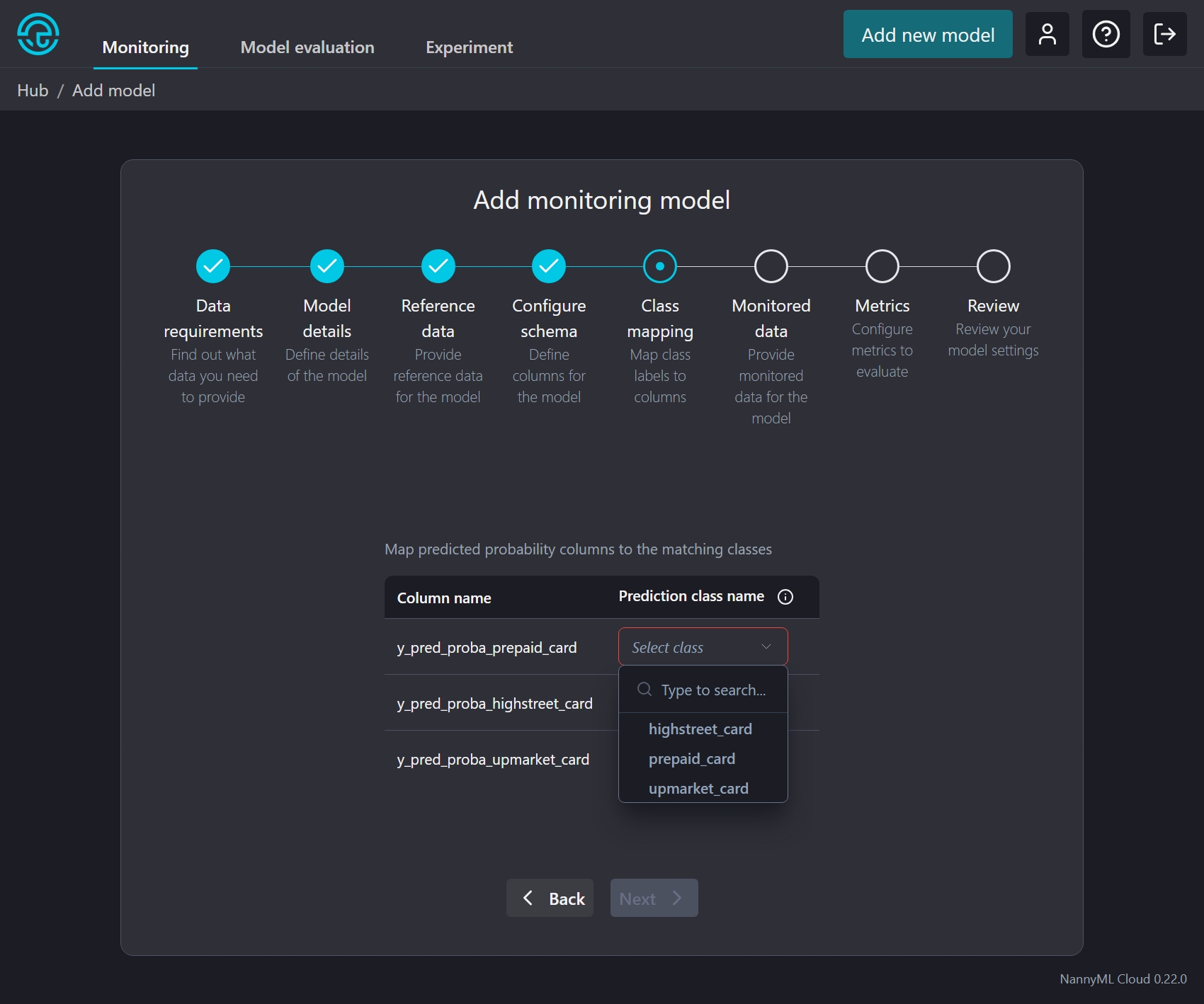

Improved class mapping

As part of the model creation wizard for multiclass classification models, each predicted probability column must be mapped to its associated class. Previously this was done using a text field where the user was expected to fill in the class name. Imagine trying to remember all the class names, not to mention the potential for typos. Yikes!

We've changed this to a much easier-to-use dropdown menu. Behind the scenes, we collect the available classes from the target column after you configure the schema. Depending on the dataset size, this can take a little time. Generally it should be pretty fast because we stop as soon as we find enough classes to match the number of predicted probability columns. But do make sure all classes are represented in your reference data: if one is missing, we never reach that number and have to scan the whole target column, so you may be waiting a while 😅.
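The early-stopping behaviour described above can be sketched roughly as follows. This is an illustration under our own assumptions, not the actual NannyML Cloud code: `collect_classes` and its parameters are hypothetical names, and the target column is modelled as a plain Python iterable.

```python
def collect_classes(targets, expected_count):
    """Scan target values until `expected_count` distinct classes are found.

    Returns the classes in order of first appearance and the number of
    rows that had to be scanned.
    """
    seen = {}  # a dict keeps insertion order, so classes stay in first-seen order
    rows_scanned = 0
    for value in targets:
        rows_scanned += 1
        seen.setdefault(value, None)
        if len(seen) >= expected_count:
            break  # enough classes to match the predicted probability columns
    return list(seen), rows_scanned


# All three classes are present: the scan stops early.
found, scanned = collect_classes(["a", "b", "a", "c", "b", "a"], expected_count=3)

# One class is missing: the whole column is scanned without reaching the target.
partial, scanned_all = collect_classes(["a", "b", "a", "b"], expected_count=3)
```

The second call shows why a class missing from the reference data is costly: the stop condition is never met, so every row is read.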

UX improvements

With every release we aim to improve the user experience, based on your valuable feedback. Some of the highlights in this release:

Dates can be annoying. We've all been there: is 1/2/2024 the 1st of February 2024, or the 2nd of January 2024? Doubt no more! All dates in NannyML Cloud are now displayed in year-month-day format, e.g. 2024-02-01.

Clicking on a card in the model summary page will now update the filters on the result page so that you only see that metric.

Renamed concept shift to concept drift.

Updated the log page to visually indicate skipped calculators in the timeline.

Added an appropriate error screen when unable to connect to the NannyML Cloud server.
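As a small aside on the date format change mentioned in the highlights, the snippet below shows why year-month-day is unambiguous, using only Python's standard library. It is a generic illustration and says nothing about how NannyML Cloud formats dates internally; the variable names are ours.

```python
from datetime import date

# The 1st of February 2024, written without any day/month ambiguity.
first_of_february = date(2024, 2, 1)
iso_text = first_of_february.isoformat()

# The text parses back to exactly the same date.
round_trip = date.fromisoformat(iso_text)

# A handy side effect: sorting the strings sorts the dates chronologically.
labels = ["2024-02-01", "2023-12-31", "2024-01-02"]
ordered = sorted(labels)
```

Because the most significant component comes first, plain text sorting and chronological sorting agree, which is convenient in tables and file names.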