Engineering

Deployment architecture

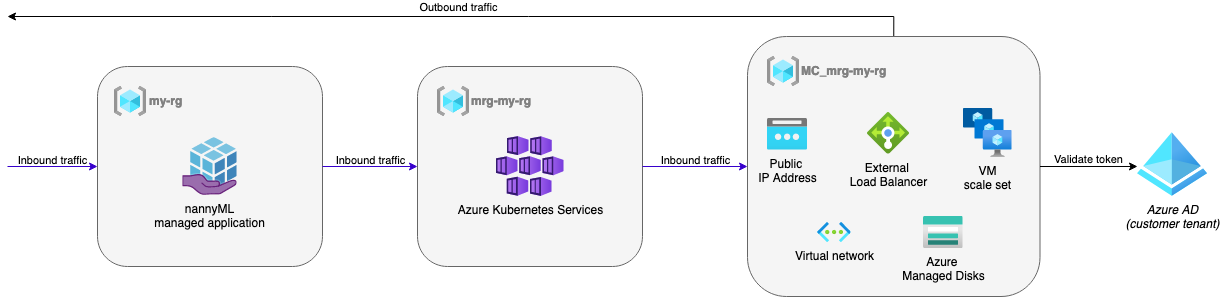

NannyML Cloud is deployed as a containerized application on a dedicated Kubernetes cluster, which makes it scalable, robust, and resource-efficient.

Application configuration and model inputs/outputs are stored in databases running in the cluster. The volumes are persisted using Azure Managed Disks.

Network traffic is routed through an Azure Load Balancer (ALB). The ALB instance has a dynamic public IP assigned to it. It is also exposed using a prefix of your choosing as <dns-prefix>.<location>.cloudapp.azure.com, e.g., my-nannyml.westeurope.cloudapp.azure.com.
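As a rough illustration of the naming scheme above, the following sketch builds the public hostname from a DNS prefix and an Azure location. The function name `cluster_hostname` is hypothetical, and the validation pattern is an assumption based on the usual Azure rules for public IP DNS labels (lowercase letters, digits, and hyphens, 3 to 63 characters); check the Azure documentation before relying on it.

```python
import re

# Assumed rule for Azure public IP DNS labels: starts with a lowercase letter,
# ends with a letter or digit, hyphens allowed in between, 3-63 characters.
_DNS_LABEL = re.compile(r"^[a-z][a-z0-9-]{1,61}[a-z0-9]$")


def cluster_hostname(dns_prefix: str, location: str) -> str:
    """Return <dns-prefix>.<location>.cloudapp.azure.com for the given inputs."""
    if not _DNS_LABEL.match(dns_prefix):
        raise ValueError(f"invalid DNS prefix: {dns_prefix!r}")
    # Azure location names such as "westeurope" are lowercase alphanumerics.
    if not re.fullmatch(r"[a-z0-9]+", location):
        raise ValueError(f"invalid location: {location!r}")
    return f"{dns_prefix}.{location}.cloudapp.azure.com"


if __name__ == "__main__":
    print(cluster_hostname("my-nannyml", "westeurope"))
```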

Application & Data security

All traffic between clients and the web application uses a TLS-encrypted connection. Certificates are provided by Let’s Encrypt and automatically refreshed.
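If you want to confirm the certificate setup from a client machine, a minimal sketch using only the Python standard library could look like this. The helper names (`fetch_certificate`, `issuer_organization`, `days_until_expiry`) are hypothetical and not part of NannyML; the hostname you pass in is whatever name your deployment is exposed under.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_certificate(host: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Open a verified TLS connection and return the peer certificate as a dict."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_organization(cert: dict) -> Optional[str]:
    """Extract the issuer's organizationName from a getpeercert()-style dict."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == "organizationName":
                return value
    return None


def days_until_expiry(cert: dict, now: Optional[float] = None) -> float:
    """Days left before the certificate's notAfter timestamp."""
    expires = ssl.cert_time_to_seconds(cert["notAfter"])
    current = time.time() if now is None else now
    return (expires - current) / 86400
```

Calling `fetch_certificate` on your deployment's hostname and passing the result to the two helpers lets you check that the issuer is Let’s Encrypt and that the automatic refresh keeps the expiry date comfortably in the future.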

Data at rest - i.e., written to disk or stored in databases - is written to Azure Managed Disks, where it is encrypted by default using Server-Side Encryption (SSE).

Only NannyML support team members can access the cluster, and only after you have granted explicit approval.

Backups & Disaster recovery

We’re in the process of implementing an automated backup solution.

NannyML is not intended to serve as your primary storage for model inputs/outputs. Please ensure you safeguard that data using alternative storage means (e.g., Azure Delta Lake).

Authentication & User management

NannyML integrates with Azure Active Directory (Azure AD) for user authentication.

After you register NannyML as an application in Azure AD, you can control user access to the NannyML Cloud application using Azure AD users and groups.

Prerequisites

A subscription and/or resource group in Azure where you have sufficient permissions for resource creation.

An application identifier and tenant ID, obtained by performing an “app registration” in Azure AD.
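Both identifiers from the app registration are GUIDs, so a quick sanity check before handing them over can catch copy-paste mistakes. The sketch below is illustrative only: the class `AzureAdRegistration` is hypothetical and not part of NannyML, and the URLs follow the standard Microsoft identity platform layout for a single tenant.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class AzureAdRegistration:
    """Identifiers produced by an Azure AD app registration (hypothetical helper)."""

    application_id: str  # also called "client ID" in the Azure portal
    tenant_id: str

    def __post_init__(self) -> None:
        # uuid.UUID is lenient about braces and hyphens, so this is a loose check.
        for label, value in (
            ("application_id", self.application_id),
            ("tenant_id", self.tenant_id),
        ):
            try:
                uuid.UUID(value)
            except ValueError as exc:
                raise ValueError(f"{label} is not a valid GUID: {value!r}") from exc

    @property
    def authority(self) -> str:
        """Tenant-specific sign-in authority on the Microsoft identity platform."""
        return f"https://login.microsoftonline.com/{self.tenant_id}"

    @property
    def openid_configuration_url(self) -> str:
        """OpenID Connect discovery document for this tenant (v2.0 endpoint)."""
        return f"{self.authority}/v2.0/.well-known/openid-configuration"
```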

Expected cost

The infrastructure cost of NannyML consists of three components: compute cost, network traffic cost, and storage cost. The compute cost will contribute the most to the overall cost. You can influence this cost by tuning the compute infrastructure used by NannyML. Factors to tune are:

Cluster size: set a fixed number of nodes for strict cost control or increase robustness and scalability by enabling cluster auto-scaling. Fewer nodes are cheaper; more nodes increase your computation power and failure tolerance.

Node type: select the VM size that best matches your workload. NannyML computations are typically not strictly time-bound but might use a lot of memory for larger datasets. Balance cost against a future-proof setup when selecting the appropriate CPU and memory sizes. Workloads in Kubernetes fail when running out of memory but are just throttled when hitting CPU limits.

This is what a minimal NannyML setup looks like:

Auto-scaling cluster, minimum of 1 node, maximum of 3 nodes. Start off at a single node, favoring lower cost over robustness.

Nodes of a “General Purpose” type like the Azure Dsv3 series. For a small number of models, Standard_D2s_v3 and Standard_D4s_v3 are excellent options.
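Because running out of memory fails a workload while hitting CPU limits only slows it down, memory is usually the deciding factor when choosing between these two sizes. The sketch below picks the smallest of the two node types that fits an estimated peak memory footprint. The names `NodeType` and `pick_node_type` and the 50% default headroom are assumptions made for illustration; the vCPU and memory figures are the published specs for these VM sizes. Note that Kubernetes reserves part of each node's memory for system components, which is one reason to keep generous headroom.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NodeType:
    name: str
    vcpus: int
    memory_gib: int


# The two Dsv3 sizes mentioned above, with their published specs.
CANDIDATES = [
    NodeType("Standard_D2s_v3", vcpus=2, memory_gib=8),
    NodeType("Standard_D4s_v3", vcpus=4, memory_gib=16),
]


def pick_node_type(peak_memory_gib: float, headroom: float = 0.5) -> Optional[NodeType]:
    """Return the smallest candidate whose memory covers the peak plus headroom.

    `headroom` is a fraction of the peak (0.5 means 50% extra), an assumed
    safety margin rather than a NannyML recommendation. Returns None when no
    candidate is large enough.
    """
    required = peak_memory_gib * (1 + headroom)
    for node in sorted(CANDIDATES, key=lambda n: n.memory_gib):
        if node.memory_gib >= required:
            return node
    return None
```

A `None` result suggests looking at a larger size in the same series rather than one of the two listed here.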

Pricing simulations for a minimal cluster with a single Standard_D4s_v3 node put the infrastructure cost estimates at about $250/month.
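To run your own estimate for a different setup, the three cost components can be combined in a simple model like the one below. This is a sketch, not the pricing simulation referred to above: the function name `monthly_cost` is hypothetical, every rate is an input you need to look up for your region in the Azure pricing calculator, and 730 hours per month is the usual averaging convention.

```python
HOURS_PER_MONTH = 730  # common convention: 8760 hours per year / 12


def monthly_cost(
    node_hourly_usd: float,
    node_count: int,
    storage_gib: float,
    storage_usd_per_gib_month: float,
    egress_gib: float,
    egress_usd_per_gib: float,
) -> dict:
    """Break an estimated monthly bill into compute, storage, and network parts.

    All rates are caller-supplied assumptions; nothing here is an actual
    Azure price.
    """
    compute = node_hourly_usd * HOURS_PER_MONTH * node_count
    storage = storage_gib * storage_usd_per_gib_month
    network = egress_gib * egress_usd_per_gib
    return {
        "compute": compute,
        "storage": storage,
        "network": network,
        "total": compute + storage + network,
    }
```

With an auto-scaling cluster, evaluating the model at both the minimum and the maximum node count gives a lower and upper bound for the compute component.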