Getting Started

Interact programmatically with NannyML Cloud through its SDK.

NannyML Cloud SDK is a Python package that enables programmatic interaction with NannyML Cloud. It allows you to automate all aspects of NannyML Cloud, including:

Creating a model for monitoring.

Logging inferences for analysis.

Triggering model analysis.

If you prefer a video walkthrough, check out our YouTube guide.

Installation

The package has not been published on PyPI yet, so you cannot install it through the usual Python channels. Instead, install it directly from the repository, for example by passing pip a git+ URL that points to it.
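Because the package is installed from the repository rather than from PyPI, it can help to confirm that the install worked before going further. The sketch below only checks whether a module can be found on the import path; the import name `nannyml_cloud_sdk` is an assumption, so adjust it if the repository's README says otherwise.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module named `module_name` can be imported."""
    # find_spec returns None when no module with this name is on the import path.
    return importlib.util.find_spec(module_name) is not None
```

After a successful install, `is_installed("nannyml_cloud_sdk")` should return True (assuming that is the package's import name).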

Authentication

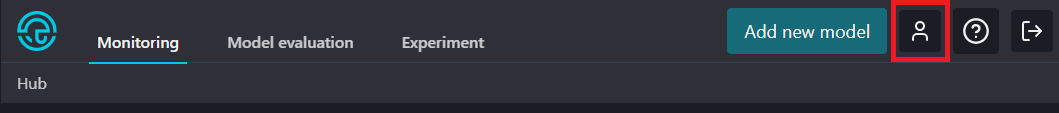

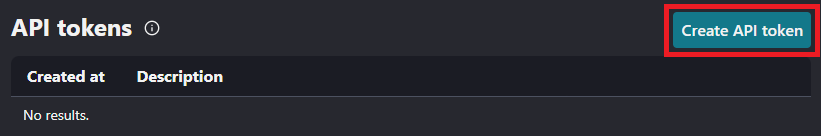

To use the NannyML Cloud SDK, you need to provide the URL of your NannyML Cloud instance and an API token to authenticate. You can obtain an API token on the account settings page of your NannyML Cloud instance.

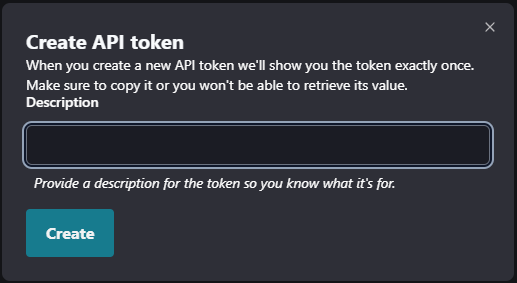

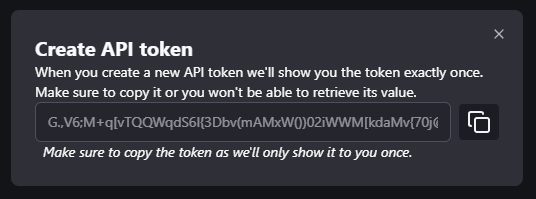

After clicking the create button, you'll be presented with a prompt to enter an optional description for the API token. We recommend describing what you intend to use the token for, so you know which token to revoke later when you no longer need it. Copy the token from the prompt and store it in a secure location.

Once you have an API token, you can use it to authenticate the NannyML Cloud SDK, either by setting the token and URL directly in your Python code or by using environment variables.

We recommend using an environment variable for the API token. This prevents accidentally leaking any token associated with your personal account when sharing code.
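The environment-variable approach can be sketched as follows. The variable names `NML_SDK_URL` and `NML_SDK_API_TOKEN`, the import name `nannyml_cloud_sdk`, and the module attributes `url` and `api_token` are all assumptions here; confirm them in the SDK's README. The helper fails fast with a readable message instead of letting an unauthenticated request fail later, and setting the attributes explicitly keeps the dependency on the environment visible in the code.

```python
import os
from typing import Mapping, Tuple

# Assumed environment variable names; confirm them in the SDK documentation.
URL_VAR = "NML_SDK_URL"
TOKEN_VAR = "NML_SDK_API_TOKEN"


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the NannyML Cloud URL and API token, failing fast if either is missing."""
    url = env.get(URL_VAR, "").strip()
    token = env.get(TOKEN_VAR, "").strip()
    missing = [name for name, value in ((URL_VAR, url), (TOKEN_VAR, token)) if not value]
    if missing:
        raise RuntimeError("Missing environment variable(s): " + ", ".join(missing))
    return url, token


def configure_sdk(sdk=None) -> None:
    """Apply the credentials to the SDK module (assumed `url` / `api_token` attributes)."""
    if sdk is None:
        import nannyml_cloud_sdk as sdk  # assumed import name of the SDK package
    sdk.url, sdk.api_token = load_credentials()
```

Call `configure_sdk()` once at the start of a script, and keep the token itself in your shell profile or CI secret store rather than in the code.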

Example

The following steps show how to set up the monitoring data and create a model in NannyML Cloud to start monitoring it.

To run the example, we use the synthetic dataset included in the NannyML OSS library, where the model predicts whether a customer will repay a loan to buy a car. Check out the Car Loan Dataset documentation to learn more about this dataset.

Step 1: Authenticate and load data
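A minimal sketch of the data-loading part of this step, assuming the open-source nannyml package is installed alongside the SDK. Its `load_synthetic_car_loan_dataset` function returns the reference data, the analysis data without targets, and the analysis targets as three separate DataFrames; the targets can be kept aside until ground truth is added in Step 5. Authentication works as described in the Authentication section. The `missing_in_analysis` helper is hypothetical, an optional sanity check that is not part of either library, and `repaid` is assumed to be the target column.

```python
def load_car_loan_data():
    """Load the synthetic car loan dataset bundled with the NannyML OSS library."""
    import nannyml as nml  # open-source library, installed separately from the SDK

    # Returns (reference data, analysis data without targets, analysis targets).
    return nml.load_synthetic_car_loan_dataset()


def missing_in_analysis(reference_columns, analysis_columns, target_column="repaid"):
    """List reference columns, other than the target, that the analysis data lacks."""
    available = set(analysis_columns)
    return [c for c in reference_columns if c != target_column and c not in available]
```

Typical use: `reference_data, analysis_data, analysis_targets = load_car_loan_data()`, then `missing_in_analysis(reference_data.columns, analysis_data.columns)` should return an empty list.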

Step 2: Set up the model schema

We use the Schema class together with the from_df method to set up a schema from the reference data.

In this case, we define the problem as 'BINARY_CLASSIFICATION', but other options such as 'MULTICLASS_CLASSIFICATION' and 'REGRESSION' are also possible.

More info about the Schema class can be found in its API reference.
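A sketch of this step. Schema.from_df is the method named above, but the positional order and the `target_column_name` keyword are assumptions to verify against the Schema API reference; `repaid` is assumed to be the car loan target column. The SDK module is an optional argument only so the function can be exercised against a stand-in object without a live instance; `build_schema` itself is a hypothetical helper.

```python
PROBLEM_TYPES = ("BINARY_CLASSIFICATION", "MULTICLASS_CLASSIFICATION", "REGRESSION")


def build_schema(reference_data, problem_type="BINARY_CLASSIFICATION",
                 target_column="repaid", sdk=None):
    """Derive a model schema from the reference data via the SDK."""
    # Reject unsupported problem types before contacting the server.
    if problem_type not in PROBLEM_TYPES:
        raise ValueError(f"Unsupported problem type: {problem_type!r}")
    if sdk is None:
        import nannyml_cloud_sdk as sdk  # assumed import name
    # Assumed call shape: problem type, reference DataFrame, then column overrides.
    return sdk.Schema.from_df(problem_type, reference_data, target_column_name=target_column)
```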

Step 3: Create the model

We create a new model using the create method, where we can define settings such as how the data should be chunked and the key performance metric.

More info about the Model class can be found in its API reference.
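A sketch of model creation. Model.create is the method named above; the keyword names (`name`, `schema`, `chunk_period`, `reference_data`, `analysis_data`, `main_performance_metric`) and the values 'MONTHLY' and 'F1' are assumptions chosen to match the options the text mentions, chunking and the key performance metric. The model name is hypothetical. Check the Model API reference for the exact parameters.

```python
def create_model(schema, reference_data, analysis_data, name="Example model",
                 chunk_period="MONTHLY", metric="F1", sdk=None):
    """Create a monitored model in NannyML Cloud and upload its data."""
    if sdk is None:
        import nannyml_cloud_sdk as sdk  # assumed import name
    # Assumed keyword names. The full reference data is uploaded here,
    # so this call can take noticeably longer than schema inspection.
    return sdk.Model.create(
        name=name,
        schema=schema,
        chunk_period=chunk_period,       # how the data is split into chunks
        reference_data=reference_data,
        analysis_data=analysis_data,
        main_performance_metric=metric,  # the key performance metric
    )
```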

You may wonder why reference_data is passed twice, once to derive the schema and once to create the model. The two steps handle it differently: during schema inspection, only a few rows (100, to be precise) are sent to the NannyML Cloud server to derive the schema, whereas creating the model uploads the entire dataset, which takes longer.

Step 4: Ensure continuous monitoring

The previous three steps let you monitor an ML model on the analysis data you provided. But once new production data becomes available, you will likely want to know how your model performs on it.

You can load any previously created model by searching for it by name. Then it is a matter of loading the new model predictions, adding them to the model with the add_analysis_data method, and triggering a new monitoring run.
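A sketch of this workflow. add_analysis_data and Run.trigger are the methods named in this section; looking the model up with `Model.list(name=...)`, identifying it through an `'id'` key, and the argument order are assumptions to check against the API reference. `monitor_new_inferences` and the default model name are hypothetical.

```python
def monitor_new_inferences(new_inferences, model_name="Example model", sdk=None):
    """Upload new production inferences for an existing model and start a run."""
    if sdk is None:
        import nannyml_cloud_sdk as sdk  # assumed import name
    # Assumed lookup by name; refuse to guess if zero or several models match.
    matches = list(sdk.Model.list(name=model_name))
    if len(matches) != 1:
        raise LookupError(f"Expected one model named {model_name!r}, found {len(matches)}")
    model_id = matches[0]["id"]  # assumed: models come back as dicts with an 'id' key
    sdk.Model.add_analysis_data(model_id, new_inferences)
    # Start a monitoring run so the newly added data is analyzed.
    return sdk.Run.trigger(model_id)
```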

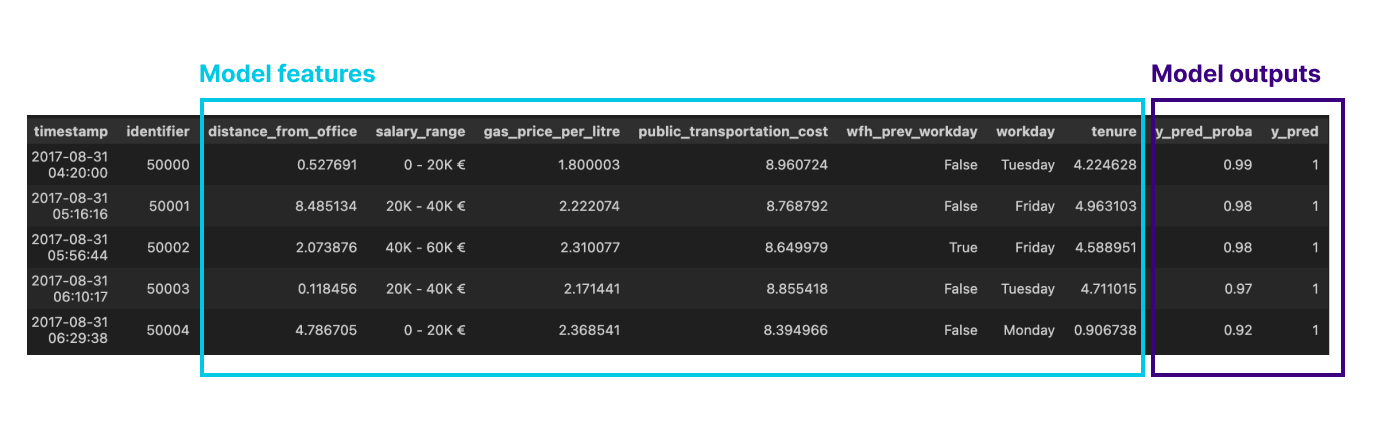

new_inferences can be a dataset with several new model inferences:

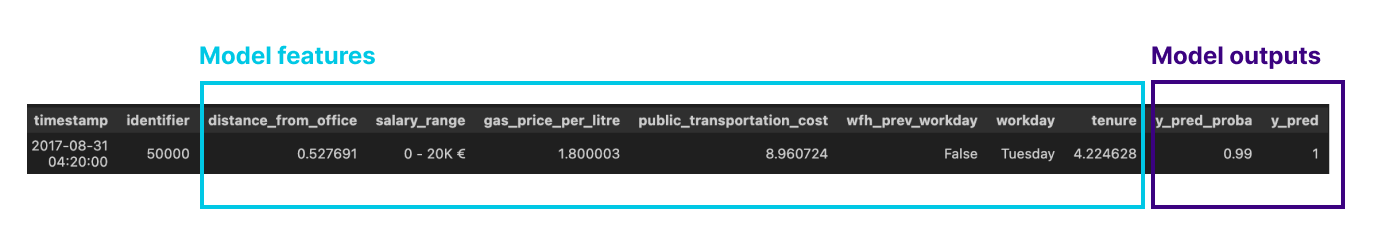

It can also be a single observation.
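For a single observation, one option is to build a one-row record and convert it to a DataFrame before calling add_analysis_data. The column names below are an assumption based on the car loan dataset without its target; in practice they must match your model's schema. `single_observation` is a hypothetical helper that only guards against missing or unexpected fields.

```python
# Assumed analysis columns of the car loan dataset (the target is excluded).
ANALYSIS_COLUMNS = (
    "id", "timestamp", "car_value", "salary_range", "debt_to_income_ratio",
    "loan_length", "repaid_loan_on_prev_car", "size_of_downpayment",
    "driver_tenure", "y_pred_proba", "y_pred",
)


def single_observation(**values):
    """Return a one-row list of records containing exactly the expected columns."""
    missing = [c for c in ANALYSIS_COLUMNS if c not in values]
    unexpected = sorted(set(values) - set(ANALYSIS_COLUMNS))
    if missing or unexpected:
        raise ValueError(f"missing={missing}, unexpected={unexpected}")
    # Keep a stable column order so the row lines up with the schema.
    return [{c: values[c] for c in ANALYSIS_COLUMNS}]
```

`pandas.DataFrame(single_observation(...))` then yields a one-row DataFrame that can be passed as new_inferences.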

It is also worth noting that you can trigger a monitoring run whenever you want (e.g., after adding 1000 observations) by calling the trigger method from the Run class.
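One way to trigger a run only after enough new observations have arrived is a small counter around the two calls. This is a hypothetical helper, not part of the SDK: `upload` and `trigger` are any callables, and the default threshold of 1000 mirrors the example above. Each batch is uploaded immediately, while runs are batched.

```python
from typing import Callable, Sized


class RunScheduler:
    """Upload every batch right away, but trigger a run only every `threshold` observations."""

    def __init__(self, upload: Callable[[Sized], None], trigger: Callable[[], None],
                 threshold: int = 1000):
        if threshold <= 0:
            raise ValueError("threshold must be positive")
        self.upload = upload
        self.trigger = trigger
        self.threshold = threshold
        self.pending = 0  # observations uploaded since the last triggered run

    def add(self, batch: Sized) -> bool:
        """Upload a batch; return True if this call triggered a run."""
        self.upload(batch)
        self.pending += len(batch)
        if self.pending >= self.threshold:
            self.trigger()
            # The run covers everything uploaded so far, so the counter restarts at zero.
            self.pending = 0
            return True
        return False
```

With the SDK, `upload` could be `lambda df: nml_sdk.Model.add_analysis_data(model_id, df)` and `trigger` could be `lambda: nml_sdk.Run.trigger(model_id)`, where the argument order is an assumption and `len(df)` counts the rows of a DataFrame.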

Step 5 (optional): Add delayed ground truth data

If ground truth becomes available at some point in the future, you can add it to NannyML Cloud using the add_analysis_target_data method of the Model class.
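A sketch of this step. add_analysis_target_data is the method named above; passing the model identifier and a DataFrame that pairs each observation's `id` with its `repaid` target is an assumption based on the car loan dataset, whose OSS loader returns such targets as its third value. `add_ground_truth` is a hypothetical helper, and the column check is an illustrative guard.

```python
REQUIRED_TARGET_COLUMNS = ("id", "repaid")  # assumed identifier and target columns


def add_ground_truth(model_id, targets, sdk=None):
    """Upload delayed ground truth for observations already sent as analysis data."""
    missing = [c for c in REQUIRED_TARGET_COLUMNS if c not in targets.columns]
    if missing:
        raise ValueError(f"Target data is missing columns: {missing}")
    if sdk is None:
        import nannyml_cloud_sdk as sdk  # assumed import name
    # Assumed argument order: model identifier first, then the target DataFrame.
    sdk.Model.add_analysis_target_data(model_id, targets)
```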
