Concept drift

The Concept drift dashboard enables you to analyze the impact and magnitude of concept drift. To calculate these results, NannyML Cloud requires the analysis set to include a ground truth column.

Note: Concept drift detection is currently supported only for binary classification use cases. For support with regression use cases, please contact us through our website.


The following sections describe each element of the concept drift page in detail:

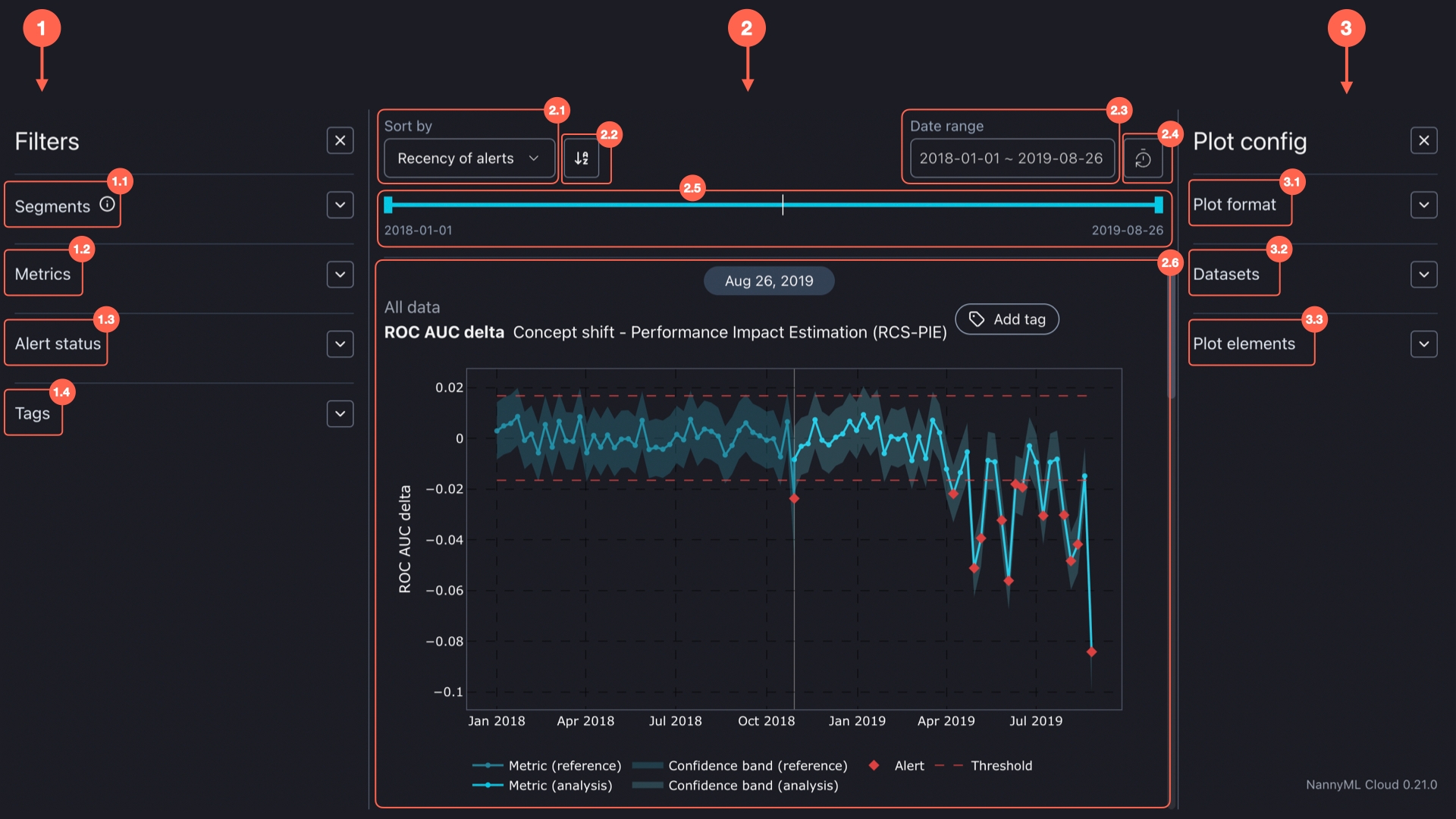

The Concept drift dashboard consists of three main components:

1. Filters

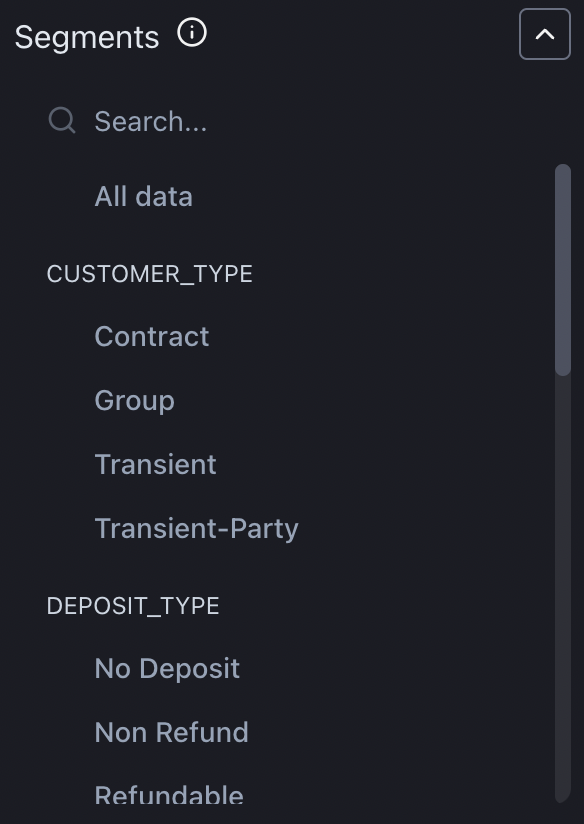

Segmentation allows you to split your data into groups and analyze them separately.

For a given model, every column selected for segmentation, either during model configuration or later in the model settings, appears under the segmentation filter. A segment is created for each distinct value in that column.

In the filter section, you can select the segments you want to see visualized. You can also select All data to visualize results for the entire dataset.
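To illustrate how segments follow from a segmentation column, here is a minimal plain-Python sketch that creates one segment per distinct value of a column and adds an "All data" group holding every row. It is not NannyML Cloud's implementation; the row layout, the `region` column, and the `build_segments` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical analysis rows; "region" stands in for a column selected for segmentation.
rows = [
    {"region": "EU", "y_pred": 1, "y_true": 1},
    {"region": "US", "y_pred": 0, "y_true": 1},
    {"region": "EU", "y_pred": 0, "y_true": 0},
    {"region": "APAC", "y_pred": 1, "y_true": 0},
]


def build_segments(rows, column):
    """Hypothetical helper: one segment per distinct value of `column`, plus "All data"."""
    segments = defaultdict(list)
    for row in rows:
        segments[f"{column}={row[column]}"].append(row)
    # "All data" covers the entire dataset, independent of segmentation.
    segments["All data"] = list(rows)
    return dict(segments)


segments = build_segments(rows, "region")
for name, members in segments.items():
    print(name, len(members))
```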

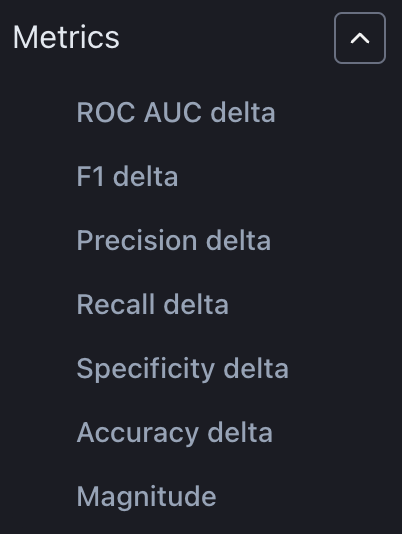

Select which metrics to display on the performance impact chart. In addition, a magnitude metric shows the magnitude of concept drift over specific periods.

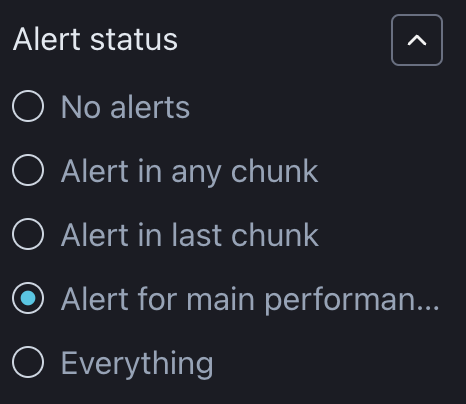

Choose which metrics to display based on their alert status: metrics with no alerts, metrics with alerts in any chunk, metrics with alerts in the last chunk, the performance metric with the main tag when it has alerts, or all charts regardless of whether or when alerts occurred.

Filter charts by the previously specified tags.
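The alert-status and tag filters can be thought of as simple predicates applied to each chart. The sketch below assumes a hypothetical `Chart` record that stores one alert flag per chunk and a set of tags; the mode names (`"no_alerts"`, `"any_chunk"`, `"last_chunk"`, `"all"`) are invented for illustration, the main-tag option is not modelled, and the "match any selected tag" rule is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Chart:
    """Hypothetical chart record: one alert flag per chunk (oldest first) and its tags."""
    metric: str
    alerts: list[bool]
    tags: set[str] = field(default_factory=set)


def filter_by_alerts(charts, mode):
    """Keep the charts that match a hypothetical alert-status mode."""
    if mode == "all":
        return list(charts)
    if mode == "no_alerts":
        return [c for c in charts if not any(c.alerts)]
    if mode == "any_chunk":
        return [c for c in charts if any(c.alerts)]
    if mode == "last_chunk":
        return [c for c in charts if c.alerts and c.alerts[-1]]
    raise ValueError(f"unknown alert mode: {mode}")


def filter_by_tags(charts, tags):
    """Keep the charts that carry at least one of the requested tags (assumed rule)."""
    wanted = set(tags)
    return [c for c in charts if c.tags & wanted]


charts = [
    Chart("accuracy", [False, False, True], {"main"}),
    Chart("f1", [False, False, False]),
    Chart("magnitude", [True, False, False], {"drift"}),
]
print([c.metric for c in filter_by_alerts(charts, "last_chunk")])
```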

2. Visualizations

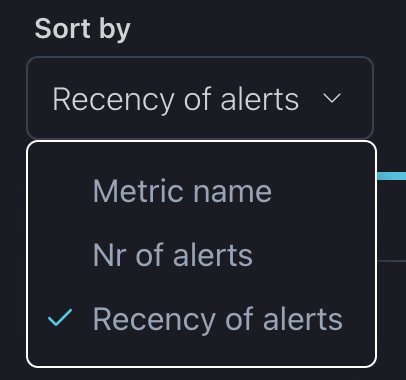

You can sort the charts by metric name, number of alerts, or recency of alerts.

For every sorting method, an icon toggles between ascending and descending order. Which icon is displayed depends on the selected sorting method.

Metric Name: The icon toggles between alphabetical order and reverse alphabetical order. The default mode is alphabetical order.

Nr of Alerts: The icon toggles between ascending and descending order based on the number of alerts. The default mode displays plots with the most alerts first.

Recency of Alerts: The icon toggles between showing newer alerts first and older alerts first. The default mode shows the most recent alerts first.
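A minimal sketch of the three sorting methods and their default orders, assuming a hypothetical `ChartSummary` record that lists the dates of alerting chunks. The method names and the choice to rank charts without alerts as the oldest under recency sorting are assumptions, not documented dashboard behaviour.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class ChartSummary:
    """Hypothetical per-chart summary: metric name plus dates of alerting chunks."""
    metric: str
    alert_dates: list[date] = field(default_factory=list)


def sort_charts(charts, method, reverse_toggle=False):
    """Sort charts by "metric_name", "nr_of_alerts", or "recency".

    Defaults follow the dashboard description: alphabetical, most alerts first,
    most recent alert first. reverse_toggle=True flips the default order.
    """
    if method == "metric_name":
        key, descending = (lambda c: c.metric.lower()), False
    elif method == "nr_of_alerts":
        key, descending = (lambda c: len(c.alert_dates)), True
    elif method == "recency":
        # Assumption: charts without alerts rank as oldest (date.min).
        key, descending = (lambda c: max(c.alert_dates, default=date.min)), True
    else:
        raise ValueError(f"unknown sorting method: {method}")
    return sorted(charts, key=key, reverse=descending != reverse_toggle)


charts = [
    ChartSummary("precision", [date(2024, 1, 5)]),
    ChartSummary("accuracy", [date(2024, 1, 1), date(2024, 2, 1)]),
    ChartSummary("f1"),
]
print([c.metric for c in sort_charts(charts, "nr_of_alerts")])
```

Here `reverse_toggle=True` plays the role of the toggle icon: it flips whichever default order the selected method uses.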

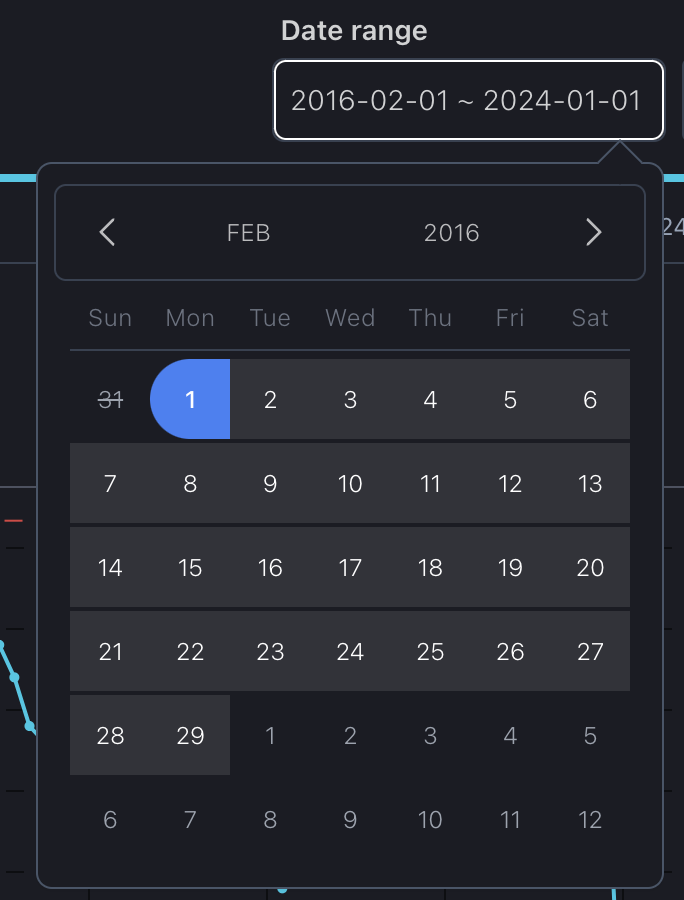

You can select a specific period of interest; the selection applies to all charts.

As an alternative to entering a date range, you can choose the period by moving the date slider.

To reset the selected period, whether it was set with the date range or the slider, press the "Reset" button.

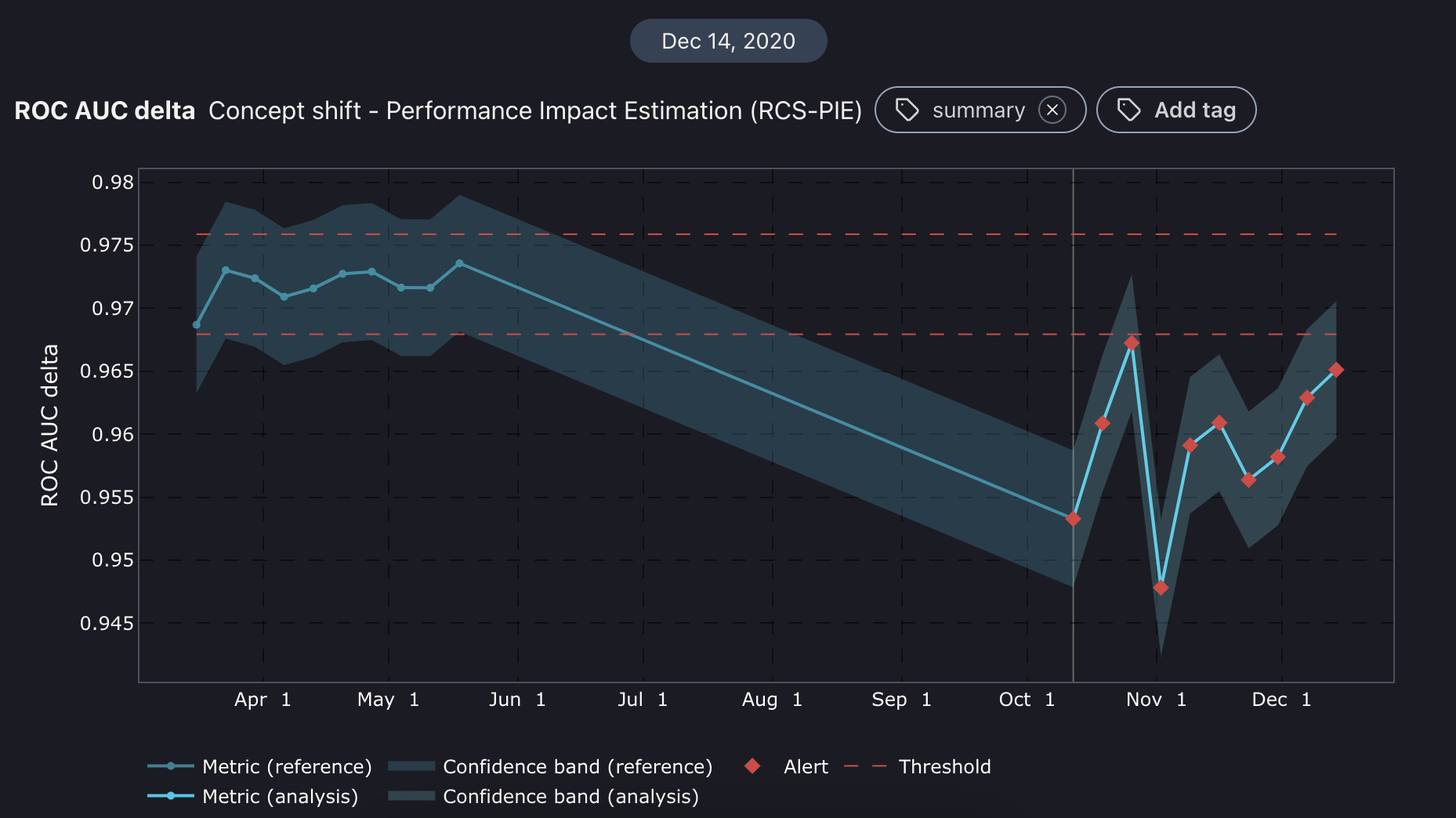

The charts are interactive: hover over them to see more details. Red dotted lines indicate the thresholds, the blue line shows the metric during the reference period, and the light blue line shows it during the analysis period. The lightly shaded area around the performance impact estimation is the metric's confidence band.

You can also zoom in on any part of a chart: press and hold the mouse button, then drag a rectangle over the area of interest. To reset the zoom, double-click the chart.

3. Plot config

There are two plot formats: line and step. A line plot connects consecutive points with straight lines to show trends, while a step plot uses horizontal and vertical segments, holding each value until the next point, so the exact changes between points are clearly visible.
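The difference between the two formats comes down to how values between chunk points are drawn. This sketch assumes a step plot that holds each value horizontally until the next point and then jumps vertically, and computes what each format would show between two points; `line_value` and `step_value` are hypothetical helpers, not part of NannyML Cloud.

```python
def line_value(xs, ys, x):
    """Line plot: linear interpolation between neighbouring points."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x outside data range")


def step_value(xs, ys, x):
    """Step plot (assumed horizontal-then-vertical): hold each value until the next point."""
    for i in range(len(xs) - 1):
        if xs[i] <= x < xs[i + 1]:
            return ys[i]
    if x == xs[-1]:
        return ys[-1]
    raise ValueError("x outside data range")


# Hypothetical metric values for three consecutive chunks.
xs = [0, 1, 2]
ys = [0.80, 0.90, 0.70]
print(line_value(xs, ys, 0.5), step_value(xs, ys, 0.5))
```

Halfway between the first two chunks, the line plot shows an interpolated value, whereas the step plot still shows the first chunk's value until the jump at the second chunk.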

Select which dataset to show: zoom in on the reference or analysis data, or display both in separate subplots.

Toggle chart components such as alerts, confidence bands, thresholds, and legends on or off.