☂️ Introduction

Monitor what matters, find what is broken, and fix it.

What is NannyML Cloud?

NannyML Cloud is a machine learning monitoring platform to monitor, analyze, and improve ML models in production.

To monitor today’s and tomorrow’s ML models properly, we need solutions that frame the monitoring problem better, offer full coverage, and monitor the model itself, not just its features. For this reason, NannyML Cloud focuses on model performance estimation and takes a performance-centric approach to monitoring, which works as follows.

The NannyML way

1. Monitor what matters

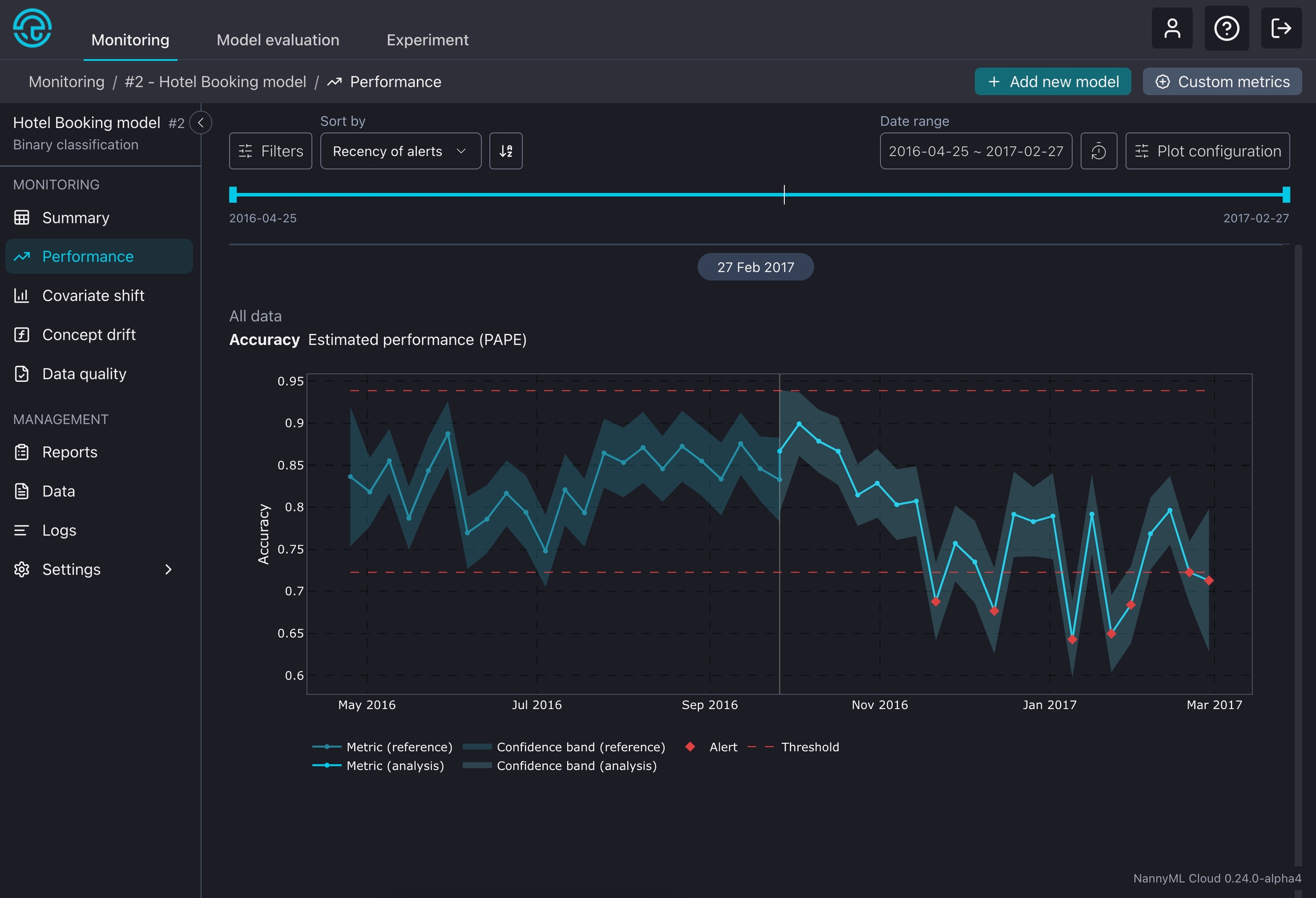

Focus on a single metric to know how your model is performing, and get alerted when its performance drops.

Estimate model performance: Know the performance of your ML models 24/7. NannyML estimates the performance of your ML models even if the ground truth is delayed or absent.

Measure the business impact of your models: Tie the performance of your model to monetary or business-oriented outcomes, so that you always know what your ML brings to the table.

Avoid alert fatigue: Traditional ML monitoring tends to overwhelm teams with many false alarms. By focusing on what matters, NannyML alerts are always meaningful.
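To make the first two points above more concrete, here is a minimal sketch of how performance can be estimated without ground truth, assuming a binary classifier that outputs well-calibrated probabilities. It illustrates the general confidence-based idea behind estimators such as CBPE and is not NannyML's implementation. The function names and the monetary value assigned to each outcome are hypothetical.

```python
def expected_confusion_matrix(probabilities, threshold=0.5):
    """Expected TP/FP/FN/TN counts from calibrated positive-class probabilities."""
    cells = {"tp": 0.0, "fp": 0.0, "fn": 0.0, "tn": 0.0}
    for p in probabilities:
        if p >= threshold:
            # Predicted positive: correct with probability p.
            cells["tp"] += p
            cells["fp"] += 1.0 - p
        else:
            # Predicted negative: correct with probability 1 - p.
            cells["fn"] += p
            cells["tn"] += 1.0 - p
    return cells


def estimated_accuracy(probabilities, threshold=0.5):
    """Estimated accuracy, computed without any ground-truth labels."""
    if not probabilities:
        raise ValueError("need at least one prediction")
    cells = expected_confusion_matrix(probabilities, threshold)
    return (cells["tp"] + cells["tn"]) / len(probabilities)


def estimated_business_value(probabilities, value_per_outcome, threshold=0.5):
    """Weight each expected confusion-matrix cell by its (hypothetical) value."""
    cells = expected_confusion_matrix(probabilities, threshold)
    return sum(cells[name] * value_per_outcome[name] for name in cells)


# Hypothetical scores and outcome values, for illustration only.
scores = [0.9, 0.8, 0.3, 0.1]
values = {"tp": 100.0, "fp": -20.0, "fn": -50.0, "tn": 0.0}
accuracy = estimated_accuracy(scores)
business_value = estimated_business_value(scores, values)
```

The estimate is only as good as the calibration of the scores, which is why this sketch states calibration as an explicit assumption.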

2. Find what is broken

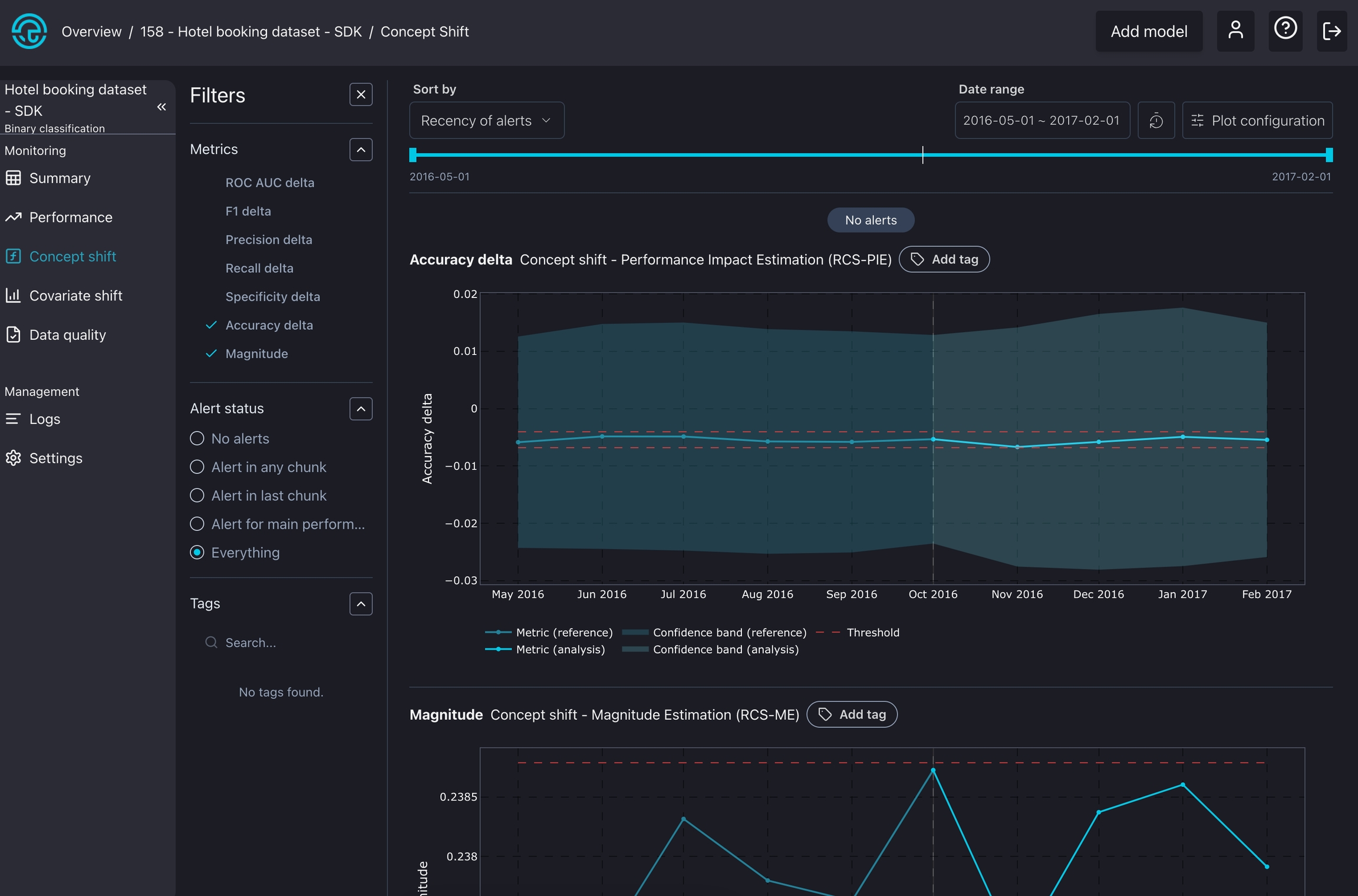

If something is broken, understand the underlying causes by correlating performance issues with data drift alerts and concept drift variations. This approach provides an actionable path for solving model performance issues.

Measure the impact of concept drift on model performance: Our concept drift algorithms use the latest ground-truth data to check whether a change in performance is due to a change of concept.

Uncover the most subtle changes in the data structure: Leverage our multivariate drift detection method to uncover changes that univariate approaches cannot detect.

Go on a granular investigation: Apply univariate drift detection methods to investigate the model's features one by one and easily find which ones correlate with the performance changes.

Intelligent alert ranking: NannyML links data drift alerts back to changes in model performance, so you can easily detect which features are causing the performance issues.

Segment your data for better interpretability: Use segmentation to divide a dataset into meaningful subgroups, allowing you to monitor and analyze specific subsets of the population more effectively.
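As an illustration of the univariate investigation described above, the sketch below compares each feature's distribution in a reference period with a later analysis period and ranks features by how far they have drifted. It assumes numeric features and uses the Jensen-Shannon distance over equal-width bins, which is one common choice. The source does not state which methods NannyML Cloud applies, and all function names here are hypothetical.

```python
import math


def binned_distribution(values, low, high, bins):
    """Share of values in each of `bins` equal-width bins over [low, high]."""
    counts = [0] * bins
    width = (high - low) / bins
    for v in values:
        index = min(int((v - low) / width), bins - 1)  # clamp the maximum value
        counts[index] += 1
    return [c / len(values) for c in counts]


def jensen_shannon_distance(p, q):
    """Jensen-Shannon distance (base 2): 0 for identical, 1 for disjoint."""
    def kl(a, b):
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    divergence = (kl(p, m) + kl(q, m)) / 2
    return math.sqrt(max(0.0, divergence))  # guard against rounding below zero


def feature_drift(reference, analysis, bins=5):
    """Drift of one numeric feature between two periods."""
    low = min(min(reference), min(analysis))
    high = max(max(reference), max(analysis))
    if low == high:
        return 0.0  # the feature is constant in both periods
    p = binned_distribution(reference, low, high, bins)
    q = binned_distribution(analysis, low, high, bins)
    return jensen_shannon_distance(p, q)


def rank_features(reference, analysis, bins=5):
    """Features sorted from most to least drifted."""
    drift = {name: feature_drift(reference[name], analysis[name], bins)
             for name in reference}
    return sorted(drift.items(), key=lambda item: item[1], reverse=True)


# Hypothetical data: "income" shifts entirely, "age" does not change.
reference = {"age": [20, 30, 40, 50], "income": [1, 2, 3, 4]}
analysis = {"age": [20, 30, 40, 50], "income": [11, 12, 13, 14]}
ranking = rank_features(reference, analysis)
```

A ranking like this only shows which features moved. Linking that movement to a performance change still requires comparing it with the performance results over the same period.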

3. Fix it

Once you understand what went wrong, you can decide whether the model is worth retraining or refactoring, or whether another resolution strategy is needed.

Retrain only when necessary: Use the NannyML Cloud SDK to automate monitoring data ingestion, and use webhooks to trigger retraining pipelines when the estimated performance drops.
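The retraining trigger described above could be wired up roughly as follows. This is a sketch of the decision logic only: the `PerformanceAlert` fields are hypothetical and do not reflect the actual NannyML Cloud webhook payload, and `trigger_pipeline` stands in for whatever starts your own retraining job.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PerformanceAlert:
    # Hypothetical fields; the real webhook payload may look different.
    model_name: str
    metric: str
    estimated_value: float
    lower_threshold: float


def should_retrain(alert: PerformanceAlert, tolerance: float = 0.0) -> bool:
    """Retrain only if the estimate falls below the threshold by more than `tolerance`."""
    return alert.estimated_value < alert.lower_threshold - tolerance


def handle_alert(alert: PerformanceAlert,
                 trigger_pipeline: Callable[[str], None]) -> bool:
    """Call the retraining pipeline when needed; report whether it was triggered."""
    if should_retrain(alert):
        trigger_pipeline(alert.model_name)
        return True
    return False


# Usage with a stand-in pipeline that just records which models were retrained.
retrained = []
dropped = PerformanceAlert("churn-model", "roc_auc", 0.71, 0.75)
healthy = PerformanceAlert("churn-model", "roc_auc", 0.80, 0.75)
first = handle_alert(dropped, retrained.append)
second = handle_alert(healthy, retrained.append)
```

Keeping the decision in a small pure function makes it easy to test the retraining policy separately from the web framework that receives the webhook.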

How to set up NannyML Cloud?

NannyML Cloud is available on both the Azure and AWS marketplaces, and you can deploy it in two different ways, depending on your needs.

Managed Application: With the Managed Application, no data leaves your environment. This option provisions the NannyML Cloud components and the required infrastructure within your Azure or AWS subscription. To learn more, check out the docs on how to set up NannyML Cloud on Azure and AWS.

NannyML OSS vs NannyML Cloud

NannyML Cloud, built on our open-source NannyML library, adds new features and algorithms into an all-in-one monitoring platform.

| Feature | NannyML OSS | NannyML Cloud |
| --- | --- | --- |
| Performance estimation - CBPE and DLE | ✅ | ✅ |
| PAPE - 10% better performance estimations than CBPE | ❌ | ✅ |
| Concept shift detection | ❌ | ✅ |
| Covariate shift detection | ✅ | ✅ |
| Data quality checks | ✅ | ✅ |
| Intelligent alerting | ✅ | ✅ |
| Interactive visualizations | ✅ | ✅ |
| Slack and email notifications | ❌ | ✅ |
| Customizable dashboards | ❌ | ✅ |
| Programmatic data collection | ❌ | ✅ |
| Metric storage | ❌ | ✅ |
| Scheduling monitoring runs | ❌ | ✅ |
| Segmentation | ❌ | ✅ |

Where to go next?

Here, you can find several helpful guides to aid with onboarding.

🏃♂️ Quickstart

Get started by investigating a simple use case

Find out how to use NannyML Cloud

🧑💻 Tutorials

Explore using NannyML Cloud with tabular, text, and image data

Learn how to deploy NannyML Cloud on Azure and AWS

</> SDK

Learn how to interact with NannyML Cloud via code

👷♂️ Miscellaneous

Learn how the NannyML Cloud model monitoring works under the hood