Version 0.23.0

Release Notes

We're proud to bring you our latest release, 0.23.0!

We've worked hard on a new product feature that unlocks huge potential within NannyML Cloud: custom metrics!

Custom metrics support

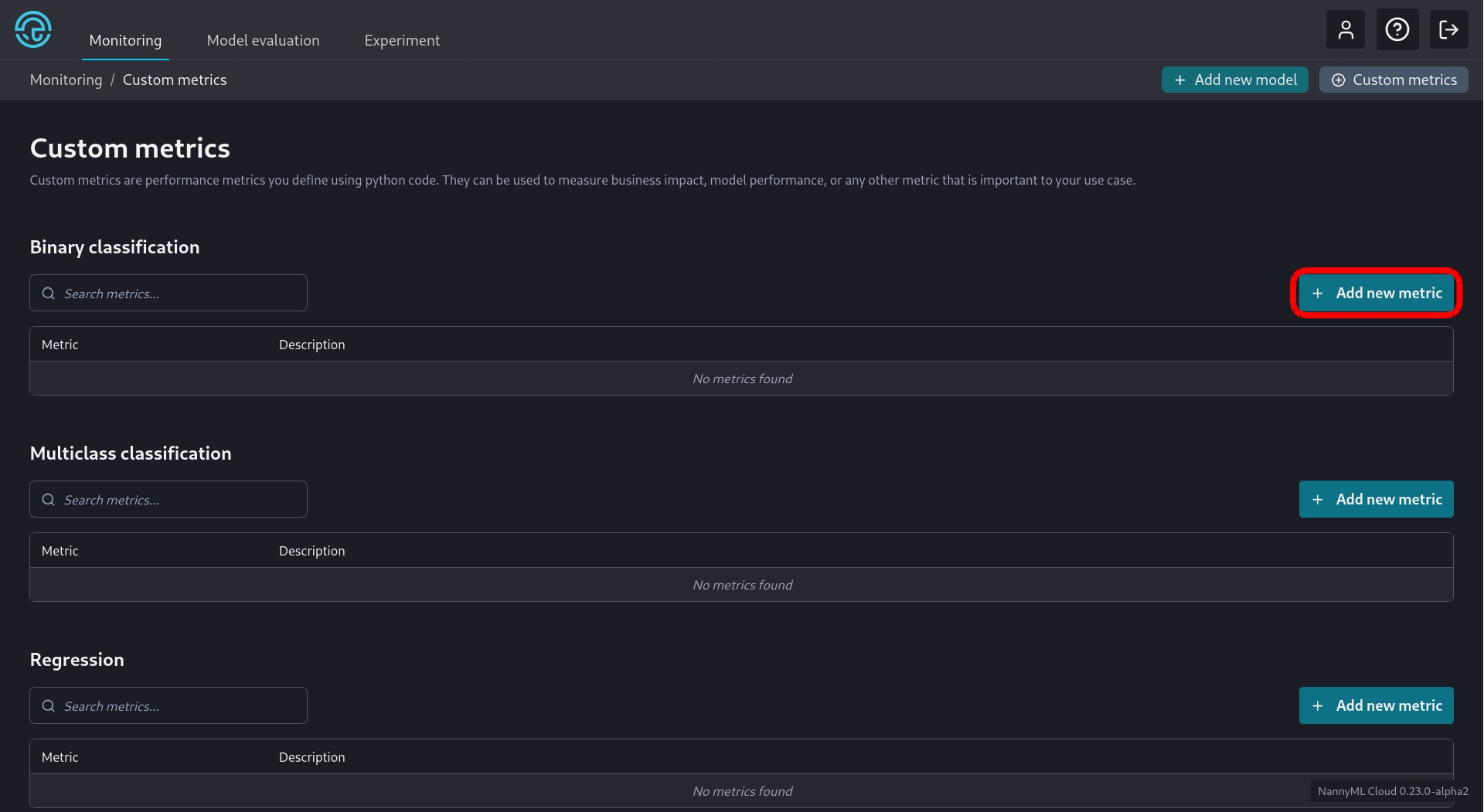

NannyML Cloud includes a suite of performance metrics, but sometimes your use case requires a very specific one. The newly added support for custom metrics allows you to provide us with an implementation of your very own metric, so we can plug it into our algorithms for performance calculation and estimation. We support custom metrics for binary classification, multiclass classification and regression models.

If you are already monitoring models using NannyML Cloud, you can easily add new custom metrics to the monitoring workflow! In the custom metrics overview, you can create a new custom metric.

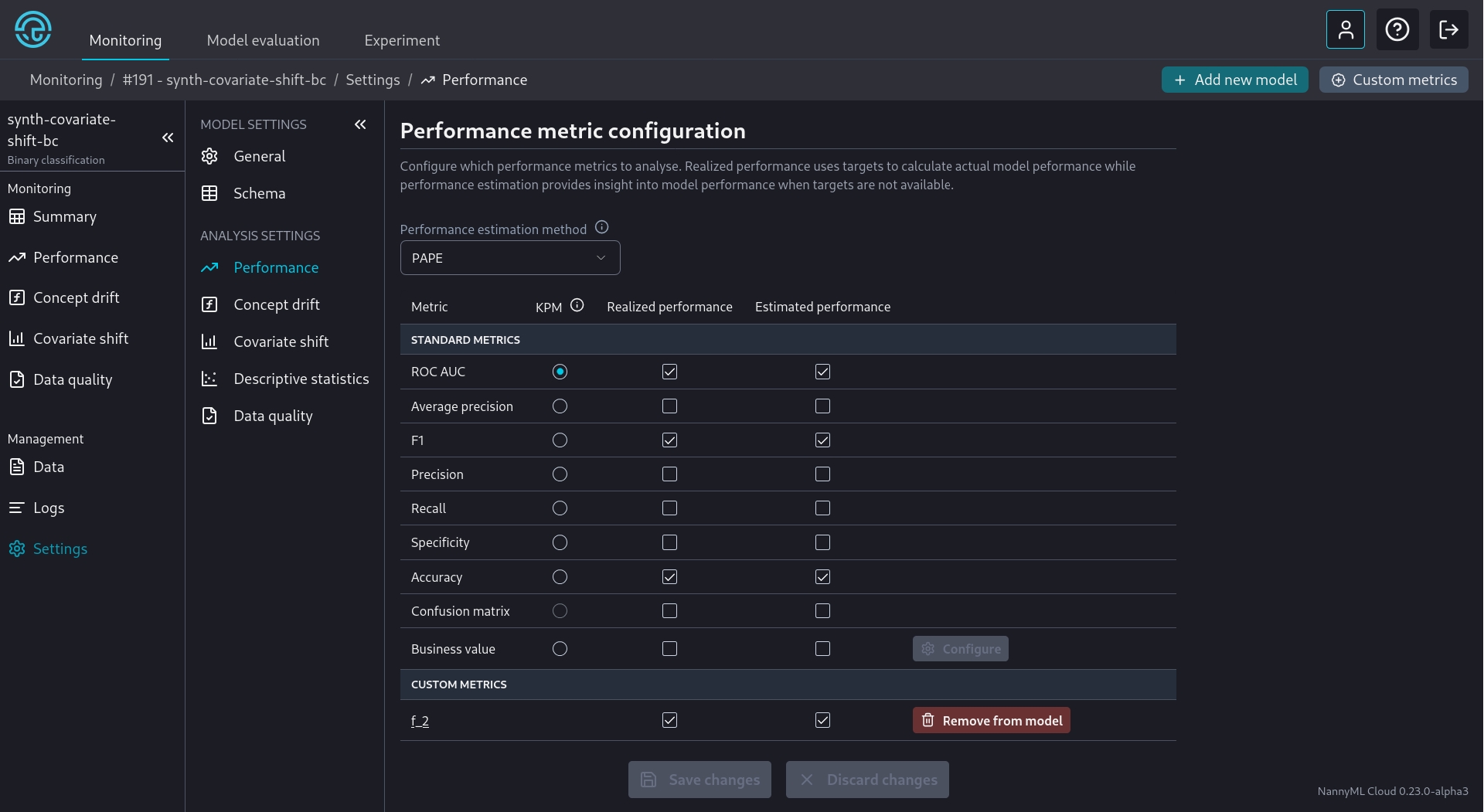

For custom classification metrics, you'll have to provide one function to calculate the realized performance based on your model predictions, targets or any column available in NannyML Cloud. You can optionally provide an estimation function as well, which will then be plugged into our estimation algorithms.
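To make the two functions more concrete, here is a minimal sketch of a cost-based metric for binary classification. It is an illustration only: the function names `calculate` and `estimate`, their parameters, the cost constants, and the use of plain Python sequences are all assumptions made for this example, not the signature NannyML Cloud expects. The Creating Custom Metrics guide describes the actual interface.

```python
from typing import Sequence

# Hypothetical business costs, chosen only for illustration.
FALSE_POSITIVE_COST = 1.0
FALSE_NEGATIVE_COST = 5.0


def calculate(y_true: Sequence[int], y_pred: Sequence[int], **kwargs) -> float:
    """Realized performance: total cost of errors, computed from targets and predictions."""
    false_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    false_negatives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return false_positives * FALSE_POSITIVE_COST + false_negatives * FALSE_NEGATIVE_COST


def estimate(positive_probabilities: Sequence[float], y_pred: Sequence[int], **kwargs) -> float:
    """Estimated performance: expected cost when targets are not available.

    Assumes `positive_probabilities` holds calibrated probabilities that the
    true label is 1 (an assumption of this sketch).
    """
    expected_cost = 0.0
    for prob, pred in zip(positive_probabilities, y_pred):
        if pred == 1:
            # A positive prediction is wrong with probability (1 - prob).
            expected_cost += (1.0 - prob) * FALSE_POSITIVE_COST
        else:
            # A negative prediction is wrong with probability prob.
            expected_cost += prob * FALSE_NEGATIVE_COST
    return expected_cost
```

The calculation function needs targets, while the estimation function replaces each observed error with its expected value, which is why it can run before targets arrive.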

Once the custom metric has been created, you can assign it to your model.

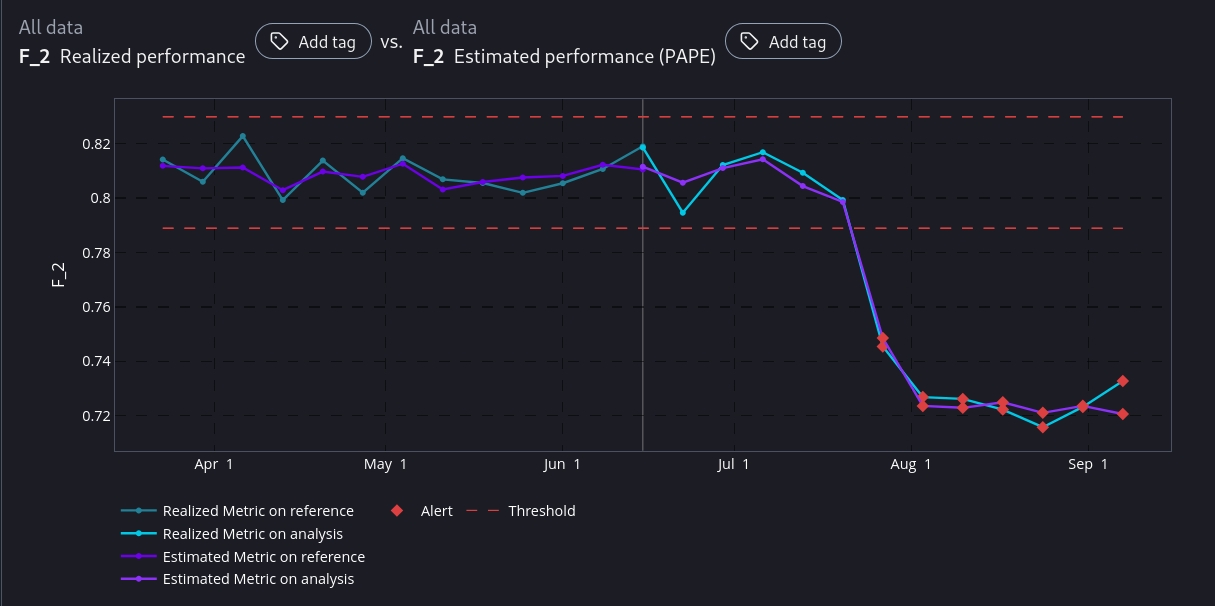

The next time we calculate the model metrics, the custom metric will be included. You'll be able to see the results in the performance pane of the web application. You can also add it to your model dashboard or use it as a key performance metric!

We've written a lot of documentation to get you started with custom metrics easily!

Check out the Creating Custom Metrics guide to help you set up custom metrics for binary classification, multiclass classification or regression models. For a more advanced, real-life example, you can read the Advanced Tutorial: Creating a MTBF Custom Metric.