Creating a new report

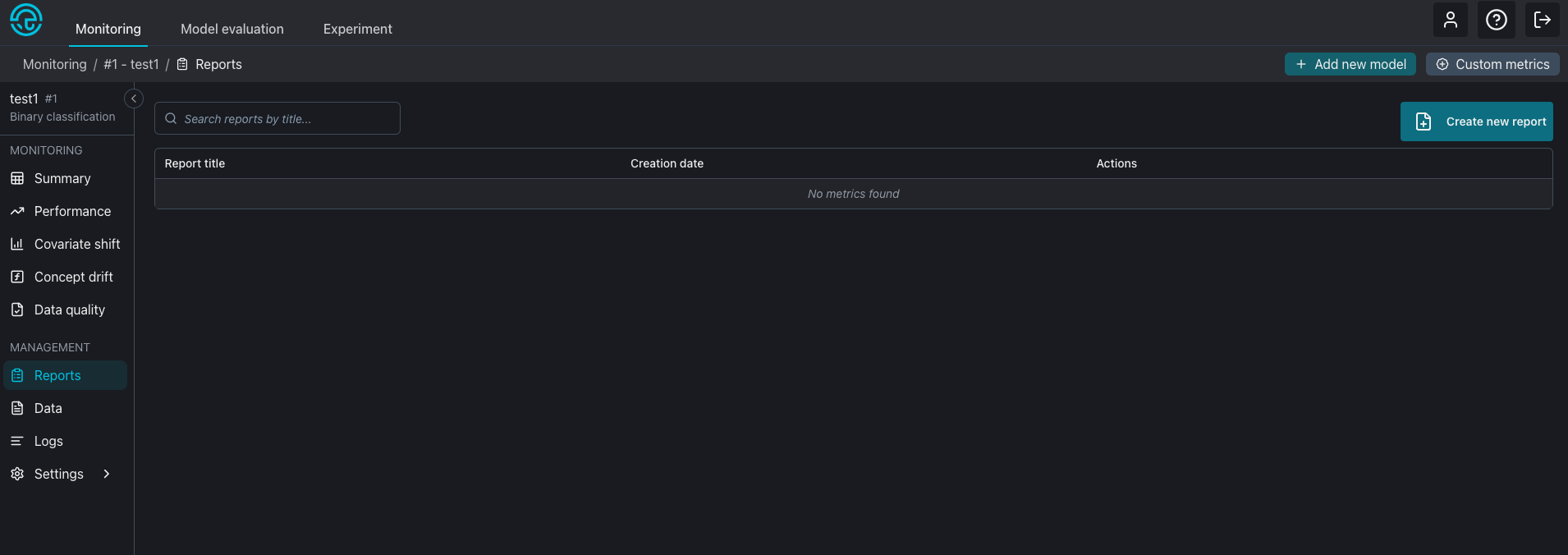

To create a report, the user can navigate to the Reports feature by selecting a model and clicking on Reports in the left menu, under Management, and then clicking the 'Create new report' button at the top right of the screen.

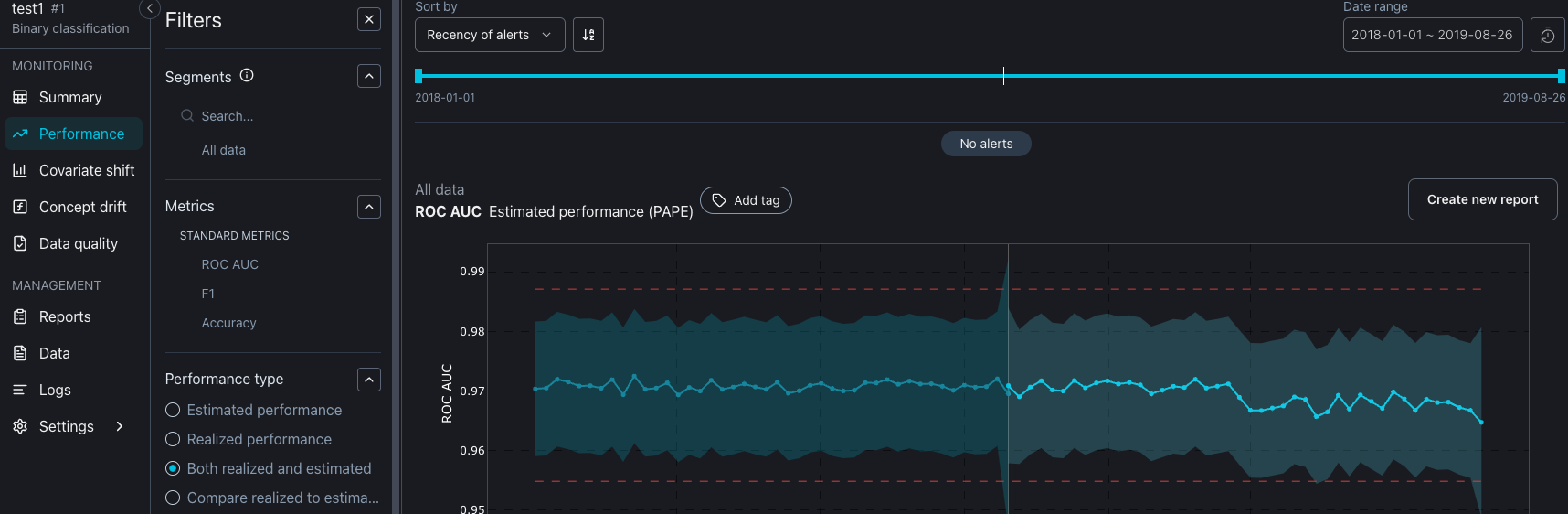

It is also possible to create a new report from the Monitoring dashboards, such as Performance, by clicking the 'Create new report' button at the top of each plot.

New report options

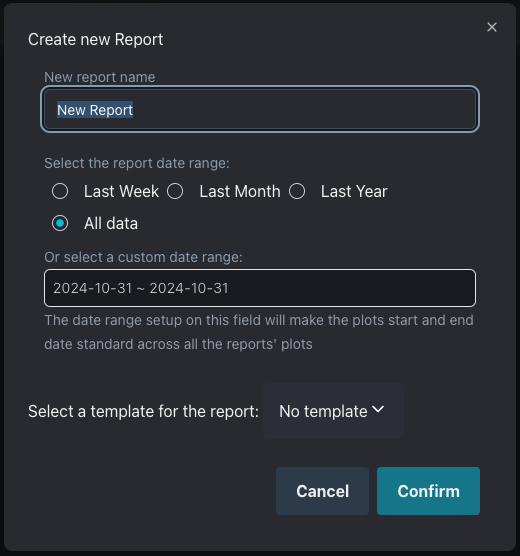

Clicking the 'Create new report' button opens a modal where the user sets up the initial report configuration.

The user can set up a report name, date range and template.

Report name

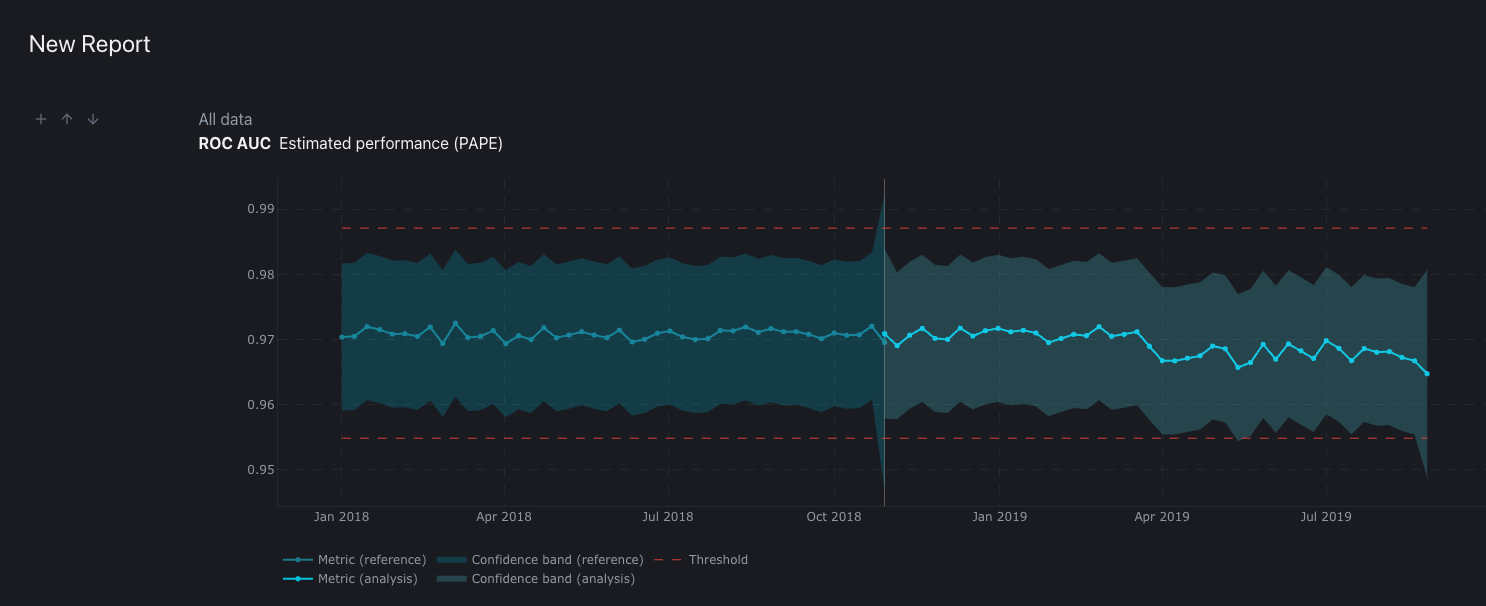

The report name is also the report title. If no name is provided, the report receives the name 'New Report'. The report name does not need to be unique, and it can be changed after the report is created.

Date range

The report date range is the period of time the report represents. By default, the option 'All data' is selected. The other options are 'Last Week' (last 7 days), 'Last Month' (last 30 days), 'Last Year' (last 365 days) and a custom date range. When a date range is defined, all the plots in the report use the defined start date and end date, making the report a snapshot in time.

By selecting 'All data', the user gets a report that is not a snapshot in time: its date range starts at the first data point of the model's dataset and ends at the last data point. In this case, every new dataset added to the model affects the report plots, because the new data is appended to them.

The user needs to be aware that the report date range cannot be changed after the report is created. If a plot selected for the report has no data in the selected date range, no data will be displayed.
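The date range options described above can be illustrated with a minimal Python sketch. It is not the product's implementation: the function names `resolve_date_range` and `points_in_range`, the `"Custom"` label, and the choice to compute a preset's start as `today` minus the number of days are all assumptions made for illustration.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

DateRange = Tuple[date, date]

# Preset lengths as listed in the modal (labels reused for illustration).
PRESET_DAYS = {"Last Week": 7, "Last Month": 30, "Last Year": 365}


def resolve_date_range(
    option: str,
    today: date,
    data_dates: List[date],
    custom: Optional[DateRange] = None,
) -> Optional[DateRange]:
    """Hypothetical helper: turn a date range option into (start, end)."""
    if option == "All data":
        # Not a snapshot: the bounds follow the model's data, so they
        # move whenever new data points are added.
        if not data_dates:
            return None
        return min(data_dates), max(data_dates)
    if option == "Custom":
        if custom is None:
            raise ValueError("a custom range needs a start and an end date")
        return custom
    # Fixed presets: a snapshot ending today (assumed boundary handling).
    return today - timedelta(days=PRESET_DAYS[option]), today


def points_in_range(
    data_dates: List[date], date_range: Optional[DateRange]
) -> List[date]:
    """Data points a plot would show; an empty list means nothing to display."""
    if date_range is None:
        return []
    start, end = date_range
    return [d for d in data_dates if start <= d <= end]
```

In this sketch, calling `resolve_date_range("All data", ...)` again after appending a newer date to `data_dates` returns a later end date, while a preset such as `"Last Week"` depends only on `today`. A plot whose data falls entirely outside the resolved range yields an empty list from `points_in_range`, which corresponds to the case where no data is displayed.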

Template

The report template provides a pre-filled report with plots and information about the data displayed in the report. If alerts were identified in the report's selected date range, the template also provides some technical information.

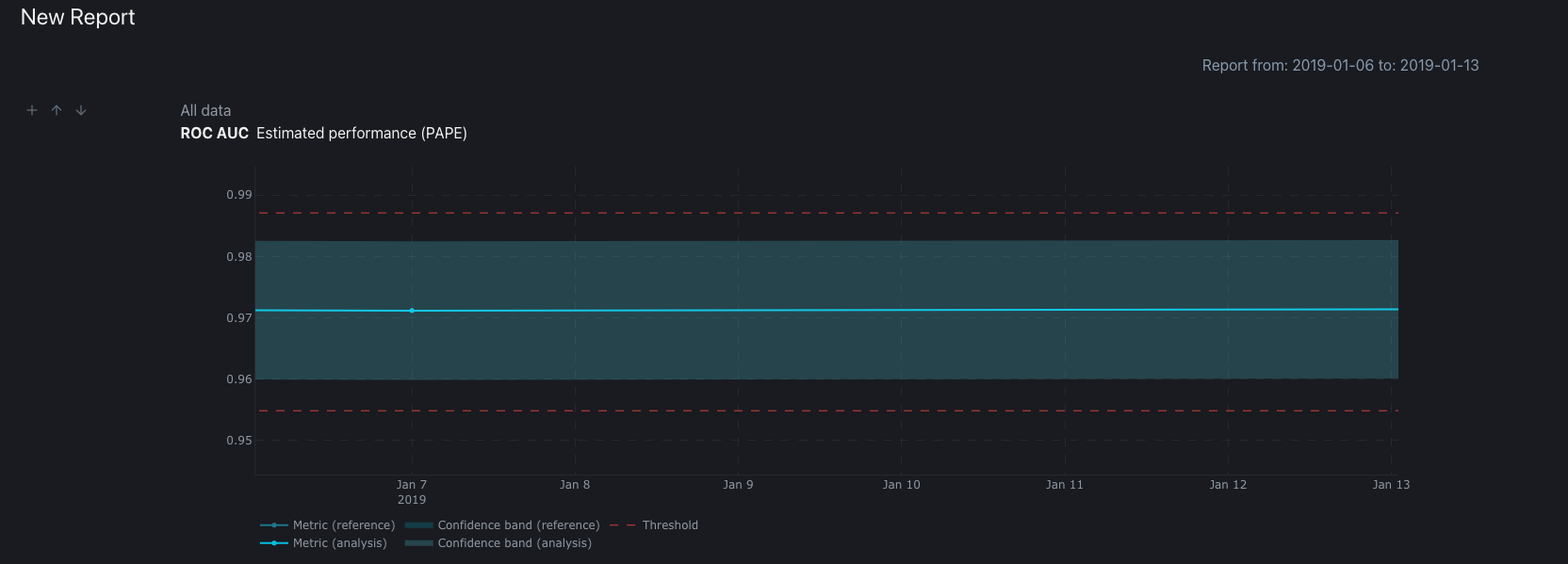

Report creation

After the user clicks 'Confirm' on the Reports page, they are redirected to the newly created report and can start editing it and adding information. If the report is created from a Monitoring dashboard, the new report appears in the 'Add to report' feature, but the user is not redirected to it.