Advanced Tutorial: Creating an MTBF Custom Metric

Creating an MTBF custom metric using the timestamp column from chunk data.

Sometimes we need to create more complicated custom metrics. Let's use Mean Time Between Failures (MTBF) as an example. To calculate it, we need information from columns of the chunk data dataframe, not just the targets and predictions.

We will assume the user has access to a Jupyter Notebook running Python with the NannyML open-source library installed.

Repurposing our binary classification sample dataset

We will be using the same dataset we saw when writing custom metric functions for binary classification. The dataset is publicly accessible here. It is a pure covariate shift dataset that consists of:

- 5 numerical features: ['feature1', 'feature2', 'feature3', 'feature4', 'feature5']
- Target column: y_true
- Model prediction column: y_pred
- The model predicted probability: y_pred_proba
- A timestamp column: timestamp
- An identifier column: identifier
- The probabilities from which the target values have been sampled: estimated_target_probabilities

Here we give the dataset a slightly different meaning. We have some machines operating over a time period, and we perform regular checks on them to see whether any have failed. Aggregating our results over a period, we compare the total operating time of all machines inspected during that period with the number of failures observed in it: MTBF is the operating time divided by the number of failures. This will be our simple MTBF metric.
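Written as a formula, with operating time measured in hours (the unit is our choice for illustration):

$$
\text{MTBF} = \frac{T_{\text{operating}}}{N_{\text{failures}}}
$$

For example, with hypothetical numbers: if the machines inspected during a period operated for 48 hours in total and 4 failures were observed, then MTBF = 48 / 4 = 12 hours.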

We can inspect the dataset with the following code in a Jupyter cell:
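A minimal inspection sketch, assuming the data has been downloaded from the public link into a local file. The path DATASET_PATH and the helpers missing_columns and inspect_dataset are hypothetical names used only for illustration; the expected column names come from the list above.

```python
import pandas as pd

# Column names as listed in the dataset description above.
EXPECTED_COLUMNS = [
    "feature1", "feature2", "feature3", "feature4", "feature5",
    "y_true", "y_pred", "y_pred_proba",
    "timestamp", "identifier", "estimated_target_probabilities",
]


def missing_columns(df: pd.DataFrame) -> list:
    """Return the expected columns that are absent from df."""
    return [col for col in EXPECTED_COLUMNS if col not in df.columns]


def inspect_dataset(df: pd.DataFrame, n_rows: int = 5) -> pd.DataFrame:
    """Print a short summary of df and return its first rows."""
    missing = missing_columns(df)
    if missing:
        print(f"Missing expected columns: {missing}")
    print(f"{len(df)} rows, {df.shape[1]} columns")
    print(df.dtypes)
    # In a Jupyter cell, the returned frame is rendered as a table.
    return df.head(n_rows)


# DATASET_PATH is a hypothetical placeholder for a local copy of the dataset.
# DATASET_PATH = "reference.csv"
# df = pd.read_csv(DATASET_PATH)  # use pd.read_parquet(...) if the file is Parquet
# inspect_dataset(df)
```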

Developing the MTBF metric functions

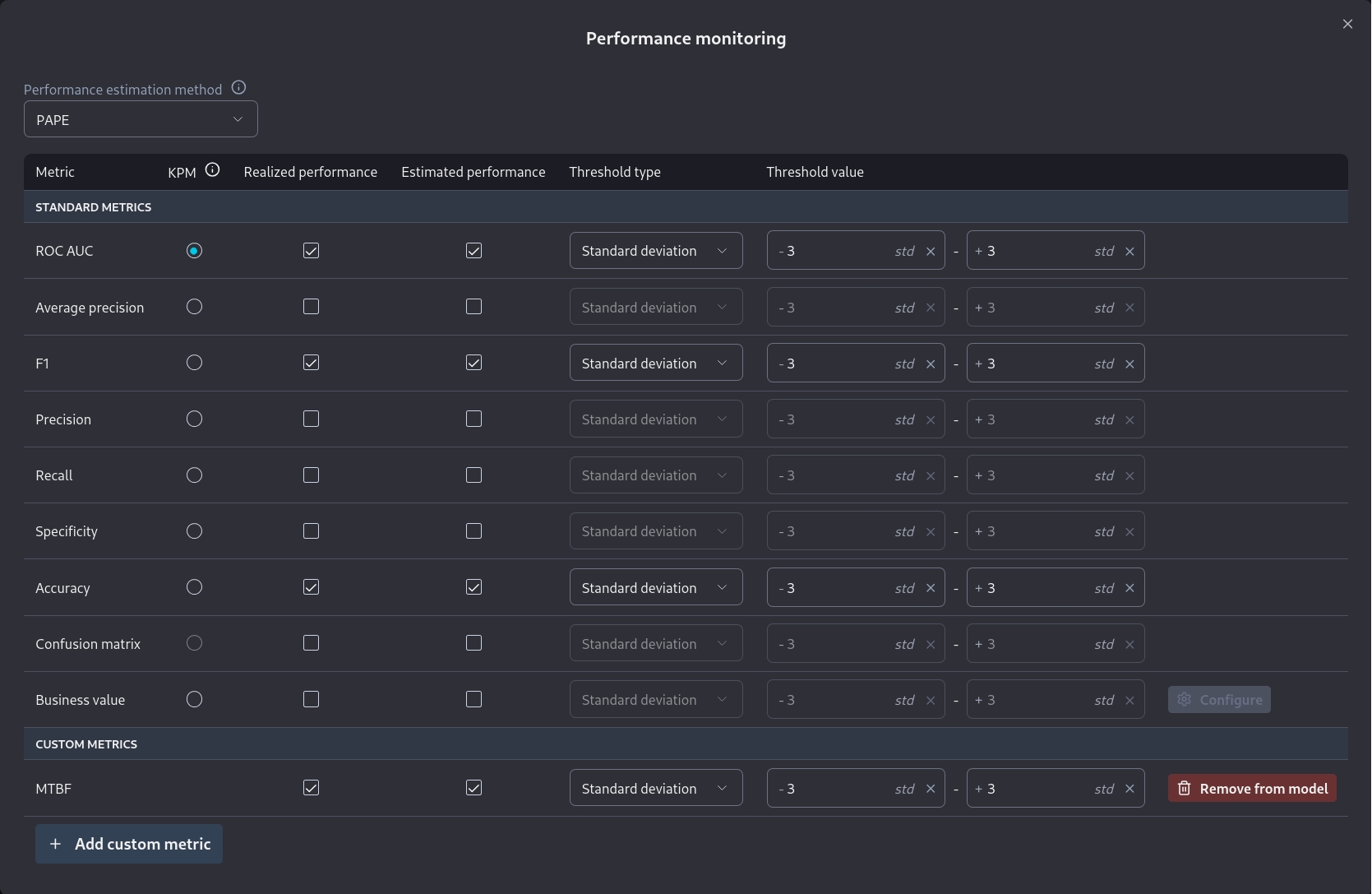

A custom metric in NannyML Cloud is defined by up to two functions. The first is the calculate function, which is mandatory and is used to calculate realized performance for the custom metric. The second is the estimate function, which is optional and is used to estimate performance for the custom metric when target values are not available.

To turn MTBF into a custom metric, we write the calculate function below:
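A minimal sketch of calculate, under our own assumptions: each row with y_true == 1 counts as one observed failure, the span between the earliest and latest timestamp in the chunk stands in for the total operating time, and MTBF is reported in hours. The parameter list mirrors the binary classification custom metric functions as we understand them; check the Custom Metrics Introductory page for the exact signature NannyML Cloud expects.

```python
import pandas as pd


def calculate(
    y_true: pd.Series,
    y_pred: pd.Series,
    y_pred_proba: pd.DataFrame,
    chunk_data: pd.DataFrame,
    labels: list[str],
    class_probability_columns: list[str],
    **kwargs,
) -> float:
    """Realized MTBF for one chunk, in hours (sketch)."""
    # Assumption: the chunk's timestamp span is the total operating time.
    timestamps = pd.to_datetime(chunk_data["timestamp"])
    operating_hours = (timestamps.max() - timestamps.min()).total_seconds() / 3600
    # Assumption: each positive target (y_true == 1) is one observed failure.
    num_failures = float(y_true.sum())
    if num_failures == 0:
        # MTBF is undefined when no failures were observed in the chunk.
        return float("nan")
    return operating_hours / num_failures
```

For a chunk spanning 12 hours with 2 failures, this sketch returns 6.0.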

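The estimate function is relatively straightforward in our case. Since estimated_target_probabilities gives an estimated failure probability for each row, the expected number of failures is the sum of those probabilities, and that sum takes the place of the observed failure count. Hence we get: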
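A matching sketch of estimate, using the same assumptions about operating time and units as the calculate sketch. Here estimated_target_probabilities is meant to be the argument NannyML Cloud passes to the function, not the oracle column of the same name in the dataset, which is ignored when the model is added. Treating the last column of a DataFrame as the failure probability is our assumption about its layout; the sketch also accepts a plain Series, so when testing in a notebook you could pass the dataset's estimated_target_probabilities column in as a stand-in.

```python
import pandas as pd


def estimate(
    estimated_target_probabilities: pd.DataFrame,
    y_pred: pd.Series,
    y_pred_proba: pd.DataFrame,
    chunk_data: pd.DataFrame,
    labels: list[str],
    class_probability_columns: list[str],
    **kwargs,
) -> float:
    """Estimated MTBF for one chunk, in hours (sketch)."""
    # Assumption: the chunk's timestamp span is the total operating time.
    timestamps = pd.to_datetime(chunk_data["timestamp"])
    operating_hours = (timestamps.max() - timestamps.min()).total_seconds() / 3600
    probabilities = estimated_target_probabilities
    if isinstance(probabilities, pd.DataFrame):
        # Assumption: the failure (positive-class) probability is the last column.
        probabilities = probabilities.iloc[:, -1]
    # Row i fails with probability p_i, so the expected failure count is sum(p_i).
    expected_failures = float(probabilities.sum())
    if expected_failures == 0:
        return float("nan")
    return operating_hours / expected_failures
```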

We can test these functions on the dataset loaded earlier. Once the functions are defined in a Jupyter cell, we can call them directly. Running calculate gives:

Running estimate gives:

The estimated and realized MTBF values are very close, which suggests that we are estimating the metric correctly. They will not match exactly because of the statistical nature of the problem: the realized value depends on which failures actually occurred, so sampling error always introduces some difference.
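To illustrate why a correct estimate still differs from the realized value, here is a small simulation of our own (not part of NannyML). Targets are drawn as Bernoulli samples from known failure probabilities, so the sum of the probabilities is exactly the expected failure count, yet each sampled count scatters around it. The 1000 inspections with a 10% failure probability are hypothetical numbers.

```python
import numpy as np


def sampled_vs_expected_failures(probabilities, n_trials=2000, seed=0):
    """Compare the expected failure count with counts from sampled targets."""
    rng = np.random.default_rng(seed)
    probabilities = np.asarray(probabilities, dtype=float)
    # The expected number of failures is the sum of the per-row probabilities.
    expected = probabilities.sum()
    # Each trial draws one Bernoulli target per row and counts the failures.
    draws = rng.binomial(1, probabilities, size=(n_trials, probabilities.size))
    realized = draws.sum(axis=1)
    return expected, realized


# Hypothetical: 1000 inspections, each with a 10% failure probability.
probs = np.full(1000, 0.1)
expected, realized = sampled_vs_expected_failures(probs)
print(f"expected failures: {expected:.1f}")
print(f"sampled failures: mean {realized.mean():.1f}, min {realized.min()}, max {realized.max()}")
```

With a fixed operating time, each sampled count yields a slightly different realized MTBF around the estimated one, which is the kind of difference seen between the two values above.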

Testing MTBF in the Cloud product

We saw how to add a binary classification custom metric on the Custom Metrics Introductory page. We can test our metric further by using the dataset in NannyML Cloud. The datasets are publicly available, so we can use the Public Link option when adding data to a new model.

Reference Dataset Public Link:

Monitored Dataset Public Link:

The process of creating a new model is described in the Monitoring a tabular data model guide.

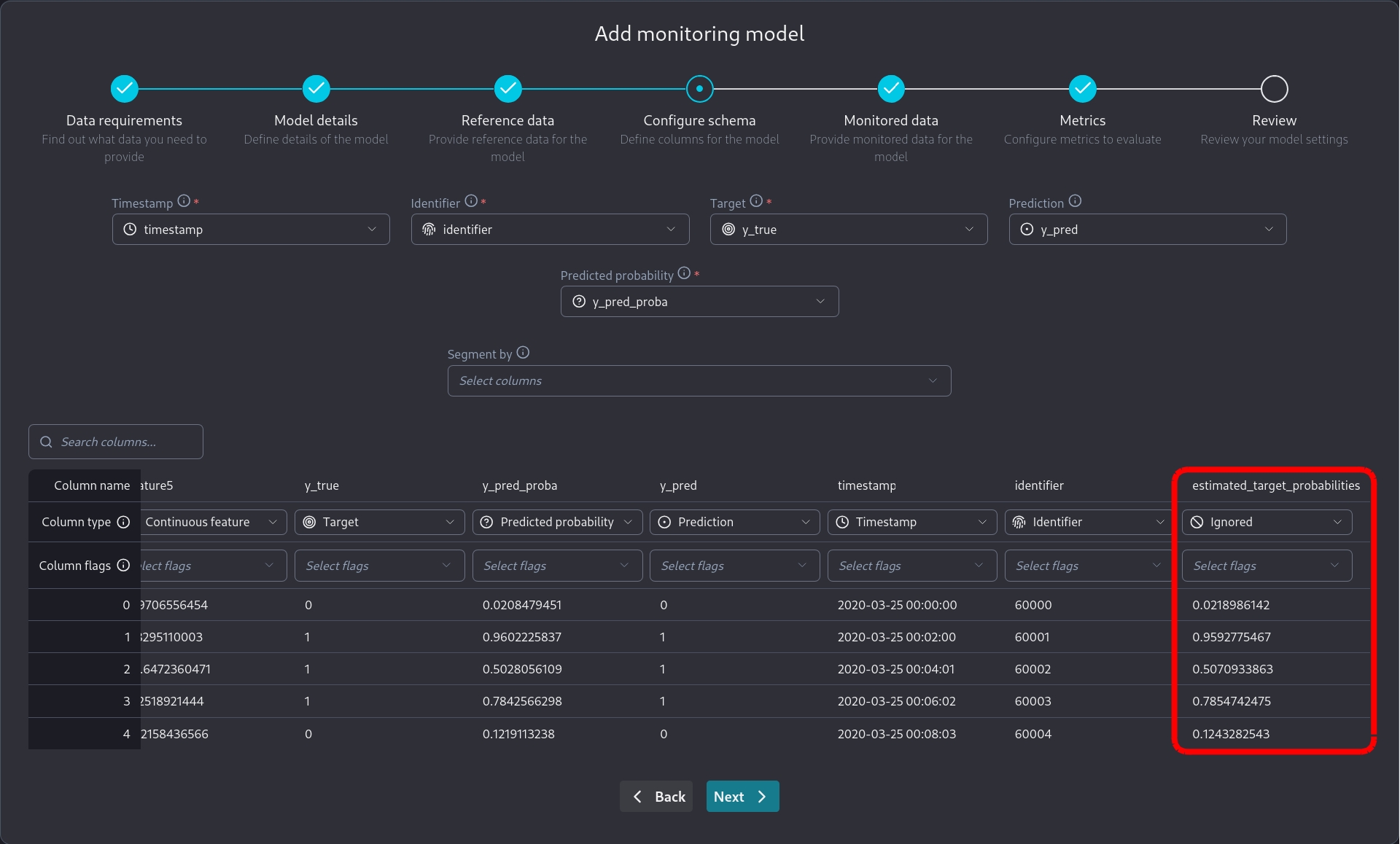

We need to be careful to mark estimated_target_probabilities as an ignored column, since it encodes our oracle knowledge of how the targets were generated rather than anything about the monitored model the dataset represents.

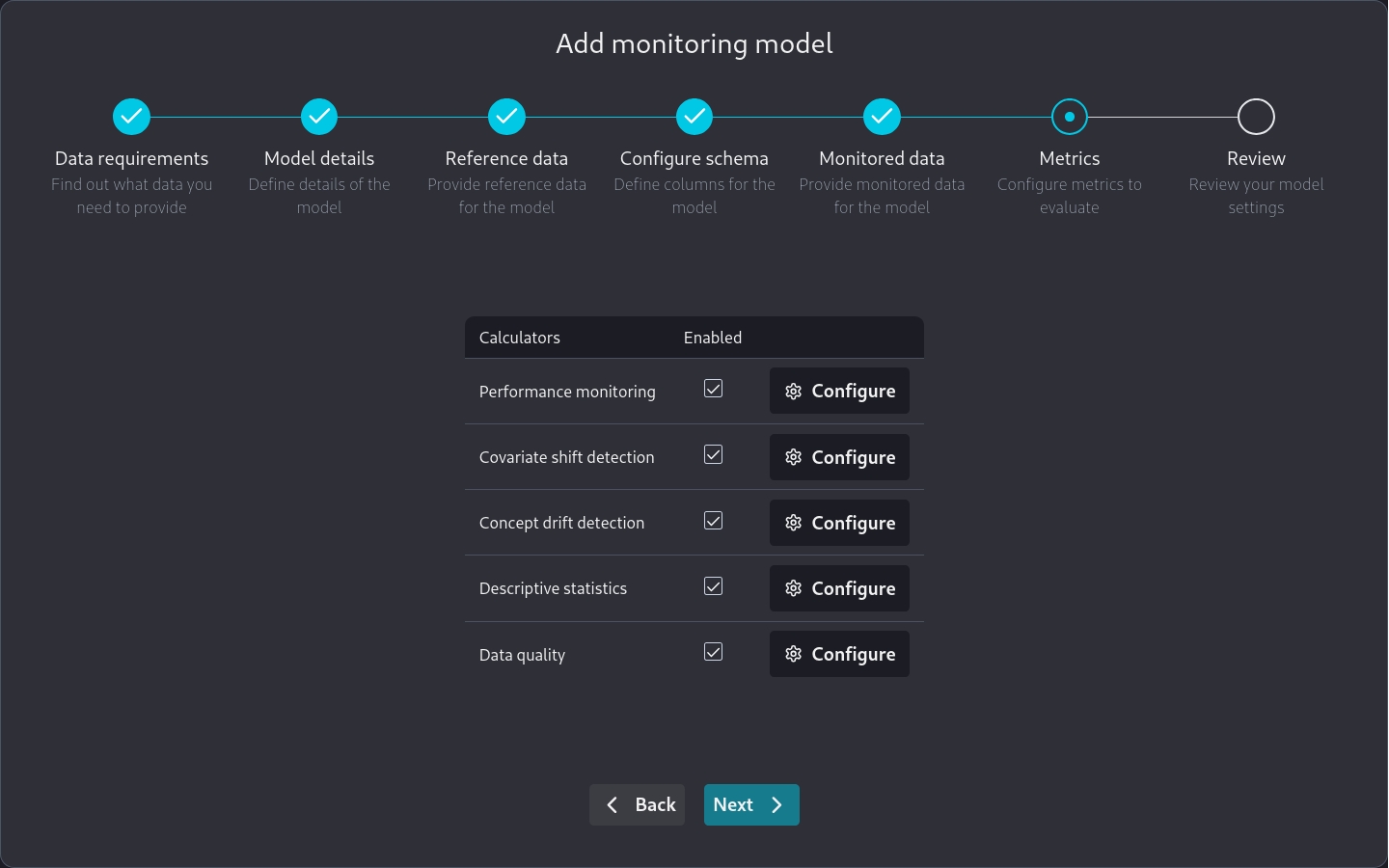

Note that when we are on the Metrics page, we can go to Performance monitoring and directly add a custom metric we have already specified.

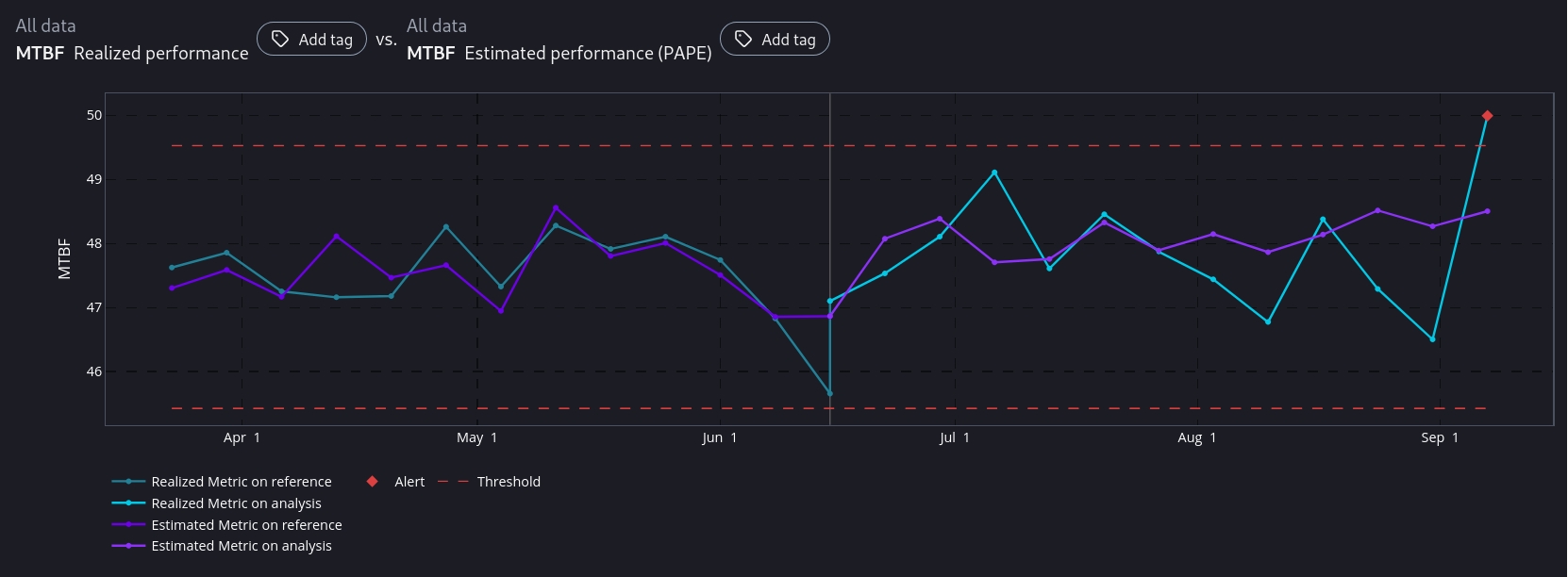

After the model has been added to NannyML Cloud and the first run has been completed we can inspect the monitoring results. Of particular interest to us is the comparison between estimated and realized performance for our custom metric.

NannyML accurately estimates our custom metric across the whole dataset, even in the regions where performance changes. Because the dataset was created specifically to facilitate this test, this indicates that our calculate and estimate functions are implemented correctly.

You may have noticed that custom metrics do not have a sampling error implementation. You will therefore have to judge qualitatively, based on the results, whether the estimated and realized performance match well enough.
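One way to support that judgement, which is our own addition and not a NannyML Cloud feature, is a percentile bootstrap: resample a chunk's failure indicators with replacement, recompute MTBF each time, and check whether the estimated value falls inside the resulting interval. The sketch keeps the chunk's operating time fixed, drops replicates with no failures (where MTBF is undefined), and uses hypothetical numbers.

```python
import numpy as np


def bootstrap_mtbf_interval(operating_hours, failures, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the realized MTBF of one chunk (sketch)."""
    rng = np.random.default_rng(seed)
    failures = np.asarray(failures, dtype=float)
    # One row of resampled indices (drawn with replacement) per bootstrap replicate.
    idx = rng.integers(0, failures.size, size=(n_boot, failures.size))
    counts = failures[idx].sum(axis=1)
    # Replicates without failures have an undefined MTBF, so they are dropped.
    counts = counts[counts > 0]
    if counts.size == 0:
        return float("nan"), float("nan")
    mtbf = operating_hours / counts
    lower, upper = np.quantile(mtbf, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)


# Hypothetical chunk: 1000 operating hours, 1000 inspections, 100 failures (MTBF 10 h).
failures = np.zeros(1000)
failures[:100] = 1
lower, upper = bootstrap_mtbf_interval(1000.0, failures)
print(f"95% bootstrap interval for MTBF: {lower:.2f} h to {upper:.2f} h")
```

If the estimated MTBF for a chunk falls well outside such an interval, that chunk deserves a closer look; an estimate inside it is consistent with sampling error alone.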

Next Steps

You are now ready to use your new custom metric in production. However, you may want to make your implementation more robust to account for the data you will encounter in production. For example, you can add missing value handling to your implementation.
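As an example, here is a variant of calculate that tolerates missing data. It is a minimal sketch under our own assumptions: failures are rows with y_true == 1, operating time is the span of valid timestamps in the chunk (in hours), y_true and chunk_data list the rows in the same order, and returning NaN for a chunk that cannot be evaluated is a choice made for illustration.

```python
import pandas as pd


def calculate(
    y_true: pd.Series,
    y_pred: pd.Series,
    y_pred_proba: pd.DataFrame,
    chunk_data: pd.DataFrame,
    labels: list[str],
    class_probability_columns: list[str],
    **kwargs,
) -> float:
    """Realized MTBF in hours, skipping rows with a missing timestamp or target."""
    # Unparseable or missing timestamps become NaT instead of raising an error.
    timestamps = pd.to_datetime(chunk_data["timestamp"], errors="coerce")
    # Positional masks, so differing indexes on y_true and chunk_data don't matter.
    valid = timestamps.notna().to_numpy() & y_true.notna().to_numpy()
    timestamps = timestamps[valid]
    failures = y_true[valid]
    # At least two valid rows are needed to measure an operating time span.
    if len(timestamps) < 2:
        return float("nan")
    operating_hours = (timestamps.max() - timestamps.min()).total_seconds() / 3600
    num_failures = float(failures.sum())
    if num_failures == 0:
        return float("nan")
    return operating_hours / num_failures
```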